Oracle 19c RAC Installation and RU Upgrade

Revision V1.0

| No. | Date | Author/Modifier | Comments |
|---|---|---|---|
| 1.0 | 2020-02-05 | 谈权 | Initial draft |


1. System Planning

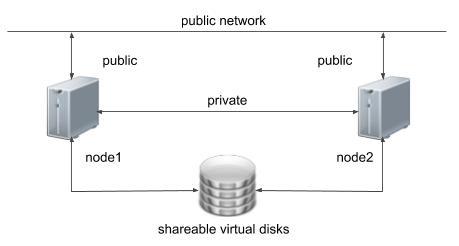

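1.1 Network Planning

- Hostnames may contain only lowercase letters, digits and hyphens (-), and must begin with a lowercase letter.

- For a two-node RAC, 3 SCAN IPs are recommended when a DNS server is available; otherwise configure 1 SCAN IP.

- The private interconnect must go through a dedicated switch, not a direct cable between the nodes.

- If several RAC clusters share the same private-network switch, separate them into different VLANs or use different subnets.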
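The hostname rule in the first bullet can be checked before the OS is installed. Below is a minimal bash sketch. `valid_rac_hostname` is a hypothetical helper, and it encodes only the rule stated above. It does not check length limits or other DNS constraints.

```shell
# Return success if $1 follows the naming rule above:
# lowercase letters, digits and hyphens only, starting with a lowercase letter.
# valid_rac_hostname is a hypothetical helper, not an Oracle tool.
valid_rac_hostname() {
  # C locale so that [a-z] means exactly the ASCII lowercase letters.
  local LC_ALL=C
  [[ $1 =~ ^[a-z][a-z0-9-]*$ ]]
}

# Usage:
#   valid_rac_hostname tqdb21 && echo ok      # accepted
#   valid_rac_hostname 21tqdb || echo bad     # rejected: starts with a digit
```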

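1.2 Storage Planning

Storage is managed by Oracle ASM, and at the OS level the shared disks are bound with udev. If you use ASM Filter Driver (AFD) instead, refer to the following My Oracle Support notes:

- ASMFD (ASM Filter Driver) Support on OS Platforms (Certification Matrix) (Doc ID 2034681.1)

- How to configure and Create a Disk group using ASMFD (Doc ID 2053045.1)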
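A common pattern for the udev binding mentioned above on RHEL/CentOS 7 is one rule per shared LUN. The rule matches the disk's SCSI WWID (read with `/usr/lib/udev/scsi_id -g -u -d /dev/sdX`) and creates a stable symlink owned by grid:asmadmin. The helper below is only a sketch that prints such a rule. The function name, the `asm-*` symlink names and the rules file name `99-oracle-asmdevices.rules` are assumptions for illustration, and the WWIDs must come from your own disks.

```shell
# Print one udev rule binding a shared disk (identified by its SCSI WWID)
# to /dev/<symlink>, owned by grid:asmadmin with mode 0660.
# make_asm_udev_rule is a hypothetical helper; adjust owner/group to your plan.
make_asm_udev_rule() {
  local wwid=$1 link=$2
  # $name must reach udev literally (udev expands it), hence the single quotes.
  printf '%s' 'KERNEL=="sd*", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d /dev/$name", '
  printf 'RESULT=="%s", SYMLINK+="%s", OWNER="grid", GROUP="asmadmin", MODE="0660"\n' "$wwid" "$link"
}

# Usage as root, once per shared disk (the WWID is a placeholder):
#   make_asm_udev_rule "<wwid-of-disk>" asm-ocr1 >> /etc/udev/rules.d/99-oracle-asmdevices.rules
#   udevadm control --reload-rules
#   udevadm trigger --type=devices --action=change
```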

1.3 Operating System Specification

Operating system: CentOS Linux 7.7

Disk partitioning (memory: 4 GB):

| Partition | Size |
|---|---|
| SWAP | 8G |
| / | 100G |
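The 8 GB swap above is larger than the sizing rule in Oracle's Linux installation guides would require for 4 GB of RAM. As I understand that rule:

- RAM between 1 GB and 2 GB: 1.5 × RAM.
- RAM between 2 GB and 16 GB: equal to RAM.
- RAM above 16 GB: 16 GB.

Memory reserved for HugePages is deducted from RAM first. The function below is a sketch of that rule. The function name and the handling of exactly 2 GB are my assumptions, so confirm against the installation guide for your release.

```shell
# Suggested minimum swap in MB, from RAM in MB (sizing rule described above).
# Optional 2nd argument: MB reserved for HugePages, deducted from RAM first.
# recommended_swap_mb is a hypothetical helper name.
recommended_swap_mb() {
  local ram_mb=$1 hugepages_mb=${2:-0}
  local ram=$(( ram_mb - hugepages_mb ))
  if [ "$ram" -lt 2048 ]; then
    echo $(( ram * 3 / 2 ))   # 1-2 GB: 1.5 x RAM (exactly 2 GB falls in the next band)
  elif [ "$ram" -le 16384 ]; then
    echo "$ram"               # 2-16 GB: equal to RAM
  else
    echo 16384                # above 16 GB: 16 GB
  fi
}

# Usage, taking RAM from /proc/meminfo (kB -> MB):
#   recommended_swap_mb $(( $(awk '/^MemTotal:/ {print $2}' /proc/meminfo) / 1024 ))
```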

1.4 数据库相关介质

| 介质 | 文件名 |

|---|---|

| Oracle grid | LINUX.X64_193000_grid_home.zip |

| Oracle database | LINUX.X64_193000_db_home.zip |

| Patch 30501910: GI RELEASE UPDATE 19.6.0.0.0 说明:由于 GI RU 包含 DB RU,所以 RAC 环境升级 DB 时,还将使用此 Patch。 | p30501910_190000_Linux-x86-64.zip |

| Patch 30557433: DATABASE RELEASE UPDATE 19.6.0.0.0 说明:单实例升级 DB RU 时,使用此 Patch。 | p30557433_190000_Linux-x86-64.zip |

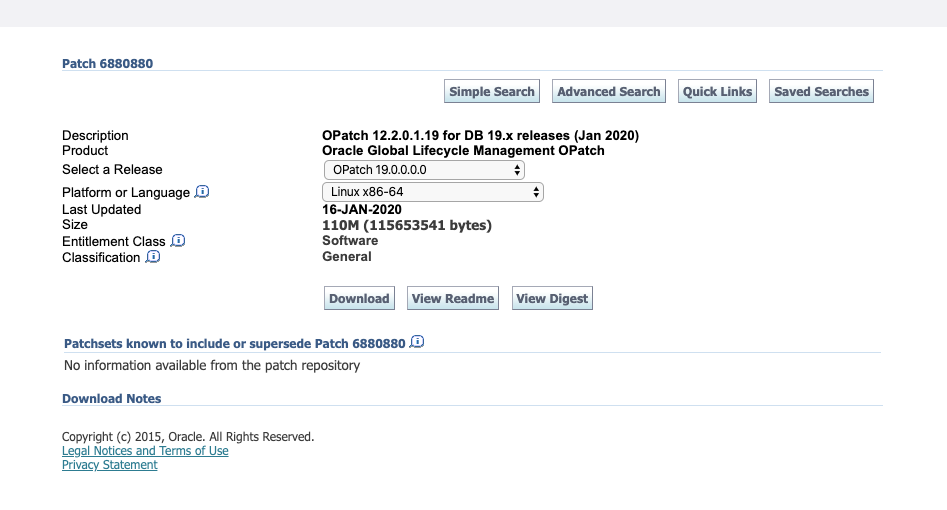

| OPatch | p6880880_190000_Linux-x86-64.zip |

1.5 Summary: Overall Plan for the Two-Node RAC

Host plan for rac1/rac2:

| Item | rac1 | rac2 |
|---|---|---|
| Operating system | CentOS 7.7 | CentOS 7.7 |
| Hostname | tqdb21 | tqdb22 |
| IP address (Public) (enp0s8) | 192.168.6.21 | 192.168.6.22 |
| IP address (Private) (enp0s9) | 172.16.8.21 | 172.16.8.22 |
| IP address (Virtual) (enp0s8) | 192.168.6.23 | 192.168.6.24 |
| IP address (SCAN) (enp0s8) | 192.168.6.20 | 192.168.6.20 |
| GRID user environment | export ORACLE_SID=+ASM1 | export ORACLE_SID=+ASM2 |
| | export ORACLE_BASE=/u01/app/grid | export ORACLE_BASE=/u01/app/grid |
| | export ORACLE_HOME=/u01/app/19c/grid | export ORACLE_HOME=/u01/app/19c/grid |
| | export TNS_ADMIN=$ORACLE_HOME/network/admin | export TNS_ADMIN=$ORACLE_HOME/network/admin |
| ORACLE user environment | export ORACLE_SID=tqdb1 | export ORACLE_SID=tqdb2 |
| | export DB_UNIQUE_NAME=tqdb | export DB_UNIQUE_NAME=tqdb |
| | export ORACLE_UNQNAME=tqdb | export ORACLE_UNQNAME=tqdb |
| | export ORACLE_BASE=/u01/app/oracle | export ORACLE_BASE=/u01/app/oracle |
| | export ORACLE_HOME=/u01/app/oracle/product/19c/dbhome | export ORACLE_HOME=/u01/app/oracle/product/19c/dbhome |
| | export TNS_ADMIN=$ORACLE_HOME/network/admin | export TNS_ADMIN=$ORACLE_HOME/network/admin |
| GRID version | 19.6.0.0.0 | 19.6.0.0.0 |
| DB version | 19.6.0.0.0 | 19.6.0.0.0 |
| Shared storage: OCR & voting disk | 2G * 3 | 2G * 3 |
| Shared storage: ASM DATA disk group | 50G * 2 | 50G * 2 |

2. Environment Configuration

2.1 Network Configuration

Host plan for rac1/rac2:

| Item | rac1 | rac2 |
|---|---|---|
| Hostname | tqdb21 | tqdb22 |
| IP address (Public) (enp0s8) | 192.168.6.21 | 192.168.6.22 |
| IP address (Private) (enp0s9) | 172.16.8.21 | 172.16.8.22 |
| IP address (Virtual) (enp0s8) | 192.168.6.23 | 192.168.6.24 |
| IP address (SCAN) (enp0s8) | 192.168.6.20 | 192.168.6.20 |

Example /etc/hosts (`# vim /etc/hosts`), identical on both nodes:

```
127.0.0.1      localhost
# Public (enp0s8)
192.168.6.21   tqdb21
192.168.6.22   tqdb22
# Private (enp0s9)
172.16.8.21    tqdb21-priv
172.16.8.22    tqdb22-priv
# Virtual (enp0s8)
192.168.6.23   tqdb21-vip
192.168.6.24   tqdb22-vip
# SCAN
192.168.6.20   tqdb-cluster tqdb-cluster-scan
```
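Before installing, it is worth checking on both nodes that every name in the plan is present in /etc/hosts. This is a minimal sketch that assumes the hosts-file layout above. `check_hosts_entries` is a hypothetical helper, and it only reads the file; it does not query DNS.

```shell
# Verify that each given hostname appears in a hosts-format file.
# Prints "<ip> <name>" for names found and "MISSING <name>" otherwise;
# returns non-zero if any name is missing.
# check_hosts_entries is a hypothetical helper name.
check_hosts_entries() {
  local file=$1 name ip rc=0
  shift
  for name in "$@"; do
    # Skip comment lines; compare the name against fields 2..NF as whole words.
    ip=$(awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }' "$file")
    if [ -n "$ip" ]; then
      echo "$ip $name"
    else
      echo "MISSING $name"
      rc=1
    fi
  done
  return $rc
}

# Usage on each node:
#   check_hosts_entries /etc/hosts tqdb21 tqdb22 tqdb21-priv tqdb22-priv \
#       tqdb21-vip tqdb22-vip tqdb-cluster-scan
```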

2.2 Set the Default Boot Target to multi-user.target

Check the current default target:
```
systemctl get-default
```
Set the default target to multi-user.target (text mode, no graphical desktop at boot):
```
systemctl set-default multi-user.target
```

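2.3 Disable NUMA at the OS Level

```
# 1. Edit /etc/default/grub and append numa=off to GRUB_CMDLINE_LINUX.
# 2. Regenerate the GRUB configuration (BIOS boot; /etc/grub2.cfg is a symlink to this file,
#    and on UEFI systems the file is /boot/efi/EFI/centos/grub.cfg):
grub2-mkconfig -o /boot/grub2/grub.cfg
# 3. Reboot the OS:
reboot
# 4. After the reboot, verify (dmesg should report "NUMA turned off"):
dmesg | grep -i numa
# Double-check that numa=off is on the kernel command line:
cat /proc/cmdline
```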
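Step 1 here and the IPv6 change in 2.5 both edit the same GRUB_CMDLINE_LINUX line, so both can be applied before a single reboot. The sketch below adds an argument idempotently. It assumes the stock `GRUB_CMDLINE_LINUX="..."` quoting shown in 2.5. `grub_add_kernel_arg` is a hypothetical helper, and arguments should stay simple key=value words, since they are used inside a regex and a sed expression. You still need to run grub2-mkconfig and reboot afterwards.

```shell
# Append a kernel argument to GRUB_CMDLINE_LINUX in /etc/default/grub (or the
# file given as $2), unless it is already present as a separate word.
# grub_add_kernel_arg is a hypothetical helper name.
grub_add_kernel_arg() {
  local arg=$1 file=${2:-/etc/default/grub}
  # Already inside the quoted value as its own word? Leave the file untouched.
  if grep -Eq "^GRUB_CMDLINE_LINUX=\"([^\"]* )?${arg}( [^\"]*)?\"" "$file"; then
    return 0
  fi
  # Insert the argument just before the closing quote.
  sed -i -E "s/^(GRUB_CMDLINE_LINUX=\"[^\"]*)\"/\1 ${arg}\"/" "$file"
}

# Usage as root, then regenerate grub.cfg and reboot:
#   grub_add_kernel_arg numa=off
#   grub_add_kernel_arg ipv6.disable=1
#   grub2-mkconfig -o /boot/grub2/grub.cfg
```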

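2.4 Disable the Firewall

CentOS 6 used the iptables service, but on CentOS 7 the firewall is managed by firewalld. Running `chkconfig iptables off` therefore fails with `error reading information on service iptables: No such file or directory`. Stop and disable firewalld instead:

    # systemctl stop firewalld.service
    # systemctl disable firewalld.service
    # systemctl status firewalld.service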

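2.5 Disable IPv6 on CentOS 7

Edit /etc/default/grub and add `ipv6.disable=1` to GRUB_CMDLINE_LINUX. This example also carries `numa=off` from 2.3:
```
[root@tqdb21: ~]# vim /etc/default/grub
GRUB_TIMEOUT=5
GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"
GRUB_DEFAULT=saved
GRUB_DISABLE_SUBMENU=true
GRUB_TERMINAL_OUTPUT="console"
GRUB_CMDLINE_LINUX="ipv6.disable=1 spectre_v2=retpoline rhgb quiet numa=off"
GRUB_DISABLE_RECOVERY="true"
```
Then regenerate the GRUB configuration, reboot, and verify. Once IPv6 is disabled, `ip addr | grep inet6` prints nothing.

    # grub2-mkconfig -o /boot/grub2/grub.cfg
    # reboot
    # lsmod | grep ipv6
    # ip addr | grep inet6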
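After the reboot, the NUMA and IPv6 arguments from 2.3 and 2.5 can be confirmed together on each node by reading /proc/cmdline. This is a small sketch. `cmdline_has` and the `CMDLINE_FILE` override are hypothetical names, and matching uses `grep -w` whole-word semantics.

```shell
# Succeed only if every given argument appears as a whole word (grep -w
# semantics) on the kernel command line; CMDLINE_FILE overrides /proc/cmdline.
# cmdline_has is a hypothetical helper name.
cmdline_has() {
  local file=${CMDLINE_FILE:-/proc/cmdline} arg
  for arg in "$@"; do
    # -F: literal match (the dot in ipv6.disable=1 is not a wildcard)
    grep -qwF -- "$arg" "$file" || { echo "missing: $arg"; return 1; }
  done
}

# Usage after the reboot:
#   cmdline_has numa=off ipv6.disable=1 && echo "kernel arguments active"
```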

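2.6 Disable SELinux

Edit /etc/selinux/config (`# vim /etc/selinux/config`) and set:
```
SELINUX=disabled
```
The change takes effect after a reboot. Until then, `setenforce 0` switches the running system to permissive mode.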
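To make the SELinux change without an interactive editor, the setting can be rewritten with sed. This is a sketch. `set_selinux_mode` is a hypothetical helper. It targets /etc/selinux/config itself rather than the /etc/sysconfig/selinux symlink, because GNU `sed -i` replaces a symlink with a regular file.

```shell
# Set the SELINUX= line in an SELinux config file (default /etc/selinux/config).
# Only the line starting with "SELINUX=" changes; SELINUXTYPE= is left alone.
# set_selinux_mode is a hypothetical helper name.
set_selinux_mode() {
  local mode=$1 file=${2:-/etc/selinux/config}
  case $mode in
    enforcing|permissive|disabled) ;;
    *) echo "invalid mode: $mode" >&2; return 2 ;;
  esac
  sed -i "s/^SELINUX=.*/SELINUX=${mode}/" "$file"
}

# Usage as root (takes effect after a reboot):
#   set_selinux_mode disabled
```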

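2.7 Disable Unneeded Services

    # systemctl disable firewalld
    # systemctl disable avahi-daemon
    # systemctl disable bluetooth
    # systemctl disable cpuspeed
    # systemctl disable cups
    # systemctl disable firstboot
    # systemctl disable ip6tables
    # systemctl disable iptables
    # systemctl disable pcmcia

Some of these names are RHEL 6 era services that may not exist as units on CentOS 7. Examples are cpuspeed, firstboot and pcmcia, and also iptables/ip6tables unless the iptables-services package is installed. For a missing unit, systemctl just reports an error, which can be ignored.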
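To avoid those errors, a loop can act only on units that are actually installed. This is a minimal bash sketch. `disable_if_present` is a hypothetical helper that reads the unit file list from `systemctl list-unit-files`. It calls stop and disable separately rather than relying on `disable --now`, which may not be available in the systemd shipped with CentOS 7.

```shell
# Stop and disable each named service only if its unit file is installed;
# otherwise print a "skip" line instead of letting systemctl fail.
# disable_if_present is a hypothetical helper name.
disable_if_present() {
  local unit installed
  # First column of list-unit-files is the unit file name, e.g. "cups.service".
  installed=$(systemctl list-unit-files --no-legend 2>/dev/null | awk '{print $1}') || true
  for unit in "$@"; do
    if grep -qxF "${unit}.service" <<<"$installed"; then
      systemctl stop "${unit}.service"
      systemctl disable "${unit}.service"
    else
      echo "skip: ${unit}.service not installed"
    fi
  done
}

# Usage as root:
#   disable_if_present firewalld avahi-daemon bluetooth cpuspeed cups \
#       firstboot ip6tables iptables pcmcia
```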

2.8 Disable Transparent HugePages (THP)

Disable the Transparent HugePages feature (RHEL 7 / OL 7) by adding the following to /etc/rc.d/rc.local (`# vim /etc/rc.d/rc.local`):
```
if test -f /sys/kernel/mm/transparent_hugepage/enabled; then
  echo never > /sys/kernel/mm/transparent_hugepage/enabled
fi
if test -f /sys/kernel/mm/transparent_hugepage/defrag; then
  echo never > /sys/kernel/mm/transparent_hugepage/defrag
fi
```
Make rc.local executable, run it once, and check the result. On RHEL 7, rc.local is only run at boot when it has the execute bit.
```
# chmod +x /etc/rc.d/rc.local
# source /etc/rc.d/rc.local
# cat /sys/kernel/mm/transparent_hugepage/enabled
always madvise [never]      <<--- THP disabled
# cat /sys/kernel/mm/transparent_hugepage/defrag
always defer defer+madvise madvise [never]
```
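The active THP mode is the bracketed word in each sysfs file, so the check above can be scripted. This is a small sketch. `thp_mode` is a hypothetical helper, and its optional path argument allows testing on a copy of the file.

```shell
# Print the active mode from a THP sysfs file, i.e. the word in brackets:
# "always madvise [never]" -> never
# thp_mode is a hypothetical helper name.
thp_mode() {
  local file=${1:-/sys/kernel/mm/transparent_hugepage/enabled}
  sed -n 's/.*\[\([^]]*\)].*/\1/p' "$file"
}

# Usage (both should print "never" after the change above):
#   thp_mode /sys/kernel/mm/transparent_hugepage/enabled
#   thp_mode /sys/kernel/mm/transparent_hugepage/defrag
```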

2.9 NOZEROCONF

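(This setting is also used in 12c RAC configurations.)

The MOS note "CSSD Fails to Join the Cluster After Private Network Recovered if avahi Daemon is up and Running (Doc ID 1501093.1)" recommends adding NOZEROCONF=yes to /etc/sysconfig/network:

    # echo "NOZEROCONF=yes" >> /etc/sysconfig/network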
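The plain `>>` above adds a duplicate line every time the step is repeated. The sketch below appends only when the exact line is missing. `ensure_line` is a hypothetical helper, and it assumes the target file ends with a newline.

```shell
# Append a line to a file only if an identical line is not already present.
# ensure_line is a hypothetical helper name.
ensure_line() {
  local line=$1 file=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage as root, on both nodes:
#   ensure_line "NOZEROCONF=yes" /etc/sysconfig/network
```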

2.10 Install Required Packages

Configure the EPEL (Extra Packages for Enterprise Linux) repository.

RHEL 6:

    # wget https://dl.fedoraproject.org/pub/epel/epel-release-latest-6.noarch.rpm
    # rpm -Uvh epel-release-latest-6.noarch.rpm

RHEL 7 (including CentOS 7):

    # wget https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm
    # rpm -Uvh epel-release-latest-7.noarch.rpm

Install the OS packages. The command queries every package and passes only the missing ones to yum; rpm reports each of these as "package X is not installed". `xargs -r` skips the yum call when nothing is missing. The rlwrap aliases in the grid and oracle profiles (2.18, 2.19) also need the rlwrap package from EPEL (`yum install -y rlwrap`).

    # rpm -q --qf '%{NAME}-%{VERSION}-%{RELEASE} (%{ARCH})\n' \
        binutils compat-libstdc++-33 compat-libcap1 elfutils-libelf elfutils-libelf-devel \
        gcc gcc-c++ glibc glibc-common glibc-devel glibc-headers ksh libaio libaio-devel \
        libgcc libstdc++ libXext libXtst kde-l10n-Chinese.noarch libstdc++-devel make \
        xclock sysstat man nfs-utils lsof expect unzip redhat-lsb openssh-clients \
        smartmontools unixODBC perl telnet vsftpd ntsysv lsscsi libX11 libxcb libXau \
        libXi strace sg3_utils kexec-tools net-tools unixODBC-devel \
        | grep "not installed" | awk '{print $2}' | xargs -r yum install -y

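2.11 Install the cvuqdisk Package

The package ships with the grid software. After unzipping the grid installation media in step 3.1 (GRID installation), install cvuqdisk-1.0.10-1.rpm as root on both nodes, copying the rpm to tqdb22 as well. CVUQDISK_GRP names the group that owns cvuqdisk; oinstall is also the default when it is not set.

    # cd /u01/app/19c/grid/cv/rpm
    # export CVUQDISK_GRP=oinstall
    # rpm -ivh cvuqdisk*.rpm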

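2.12 Time Synchronization

Use the chrony service. Edit /etc/chrony.conf (`# vim /etc/chrony.conf`) and add a server line pointing at your NTP server:

    server <NTP server address> iburst

Restart and enable the time synchronization service:

    # systemctl restart chronyd.service
    # systemctl enable chronyd.service

Check the time sources:

    # chronyc sources -v

Check the synchronization statistics:

    # chronyc sourcestats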

2.13 Create Users and Groups

Create the groups. The GIDs must be identical on both nodes.

    # groupadd -g 600 oinstall
    # groupadd -g 601 dba
    # groupadd -g 602 oper
    # groupadd -g 603 asmadmin
    # groupadd -g 604 asmoper
    # groupadd -g 605 asmdba
    # groupadd -g 606 backupdba
    # groupadd -g 607 dgdba
    # groupadd -g 608 kmdba
    # groupadd -g 609 racdba

Create the oracle and grid users, again with identical UIDs on both nodes:

    # useradd -u 600 -g oinstall -G asmadmin,dba,asmdba,backupdba,dgdba,kmdba,racdba,oper oracle
    # useradd -u 601 -g oinstall -G oper,asmadmin,asmdba,asmoper,dba,racdba grid

Set the passwords of the oracle and grid users:

    # passwd oracle
    # passwd grid

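2.14 Kernel Parameters

Set the kernel parameters, adjusting them to your environment (`# vim /etc/sysctl.conf`):
```
# oracle-database-preinstall-19c
fs.file-max = 6815744
kernel.sem = 250 32000 100 128
kernel.shmmni = 4096
kernel.shmall = 1073741824
kernel.shmmax = 4398046511104
kernel.panic_on_oops = 1
net.core.rmem_default = 262144
net.core.rmem_max = 4194304
net.core.wmem_default = 262144
net.core.wmem_max = 1048576
net.ipv4.conf.all.rp_filter = 2
net.ipv4.conf.default.rp_filter = 2
fs.aio-max-nr = 1048576
net.ipv4.ip_local_port_range = 9000 65500
# HugePages_Total = SGA 1 GB (1024 MB) / 2 MB per page + 20 spare pages = 532
vm.nr_hugepages = 532
vm.swappiness = 5
```
The values above are the oracle-database-preinstall-19c defaults. The shared memory parameters can also be sized from physical memory:

| Parameter | Description | Recommended value |
|---|---|---|
| kernel.shmmni | Maximum number of shared memory segments system-wide | 4096 |
| kernel.shmmax | Maximum size of a single shared memory segment, in bytes; normally at least sga_max_size | Physical memory (GB) × 0.7 × 1024 × 1024 × 1024 |
| kernel.shmall | Total shared memory allowed, in pages of 4 KB, i.e. kernel.shmmax / 4096 | Physical memory (GB) × 0.7 × 1024 × 1024 KB / 4 KB |
| kernel.sem | Semaphore limits used for inter-process communication (SEMMSL SEMMNS SEMOPM SEMMNI) | 250 32000 100 128 |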
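The 70% formulas in the table can be evaluated directly from MemTotal. This is a sketch that assumes a 4096-byte page (check with `getconf PAGE_SIZE`). `shm_from_memkb` is a hypothetical helper. MemTotal is somewhat below the nominal RAM size, so real results are a little smaller than the round-number formula. Whichever values you use, kernel.shmmax must stay at least as large as the SGA.

```shell
# Derive kernel.shmmax (bytes) and kernel.shmall (pages) from MemTotal in kB,
# using the 70%-of-RAM rule in the table above.
# shm_from_memkb is a hypothetical helper name.
shm_from_memkb() {
  local mem_kb=$1 page_bytes=${2:-4096}
  local shmmax=$(( mem_kb * 1024 * 7 / 10 ))   # 70% of RAM, in bytes
  local shmall=$(( shmmax / page_bytes ))       # same amount, in pages
  echo "kernel.shmmax = $shmmax"
  echo "kernel.shmall = $shmall"
}

# Usage:
#   shm_from_memkb "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)" "$(getconf PAGE_SIZE)"
```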

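How to calculate vm.nr_hugepages. Hugepagesize is listed in /proc/meminfo and is normally 2048 kB on x86-64.

vm.nr_hugepages = sga_max_size / Hugepagesize = 12 GB / 2048 KB = 6144 (it can be set slightly larger than this figure)

Apply the settings:

    # sysctl -p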
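The same division can be scripted. The sketch below rounds up so that a partial page still gets a whole HugePage, and it takes an optional number of spare pages like the "+20" in the sysctl.conf comment. `nr_hugepages_for_sga` is a hypothetical helper. Oracle also publishes a script that sizes HugePages from the running instances' shared memory segments (MOS Doc ID 401749.1).

```shell
# Number of HugePages for an SGA of the given size in MB, plus optional spare
# pages. The HugePage size (kB) defaults to 2048; on a real host pass the
# Hugepagesize value from /proc/meminfo.
# nr_hugepages_for_sga is a hypothetical helper name.
nr_hugepages_for_sga() {
  local sga_mb=$1 spare=${2:-0} hp_kb=${3:-2048}
  # Ceiling division: (SGA in kB + page size - 1) / page size
  echo $(( (sga_mb * 1024 + hp_kb - 1) / hp_kb + spare ))
}

# Usage:
#   nr_hugepages_for_sga 12288            # 12 GB SGA, as in the formula above
#   nr_hugepages_for_sga 1024 20 "$(awk '/^Hugepagesize:/ {print $2}' /proc/meminfo)"
```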

2.15 Resource Limits

Edit /etc/security/limits.conf (`# vim /etc/security/limits.conf`):
```
# oracle-database-preinstall-19c
oracle soft nofile 1024
oracle hard nofile 65536
oracle soft nproc 16384
oracle hard nproc 16384
oracle soft stack 10240
oracle hard stack 32768
oracle hard memlock 134217728
oracle soft memlock 134217728
grid soft nofile 1024
grid hard nofile 65536
grid soft nproc 16384
grid hard nproc 16384
grid soft stack 10240
grid hard stack 32768
grid hard memlock 134217728
grid soft memlock 134217728
```
Note: memlock matters when HugePages is enabled, and its unit is KB.

On Linux 7, limits can also be placed in files under /etc/security/limits.d/; the oracle-database-preinstall-19c RPM, for example, writes its settings there. These files are read after /etc/security/limits.conf, so for the same user and item they generally take precedence. On this system the file looks like this:
```
[root@tqdb21: /etc/security/limits.d]# cat oracle-database-19c.conf
oracle soft nofile 1024
oracle hard nofile 65536
oracle soft nproc 16384
oracle hard nproc 16384
oracle soft stack 10240
oracle hard stack 32768
oracle hard memlock 134217728
oracle soft memlock 134217728
grid soft nofile 1024
grid hard nofile 65536
grid soft nproc 16384
grid hard nproc 16384
grid soft stack 10240
grid hard stack 32768
grid hard memlock 134217728
grid soft memlock 134217728
[root@tqdb21: /etc/security/limits.d]#
```

2.16 Create Directories

    # mkdir -p /u01/app/grid
    # mkdir -p /u01/app/oraInventory
    # mkdir -p /u01/app/19c/grid
    # mkdir -p /u01/app/oracle
    # mkdir -p /u01/app/oracle/product/19c/dbhome
    # chown -R grid:oinstall /u01
    # chown -R grid:oinstall /u01/app/oraInventory
    # chown -R oracle:oinstall /u01/app/oracle
    # chown -R oracle:oinstall /u01/app/oracle/product
    # chown -R oracle:oinstall /u01/app/oracle/product/19c/dbhome
    # chmod -R 775 /u01

2.17 Configure /etc/profile

Add the following to /etc/profile (`# vim /etc/profile`):

    if [ "$USER" = "oracle" ] || [ "$USER" = "grid" ]; then
      if [ "$SHELL" = "/bin/ksh" ]; then
        ulimit -p 16384
        ulimit -n 65536
      else
        ulimit -u 16384 -n 65536
      fi
      umask 022
    fi

2.18 GRID User Environment Variables

rac1 (node 1): log in as grid (`# su - grid`) and edit .bash_profile (`$ vim .bash_profile`):
```
export TMP=/tmp
export TMPDIR=$TMP
export ORACLE_SID=+ASM1
export ORACLE_BASE=/u01/app/grid
export ORACLE_HOME=/u01/app/19c/grid
export TNS_ADMIN=$ORACLE_HOME/network/admin
export NLS333=$ORACLE_HOME/ocommon/nls/admin/data
export NLS_LANG=american_america.AL32UTF8
export LIBPATH=$ORACLE_HOME/lib:$ORACLE_HOME/lib32
export LD_LIBRARY_PATH=$ORACLE_HOME/jdk/jre/lib:$ORACLE_HOME/network/lib:$ORACLE_HOME/rdbms/lib:$LD_LIBRARY_PATH
export LD_LIBRARY_PATH=$ORACLE_HOME/lib:$ORACLE_HOME/lib32:$LD_LIBRARY_PATH
export CLASS_PATH=$ORACLE_HOME/jre:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib:$ORACLE_HOME/network/jlib
export PATH=/usr/local/bin:/usr/bin:/bin:/usr/sbin:/usr/ucb:/usr/bin/X11:/sbin:.
export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$HOME/bin:$PATH
export PATH=/usr/bin/xdpyinfo:$PATH
umask 022
if [ "$USER" = "grid" ]; then
  if [ "$SHELL" = "/bin/ksh" ]; then
    ulimit -p 16384
    ulimit -n 65536
  else
    ulimit -u 16384 -n 65536
  fi
fi
##
export NLS_LANG="american_america.AL32UTF8"
export LANG="en_US.UTF-8"
export NLS_DATE_FORMAT="YYYY-MM-DD HH24:MI:SS"
export NLS_TIMESTAMP_FORMAT="YYYY-MM-DD HH24:MI:SS.FF9"
alias impdp='rlwrap impdp'
alias sqlplus='rlwrap sqlplus'
alias asmcmd='rlwrap asmcmd'
```
rac2 (node 2): the same as node 1 except ORACLE_SID=+ASM2 (`# su - grid`, `$ vim .bash_profile`):
```
export TMP=/tmp
export TMPDIR=$TMP
export ORACLE_SID=+ASM2
export ORACLE_BASE=/u01/app/grid
export ORACLE_HOME=/u01/app/19c/grid
export TNS_ADMIN=$ORACLE_HOME/network/admin
export NLS333=$ORACLE_HOME/ocommon/nls/admin/data
export NLS_LANG=american_america.AL32UTF8
export LIBPATH=$ORACLE_HOME/lib:$ORACLE_HOME/lib32
export LD_LIBRARY_PATH=$ORACLE_HOME/jdk/jre/lib:$ORACLE_HOME/network/lib:$ORACLE_HOME/rdbms/lib:$LD_LIBRARY_PATH
export LD_LIBRARY_PATH=$ORACLE_HOME/lib:$ORACLE_HOME/lib32:$LD_LIBRARY_PATH
export CLASS_PATH=$ORACLE_HOME/jre:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib:$ORACLE_HOME/network/jlib
export PATH=/usr/local/bin:/usr/bin:/bin:/usr/sbin:/usr/ucb:/usr/bin/X11:/sbin:.
export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$HOME/bin:$PATH
export PATH=/usr/bin/xdpyinfo:$PATH
umask 022
if [ "$USER" = "grid" ]; then
  if [ "$SHELL" = "/bin/ksh" ]; then
    ulimit -p 16384
    ulimit -n 65536
  else
    ulimit -u 16384 -n 65536
  fi
fi
##
export NLS_LANG="american_america.AL32UTF8"
export LANG="en_US.UTF-8"
export NLS_DATE_FORMAT="YYYY-MM-DD HH24:MI:SS"
export NLS_TIMESTAMP_FORMAT="YYYY-MM-DD HH24:MI:SS.FF9"
alias impdp='rlwrap impdp'
alias sqlplus='rlwrap sqlplus'
alias asmcmd='rlwrap asmcmd'
```

2.19 ORACLE User Environment Variables

rac1 (node 1): log in as oracle (`# su - oracle`) and edit .bash_profile (`$ vim .bash_profile`):
```
export LANG=en_US
export TMP=/tmp
export TMPDIR=$TMP
export ORACLE_SID=tqdb1
export DB_UNIQUE_NAME=tqdb
export ORACLE_UNQNAME=tqdb
export ORACLE_BASE=/u01/app/oracle
export ORACLE_HOME=/u01/app/oracle/product/19c/dbhome
export TNS_ADMIN=$ORACLE_HOME/network/admin
export NLS333=$ORACLE_HOME/ocommon/nls/admin/data
export NLS_LANG=american_america.AL32UTF8
export LIBPATH=$ORACLE_HOME/lib:$ORACLE_HOME/lib32
export LD_LIBRARY_PATH=$ORACLE_HOME/jdk/jre/lib:$ORACLE_HOME/network/lib:$ORACLE_HOME/rdbms/lib:$LD_LIBRARY_PATH
export LD_LIBRARY_PATH=$ORACLE_HOME/lib:$ORACLE_HOME/lib32:$LD_LIBRARY_PATH
export CLASS_PATH=$ORACLE_HOME/jre:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib:$ORACLE_HOME/network/jlib
export PATH=/usr/local/bin:/usr/bin:/bin:/usr/sbin:/usr/ucb:/usr/bin/X11:/sbin:.
export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$HOME/bin:$PATH
export PATH=/usr/bin/xdpyinfo:$PATH
umask 022
if [ "$USER" = "oracle" ]; then
  if [ "$SHELL" = "/bin/ksh" ]; then
    ulimit -p 16384
    ulimit -n 65536
  else
    ulimit -u 16384 -n 65536
  fi
fi
##
export NLS_LANG="american_america.AL32UTF8"
export LANG="en_US.UTF-8"
export NLS_DATE_FORMAT="YYYY-MM-DD HH24:MI:SS"
export NLS_TIMESTAMP_FORMAT="YYYY-MM-DD HH24:MI:SS.FF9"
alias impdp='rlwrap impdp'
alias sqlplus='rlwrap sqlplus'
alias rman='rlwrap rman'
```
rac2 (node 2): the same as node 1 except ORACLE_SID=tqdb2 (`# su - oracle`, `$ vim .bash_profile`):
```
export LANG=en_US
export TMP=/tmp
export TMPDIR=$TMP
export ORACLE_SID=tqdb2
export DB_UNIQUE_NAME=tqdb
export ORACLE_UNQNAME=tqdb
export ORACLE_BASE=/u01/app/oracle
export ORACLE_HOME=/u01/app/oracle/product/19c/dbhome
export TNS_ADMIN=$ORACLE_HOME/network/admin
export NLS333=$ORACLE_HOME/ocommon/nls/admin/data
export NLS_LANG=american_america.AL32UTF8
export LIBPATH=$ORACLE_HOME/lib:$ORACLE_HOME/lib32
export LD_LIBRARY_PATH=$ORACLE_HOME/jdk/jre/lib:$ORACLE_HOME/network/lib:$ORACLE_HOME/rdbms/lib:$LD_LIBRARY_PATH
export LD_LIBRARY_PATH=$ORACLE_HOME/lib:$ORACLE_HOME/lib32:$LD_LIBRARY_PATH
export CLASS_PATH=$ORACLE_HOME/jre:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib:$ORACLE_HOME/network/jlib
export PATH=/usr/local/bin:/usr/bin:/bin:/usr/sbin:/usr/ucb:/usr/bin/X11:/sbin:.
export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$HOME/bin:$PATH
export PATH=/usr/bin/xdpyinfo:$PATH
umask 022
if [ "$USER" = "oracle" ]; then
  if [ "$SHELL" = "/bin/ksh" ]; then
    ulimit -p 16384
    ulimit -n 65536
  else
    ulimit -u 16384 -n 65536
  fi
fi
##
export NLS_LANG="american_america.AL32UTF8"
export LANG="en_US.UTF-8"
export NLS_DATE_FORMAT="YYYY-MM-DD HH24:MI:SS"
export NLS_TIMESTAMP_FORMAT="YYYY-MM-DD HH24:MI:SS.FF9"
alias impdp='rlwrap impdp'
alias sqlplus='rlwrap sqlplus'
alias rman='rlwrap rman'
```

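2.20 Add crsctl to the root User's PATH

Add the following to /etc/profile (`# vim /etc/profile`). /etc/profile is read by every login shell, not just root's. The oracle and grid users still get their own ORACLE_BASE and ORACLE_HOME, because their .bash_profile is read afterwards.
```
# Let root run crsctl without the full path
export PATH=/u01/app/19c/grid/bin:$PATH
export ORACLE_BASE=/u01/app/grid
export ORACLE_HOME=/u01/app/19c/grid
# GUI-related
export PATH=/usr/bin/xdpyinfo:$PATH
# history: show timestamps
export HISTSIZE=4096
export HISTTIMEFORMAT="%F %T `whoami` "
```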

2.21 Configure SSH User Equivalence Manually (alternatively, click "SSH connectivity" in the graphical GRID/DB installer)

2.21.1 SSH Equivalence for the oracle User

Commands (run as the oracle user on both nodes, in the order below):

    -- 1. On tqdb21 (node 1):
    root# su - oracle
    oracle$ mkdir ~/.ssh
    oracle$ chmod 700 ~/.ssh/
    oracle$ ssh-keygen -t rsa
    oracle$ ssh-keygen -t dsa
    -- 2. On tqdb22 (node 2):
    root# su - oracle
    oracle$ mkdir ~/.ssh
    oracle$ chmod 700 ~/.ssh/
    oracle$ ssh-keygen -t rsa
    oracle$ ssh-keygen -t dsa
    -- 3. On tqdb21 (node 1):
    oracle$ cd ~/.ssh
    oracle$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
    oracle$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys
    oracle$ ssh oracle@tqdb22 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
    oracle$ ssh oracle@tqdb22 cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys
    oracle$ scp /home/oracle/.ssh/authorized_keys oracle@tqdb22:~/.ssh/authorized_keys
    -- 4. On tqdb22 (node 2): inspect the authorized_keys file copied (scp) from tqdb21
    oracle$ cd ~/.ssh
    oracle$ ll
    oracle$ cat authorized_keys

Then test the connections on each node. When the commands below are run a second time, there must be no password or host-key prompt.

    -- 5. On tqdb21 (node 1):
    -- First run: answer `yes` to each host-key prompt
    oracle$ ssh tqdb21 date
    oracle$ ssh tqdb22 date
    oracle$ ssh tqdb21-priv date
    oracle$ ssh tqdb22-priv date
    -- Second run: the results come back without any prompt, which confirms SSH equivalence is configured
    oracle$ ssh tqdb21 date
    oracle$ ssh tqdb22 date
    oracle$ ssh tqdb21-priv date
    oracle$ ssh tqdb22-priv date
    oracle$ date; ssh tqdb22 date
    oracle$ date; ssh tqdb22-priv date
    -- 6. On tqdb22 (node 2):
    -- First run: answer `yes` to each host-key prompt
    oracle$ ssh tqdb21 date
    oracle$ ssh tqdb22 date
    oracle$ ssh tqdb21-priv date
    oracle$ ssh tqdb22-priv date
    -- Second run: the results come back without any prompt, which confirms SSH equivalence is configured
    oracle$ ssh tqdb21 date
    oracle$ ssh tqdb22 date
    oracle$ ssh tqdb21-priv date
    oracle$ ssh tqdb22-priv date
    oracle$ date; ssh tqdb21 date
    oracle$ date; ssh tqdb21-priv date

Note: upstream OpenSSH 7.0 and later disable DSA (ssh-dss) keys by default. Depending on the OpenSSH build, only the RSA key may actually be used, and the RSA key alone is sufficient for user equivalence.

Execution log:

建立SSH等效性(两个节点在oracle用户下执行) 在 tqdb21 节点1 执行 ``` [root@tqdb21: ~]# su - oracle Last login: Tue Feb 11 23:23:44 CST 2020 on pts/0 [oracle@tqdb21: ~]$ la bash: la: command not found... [oracle@tqdb21: ~]$ l. . .. .bash_history .bash_logout .bash_profile .bashrc .cache .config .kshrc .mozilla .viminfo [oracle@tqdb21: ~]$ mkdir ~/.ssh [oracle@tqdb21: ~]$ chmod 700 ~/.ssh/ [oracle@tqdb21: ~]$ ssh-keygen -t rsa Generating public/private rsa key pair. Enter file in which to save the key (/home/oracle/.ssh/id_rsa): Enter passphrase (empty for no passphrase): Enter same passphrase again: Your identification has been saved in /home/oracle/.ssh/id_rsa. Your public key has been saved in /home/oracle/.ssh/id_rsa.pub. The key fingerprint is: SHA256:sKgc0lXiMqxIoB1c++ANDWQbG7um0Tbz+nM8PE1LpBc oracle@tqdb21 The key's randomart image is: +---[RSA 2048]----+ |...oO . | |oo.+ % | |..= X o | |oo * B o E | |+ + X + So . | | o B + . + | | + .o = . | | .. * o | | ...o o | +----[SHA256]-----+ [oracle@tqdb21: ~]$ ssh-keygen -t dsa Generating public/private dsa key pair. Enter file in which to save the key (/home/oracle/.ssh/id_dsa): Enter passphrase (empty for no passphrase): Enter same passphrase again: Your identification has been saved in /home/oracle/.ssh/id_dsa. Your public key has been saved in /home/oracle/.ssh/id_dsa.pub. The key fingerprint is: SHA256:5TXoyCtm2RREUNtssej/nesLCv3DCgYr572hXJ48vzQ oracle@tqdb21 The key's randomart image is: +---[DSA 1024]----+ | .++ . | | . = + | | + B o | | o B . . | | . S o | | * + | | . B O E.. | | B O.* =oo . | | + *+=ooo*o | +----[SHA256]-----+ [oracle@tqdb21: ~]$ [oracle@tqdb21: ~]$ cd .ssh/ [oracle@tqdb21: ~/.ssh]$ ls id_dsa id_dsa.pub id_rsa id_rsa.pub [oracle@tqdb21: ~/.ssh]$ ll total 16 -rw-------. 1 oracle oinstall 668 Feb 11 23:31 id_dsa -rw-r--r--. 1 oracle oinstall 603 Feb 11 23:31 id_dsa.pub -rw-------. 1 oracle oinstall 1679 Feb 11 23:30 id_rsa -rw-r--r--. 
1 oracle oinstall 395 Feb 11 23:30 id_rsa.pub [oracle@tqdb21: ~/.ssh]$ ``` 在 tqdb22 节点2 执行 ``` [root@tqdb22: ~]# su - oracle Last login: Tue Feb 11 23:25:32 CST 2020 on pts/0 [oracle@tqdb22: ~]$ l. . .. .bash_history .bash_logout .bash_profile .bashrc .cache .config .kshrc .mozilla .viminfo [oracle@tqdb22: ~]$ mkdir ~/.ssh [oracle@tqdb22: ~]$ chmod 700 ~/.ssh/ [oracle@tqdb22: ~]$ ssh-keygen -t rsa Generating public/private rsa key pair. Enter file in which to save the key (/home/oracle/.ssh/id_rsa): Enter passphrase (empty for no passphrase): Enter same passphrase again: Your identification has been saved in /home/oracle/.ssh/id_rsa. Your public key has been saved in /home/oracle/.ssh/id_rsa.pub. The key fingerprint is: SHA256:TwfmNqXPaYMjwC4dvIy4Qf+R5m4l57iee8qlOvxmeJA oracle@tqdb22 The key's randomart image is: +---[RSA 2048]----+ | | | | | o . | | o o + | | . .= S * . | | . oE=.=o+ * . | | o.+oO*o + * | | o+**=o. o . | | . .@&= | +----[SHA256]-----+ [oracle@tqdb22: ~]$ ssh-keygen -t dsa Generating public/private dsa key pair. Enter file in which to save the key (/home/oracle/.ssh/id_dsa): Enter passphrase (empty for no passphrase): Enter same passphrase again: Your identification has been saved in /home/oracle/.ssh/id_dsa. Your public key has been saved in /home/oracle/.ssh/id_dsa.pub. The key fingerprint is: SHA256:JS6Z/5ztai4ZGn6jeprpJ7LO0GJMwzAPDduW92t7tY4 oracle@tqdb22 The key's randomart image is: +---[DSA 1024]----+ |. | | = . | |= = . . . | |o= . . + o | | +. = S | |o.. .+. . | |oo. .oo.+ . | |.+. .+=.*+oo | | .++**o+E*Boo | +----[SHA256]-----+ [oracle@tqdb22: ~]$ ll .ssh/ total 16 -rw-------. 1 oracle oinstall 668 Feb 11 23:33 id_dsa -rw-r--r--. 1 oracle oinstall 603 Feb 11 23:33 id_dsa.pub -rw-------. 1 oracle oinstall 1679 Feb 11 23:33 id_rsa -rw-r--r--. 
1 oracle oinstall 395 Feb 11 23:33 id_rsa.pub [oracle@tqdb22: ~]$ ``` 在 tqdb21 节点1 执行: ``` [oracle@tqdb21: ~/.ssh]$ cat id_dsa.pub ssh-dss AAAAB3NzaC1kc3MAAACBAKUOvUgNh2W91m9nrftiov4cRsP8sdiz2Tnd4+6t0WCBgu+hcppe/RD2zv/Dn3Q3tmaGE7vkCzdMpvCuFr0dOX2bQZtu+e98itdn0s6iM1Wrbri1n6a9yNLbvNVXbW+WRpHMImePDS35C5zzQJFc0DXmxeZ0UQxsqR3ZE9NpFJ9/AAAAFQC1MRowodOePZVcMSunpKDL+SndowAAAIAMBGObmCEZZnCFfQ0NtT/YBNgdyBohULgUa+jUCWPJLXis1wNJjadoWVEW7+KKHPUdx7NfS4kmDKYQL4xkXLUBzRvQVYncskpWtxnZvNiw0g6iVrLc5+DCr2AOqz1rpaGQmsfunFOXAQ0OHgSf6bUzxdHcTK8sEL0dtBi1yNM+AgAAAIAN/3QY7mk2D6/dmpo9Mq75Mv+vDM4ln/9pApqJSgE/UEKre1v6VI73xIawV3eaetAdgbGDDhyEJYb8k0LI6b+Ptox0mtKFi92OmIIiDh07b/CmDucy8K7XM/NRjS4z5C4kuuhNODNK7XLZGUxYi0Pa78zVHaCaWTRskNBUqFBNAQ== oracle@tqdb21 [oracle@tqdb21: ~/.ssh]$ cat id_rsa.pub ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDJzgjh6y8oKYHf7ebuWmxjESIfoquJ9r+xdr3SCzS76pIjDIBq0+Awh2GafvlwDFd/FfmTDxcz6q1blp73NEDQq6RZA8nudSvER/qY7wrUW41RnrHzt2X7WrmAZ8KBWqvAjYD925jxfODwVROXzj7kzwWoR1jzsZvJiARDaWNKuiQSnMlkaluE3BaSNnacvlNGVkjNi6rgybTGDcalojiYvBuIIgOP7t5N4vxYbT1oACuGjs+vmoKeFnPJmbvZeWStTOsJMkVqz04WMquoXgULHtTBJocRf4mCLF8wAMU0me6K1ywxx4FZKP57Bqq1N70EF+t+XtXlIf3R4zq5AJsH oracle@tqdb21 [oracle@tqdb21: ~/.ssh]$ [oracle@tqdb21: ~/.ssh]$ [oracle@tqdb21: ~/.ssh]$ cd [oracle@tqdb21: ~]$ [oracle@tqdb21: ~]$ [oracle@tqdb21: ~]$ cd - /home/oracle/.ssh [oracle@tqdb21: ~/.ssh]$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys [oracle@tqdb21: ~/.ssh]$ ll total 20 -rw-r--r--. 1 oracle oinstall 395 Feb 11 23:38 authorized_keys -rw-------. 1 oracle oinstall 668 Feb 11 23:31 id_dsa -rw-r--r--. 1 oracle oinstall 603 Feb 11 23:31 id_dsa.pub -rw-------. 1 oracle oinstall 1679 Feb 11 23:30 id_rsa -rw-r--r--. 
1 oracle oinstall 395 Feb 11 23:30 id_rsa.pub [oracle@tqdb21: ~/.ssh]$ less authorized_keys ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDJzgjh6y8oKYHf7ebuWmxjESIfoquJ9r+xdr3SCzS76pIjDIBq0+Awh2GafvlwDFd/FfmTDxcz6q1blp73NEDQq6RZA8nudSvER/qY7wrUW41RnrHzt2X7WrmAZ8KBWqvAjYD925jxfODwVROXzj7kzwWoR1jzsZvJiARDaWNKuiQSnMlkaluE3BaSNnacvlNGVkjNi6rgybTGDcalojiYvBuIIgOP7t5N4vxYbT1oACuGjs+vmoKeFnPJmbvZeWStTOsJMkVqz04WMquoXgULHtTBJocRf4mCLF8wAMU0me6K1ywxx4FZKP57Bqq1N70EF+t+XtXlIf3R4zq5AJsH oracle@tqdb21 [oracle@tqdb21: ~/.ssh]$ [oracle@tqdb21: ~/.ssh]$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys [oracle@tqdb21: ~/.ssh]$ [oracle@tqdb21: ~/.ssh]$ cat authorized_keys ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDJzgjh6y8oKYHf7ebuWmxjESIfoquJ9r+xdr3SCzS76pIjDIBq0+Awh2GafvlwDFd/FfmTDxcz6q1blp73NEDQq6RZA8nudSvER/qY7wrUW41RnrHzt2X7WrmAZ8KBWqvAjYD925jxfODwVROXzj7kzwWoR1jzsZvJiARDaWNKuiQSnMlkaluE3BaSNnacvlNGVkjNi6rgybTGDcalojiYvBuIIgOP7t5N4vxYbT1oACuGjs+vmoKeFnPJmbvZeWStTOsJMkVqz04WMquoXgULHtTBJocRf4mCLF8wAMU0me6K1ywxx4FZKP57Bqq1N70EF+t+XtXlIf3R4zq5AJsH oracle@tqdb21 ssh-dss AAAAB3NzaC1kc3MAAACBAKUOvUgNh2W91m9nrftiov4cRsP8sdiz2Tnd4+6t0WCBgu+hcppe/RD2zv/Dn3Q3tmaGE7vkCzdMpvCuFr0dOX2bQZtu+e98itdn0s6iM1Wrbri1n6a9yNLbvNVXbW+WRpHMImePDS35C5zzQJFc0DXmxeZ0UQxsqR3ZE9NpFJ9/AAAAFQC1MRowodOePZVcMSunpKDL+SndowAAAIAMBGObmCEZZnCFfQ0NtT/YBNgdyBohULgUa+jUCWPJLXis1wNJjadoWVEW7+KKHPUdx7NfS4kmDKYQL4xkXLUBzRvQVYncskpWtxnZvNiw0g6iVrLc5+DCr2AOqz1rpaGQmsfunFOXAQ0OHgSf6bUzxdHcTK8sEL0dtBi1yNM+AgAAAIAN/3QY7mk2D6/dmpo9Mq75Mv+vDM4ln/9pApqJSgE/UEKre1v6VI73xIawV3eaetAdgbGDDhyEJYb8k0LI6b+Ptox0mtKFi92OmIIiDh07b/CmDucy8K7XM/NRjS4z5C4kuuhNODNK7XLZGUxYi0Pa78zVHaCaWTRskNBUqFBNAQ== oracle@tqdb21 [oracle@tqdb21: ~/.ssh]$ [oracle@tqdb21: ~/.ssh]$ [oracle@tqdb21: ~/.ssh]$ ssh oracle@tqdb22 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys The authenticity of host 'tqdb22 (192.168.6.22)' can't be established. ECDSA key fingerprint is SHA256:QT8z0WN0dmX3S0jnMcLe/MeraabCFvwlYKTmX/kKJ+o. 
ECDSA key fingerprint is MD5:de:f8:90:99:5d:f1:05:5c:65:4b:fb:8b:0f:bc:63:7d. Are you sure you want to continue connecting (yes/no)? yes Warning: Permanently added 'tqdb22,192.168.6.22' (ECDSA) to the list of known hosts. oracle@tqdb22's password: Permission denied, please try again. oracle@tqdb22's password: [oracle@tqdb21: ~/.ssh]$ cat authorized_keys ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDJzgjh6y8oKYHf7ebuWmxjESIfoquJ9r+xdr3SCzS76pIjDIBq0+Awh2GafvlwDFd/FfmTDxcz6q1blp73NEDQq6RZA8nudSvER/qY7wrUW41RnrHzt2X7WrmAZ8KBWqvAjYD925jxfODwVROXzj7kzwWoR1jzsZvJiARDaWNKuiQSnMlkaluE3BaSNnacvlNGVkjNi6rgybTGDcalojiYvBuIIgOP7t5N4vxYbT1oACuGjs+vmoKeFnPJmbvZeWStTOsJMkVqz04WMquoXgULHtTBJocRf4mCLF8wAMU0me6K1ywxx4FZKP57Bqq1N70EF+t+XtXlIf3R4zq5AJsH oracle@tqdb21 ssh-dss AAAAB3NzaC1kc3MAAACBAKUOvUgNh2W91m9nrftiov4cRsP8sdiz2Tnd4+6t0WCBgu+hcppe/RD2zv/Dn3Q3tmaGE7vkCzdMpvCuFr0dOX2bQZtu+e98itdn0s6iM1Wrbri1n6a9yNLbvNVXbW+WRpHMImePDS35C5zzQJFc0DXmxeZ0UQxsqR3ZE9NpFJ9/AAAAFQC1MRowodOePZVcMSunpKDL+SndowAAAIAMBGObmCEZZnCFfQ0NtT/YBNgdyBohULgUa+jUCWPJLXis1wNJjadoWVEW7+KKHPUdx7NfS4kmDKYQL4xkXLUBzRvQVYncskpWtxnZvNiw0g6iVrLc5+DCr2AOqz1rpaGQmsfunFOXAQ0OHgSf6bUzxdHcTK8sEL0dtBi1yNM+AgAAAIAN/3QY7mk2D6/dmpo9Mq75Mv+vDM4ln/9pApqJSgE/UEKre1v6VI73xIawV3eaetAdgbGDDhyEJYb8k0LI6b+Ptox0mtKFi92OmIIiDh07b/CmDucy8K7XM/NRjS4z5C4kuuhNODNK7XLZGUxYi0Pa78zVHaCaWTRskNBUqFBNAQ== oracle@tqdb21 ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDNLZOEguCk/87HUUtYnayz8klrehAk7bgK87F6zjdp6roaAXQDFiKKz5se2JmAoTKccZ2WvmYZvyRfhpyNJWV7ZdgsPwrk4iW/SpLDrH/m/5TaD3406ghp+rpziMdwpiHXeA6td00ZLA+ZL3HcIzG975K1PVurdZFBMj0uNPL3dJNwTKcdzEiXULgCLNSzbSvgmD8WZEarb9UfqS4uzq0jGct52uOELxHHwvlhAqCUDMma0wOcTLd/4eqCQUcqDCIjGpgiN7c2clLSJqLPGmiGx8S6rvg02AHxvxPm+2D3MvNpOwOkuJPbB9SQoyPyGroilslu+if7awbJYd6INpXP oracle@tqdb22 [oracle@tqdb21: ~/.ssh]$ [oracle@tqdb21: ~/.ssh]$ ssh oracle@tqdb22 cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys oracle@tqdb22's password: [oracle@tqdb21: ~/.ssh]$ [oracle@tqdb21: ~/.ssh]$ cat authorized_keys ssh-rsa 
AAAAB3NzaC1yc2EAAAADAQABAAABAQDJzgjh6y8oKYHf7ebuWmxjESIfoquJ9r+xdr3SCzS76pIjDIBq0+Awh2GafvlwDFd/FfmTDxcz6q1blp73NEDQq6RZA8nudSvER/qY7wrUW41RnrHzt2X7WrmAZ8KBWqvAjYD925jxfODwVROXzj7kzwWoR1jzsZvJiARDaWNKuiQSnMlkaluE3BaSNnacvlNGVkjNi6rgybTGDcalojiYvBuIIgOP7t5N4vxYbT1oACuGjs+vmoKeFnPJmbvZeWStTOsJMkVqz04WMquoXgULHtTBJocRf4mCLF8wAMU0me6K1ywxx4FZKP57Bqq1N70EF+t+XtXlIf3R4zq5AJsH oracle@tqdb21 ssh-dss AAAAB3NzaC1kc3MAAACBAKUOvUgNh2W91m9nrftiov4cRsP8sdiz2Tnd4+6t0WCBgu+hcppe/RD2zv/Dn3Q3tmaGE7vkCzdMpvCuFr0dOX2bQZtu+e98itdn0s6iM1Wrbri1n6a9yNLbvNVXbW+WRpHMImePDS35C5zzQJFc0DXmxeZ0UQxsqR3ZE9NpFJ9/AAAAFQC1MRowodOePZVcMSunpKDL+SndowAAAIAMBGObmCEZZnCFfQ0NtT/YBNgdyBohULgUa+jUCWPJLXis1wNJjadoWVEW7+KKHPUdx7NfS4kmDKYQL4xkXLUBzRvQVYncskpWtxnZvNiw0g6iVrLc5+DCr2AOqz1rpaGQmsfunFOXAQ0OHgSf6bUzxdHcTK8sEL0dtBi1yNM+AgAAAIAN/3QY7mk2D6/dmpo9Mq75Mv+vDM4ln/9pApqJSgE/UEKre1v6VI73xIawV3eaetAdgbGDDhyEJYb8k0LI6b+Ptox0mtKFi92OmIIiDh07b/CmDucy8K7XM/NRjS4z5C4kuuhNODNK7XLZGUxYi0Pa78zVHaCaWTRskNBUqFBNAQ== oracle@tqdb21 ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDNLZOEguCk/87HUUtYnayz8klrehAk7bgK87F6zjdp6roaAXQDFiKKz5se2JmAoTKccZ2WvmYZvyRfhpyNJWV7ZdgsPwrk4iW/SpLDrH/m/5TaD3406ghp+rpziMdwpiHXeA6td00ZLA+ZL3HcIzG975K1PVurdZFBMj0uNPL3dJNwTKcdzEiXULgCLNSzbSvgmD8WZEarb9UfqS4uzq0jGct52uOELxHHwvlhAqCUDMma0wOcTLd/4eqCQUcqDCIjGpgiN7c2clLSJqLPGmiGx8S6rvg02AHxvxPm+2D3MvNpOwOkuJPbB9SQoyPyGroilslu+if7awbJYd6INpXP oracle@tqdb22 ssh-dss AAAAB3NzaC1kc3MAAACBAJ5Uuh7XD2mJhJUeIP3r46augiN0wc3Qksou9DA+v2QXaoUzBFrdeIdQ7wSYuLkp/1rZm6imS8PlFBd8uVPudybmh+jNwVtk3d18eYgJ8lunY115/7yhsDvS7yt+cYSIVFqoiGQUWPBfXM/oGUnT+RzPqMdrEz0K7mrWpMJffFh5AAAAFQCd/xbvKCr5cYNWwqF/WUQ0mQ0U6QAAAIBwBDpTCszu1WFeYzX1o2WVSEtnnaIX+BkeHELXa90Co1F2EPTNqoA1KDoCalw0dPKyyQYeG4SDXQ7AhSSAuvIc+xherUciFDjtNYW+uVGNot+++1zMVwKaj5T0EWmoNsw60ALKeLbWniBKKahwwbRKsUL7A49D0iaqRDX6d2X2IwAAAIBztUTFji8KD/0j2N9D4pa+opeKjz571i88Iy/R9JpN8XRz1XBxP/dkfPIOTXebaY7vFSeHb0HSP2Fd70yFhqIm14Kn0A2Uf7XnSjTRvDTub51XLKI2cJKi16EwgcMOnFFJBD+A9HfYlXtVGBl+uag07sEenLW4F2FWK57TkrDRcQ== oracle@tqdb22 
[oracle@tqdb21: ~/.ssh]$
[oracle@tqdb21: ~/.ssh]$
[oracle@tqdb21: ~/.ssh]$
[oracle@tqdb21: ~/.ssh]$ scp /home/oracle/.ssh/authorized_keys oracle@tqdb22:~/.ssh/authorized_keys
oracle@tqdb22's password:
authorized_keys 100% 1996 2.5MB/s 00:00
[oracle@tqdb21: ~/.ssh]$
```

On node 2 (tqdb22), run the following to inspect `authorized_keys`:

```
[oracle@tqdb22: ~/.ssh]$ ll
total 20
-rw-r--r--. 1 oracle oinstall 1996 Feb 11 23:46 authorized_keys
-rw-------. 1 oracle oinstall 668 Feb 11 23:33 id_dsa
-rw-r--r--. 1 oracle oinstall 603 Feb 11 23:33 id_dsa.pub
-rw-------. 1 oracle oinstall 1679 Feb 11 23:33 id_rsa
-rw-r--r--. 1 oracle oinstall 395 Feb 11 23:33 id_rsa.pub
[oracle@tqdb22: ~/.ssh]$ cat authorized_keys
ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDJzgjh6y8oKYHf7ebuWmxjESIfoquJ9r+xdr3SCzS76pIjDIBq0+Awh2GafvlwDFd/FfmTDxcz6q1blp73NEDQq6RZA8nudSvER/qY7wrUW41RnrHzt2X7WrmAZ8KBWqvAjYD925jxfODwVROXzj7kzwWoR1jzsZvJiARDaWNKuiQSnMlkaluE3BaSNnacvlNGVkjNi6rgybTGDcalojiYvBuIIgOP7t5N4vxYbT1oACuGjs+vmoKeFnPJmbvZeWStTOsJMkVqz04WMquoXgULHtTBJocRf4mCLF8wAMU0me6K1ywxx4FZKP57Bqq1N70EF+t+XtXlIf3R4zq5AJsH oracle@tqdb21
ssh-dss AAAAB3NzaC1kc3MAAACBAKUOvUgNh2W91m9nrftiov4cRsP8sdiz2Tnd4+6t0WCBgu+hcppe/RD2zv/Dn3Q3tmaGE7vkCzdMpvCuFr0dOX2bQZtu+e98itdn0s6iM1Wrbri1n6a9yNLbvNVXbW+WRpHMImePDS35C5zzQJFc0DXmxeZ0UQxsqR3ZE9NpFJ9/AAAAFQC1MRowodOePZVcMSunpKDL+SndowAAAIAMBGObmCEZZnCFfQ0NtT/YBNgdyBohULgUa+jUCWPJLXis1wNJjadoWVEW7+KKHPUdx7NfS4kmDKYQL4xkXLUBzRvQVYncskpWtxnZvNiw0g6iVrLc5+DCr2AOqz1rpaGQmsfunFOXAQ0OHgSf6bUzxdHcTK8sEL0dtBi1yNM+AgAAAIAN/3QY7mk2D6/dmpo9Mq75Mv+vDM4ln/9pApqJSgE/UEKre1v6VI73xIawV3eaetAdgbGDDhyEJYb8k0LI6b+Ptox0mtKFi92OmIIiDh07b/CmDucy8K7XM/NRjS4z5C4kuuhNODNK7XLZGUxYi0Pa78zVHaCaWTRskNBUqFBNAQ== oracle@tqdb21
ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDNLZOEguCk/87HUUtYnayz8klrehAk7bgK87F6zjdp6roaAXQDFiKKz5se2JmAoTKccZ2WvmYZvyRfhpyNJWV7ZdgsPwrk4iW/SpLDrH/m/5TaD3406ghp+rpziMdwpiHXeA6td00ZLA+ZL3HcIzG975K1PVurdZFBMj0uNPL3dJNwTKcdzEiXULgCLNSzbSvgmD8WZEarb9UfqS4uzq0jGct52uOELxHHwvlhAqCUDMma0wOcTLd/4eqCQUcqDCIjGpgiN7c2clLSJqLPGmiGx8S6rvg02AHxvxPm+2D3MvNpOwOkuJPbB9SQoyPyGroilslu+if7awbJYd6INpXP oracle@tqdb22
ssh-dss AAAAB3NzaC1kc3MAAACBAJ5Uuh7XD2mJhJUeIP3r46augiN0wc3Qksou9DA+v2QXaoUzBFrdeIdQ7wSYuLkp/1rZm6imS8PlFBd8uVPudybmh+jNwVtk3d18eYgJ8lunY115/7yhsDvS7yt+cYSIVFqoiGQUWPBfXM/oGUnT+RzPqMdrEz0K7mrWpMJffFh5AAAAFQCd/xbvKCr5cYNWwqF/WUQ0mQ0U6QAAAIBwBDpTCszu1WFeYzX1o2WVSEtnnaIX+BkeHELXa90Co1F2EPTNqoA1KDoCalw0dPKyyQYeG4SDXQ7AhSSAuvIc+xherUciFDjtNYW+uVGNot+++1zMVwKaj5T0EWmoNsw60ALKeLbWniBKKahwwbRKsUL7A49D0iaqRDX6d2X2IwAAAIBztUTFji8KD/0j2N9D4pa+opeKjz571i88Iy/R9JpN8XRz1XBxP/dkfPIOTXebaY7vFSeHb0HSP2Fd70yFhqIm14Kn0A2Uf7XnSjTRvDTub51XLKI2cJKi16EwgcMOnFFJBD+A9HfYlXtVGBl+uag07sEenLW4F2FWK57TkrDRcQ== oracle@tqdb22
[oracle@tqdb22: ~/.ssh]$
```

Test the connection on each node. When you run the following commands a second time, the system should no longer prompt for a password.

On node 1 (tqdb21), run:

```
[oracle@tqdb21: ~]$ ssh tqdb21 date
The authenticity of host 'tqdb21 (192.168.6.21)' can't be established.
ECDSA key fingerprint is SHA256:P/+G/d30l5VTPHHL9N6D+RpzvZ63gAIm+g9F6PeX80A.
ECDSA key fingerprint is MD5:20:22:52:f2:51:c2:bf:a3:80:29:0b:e3:3c:c7:07:49.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'tqdb21,192.168.6.21' (ECDSA) to the list of known hosts.
Tue Feb 11 23:48:12 CST 2020
[oracle@tqdb21: ~]$ ssh tqdb22 date
Tue Feb 11 23:48:22 CST 2020
[oracle@tqdb21: ~]$ ssh tqdb21-priv date
The authenticity of host 'tqdb21-priv (172.16.8.21)' can't be established.
ECDSA key fingerprint is SHA256:P/+G/d30l5VTPHHL9N6D+RpzvZ63gAIm+g9F6PeX80A.
ECDSA key fingerprint is MD5:20:22:52:f2:51:c2:bf:a3:80:29:0b:e3:3c:c7:07:49.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'tqdb21-priv,172.16.8.21' (ECDSA) to the list of known hosts.
Tue Feb 11 23:48:42 CST 2020
[oracle@tqdb21: ~]$ ssh tqdb22-priv date
The authenticity of host 'tqdb22-priv (172.16.8.22)' can't be established.
ECDSA key fingerprint is SHA256:QT8z0WN0dmX3S0jnMcLe/MeraabCFvwlYKTmX/kKJ+o.
ECDSA key fingerprint is MD5:de:f8:90:99:5d:f1:05:5c:65:4b:fb:8b:0f:bc:63:7d.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'tqdb22-priv,172.16.8.22' (ECDSA) to the list of known hosts.
Tue Feb 11 23:49:06 CST 2020
[oracle@tqdb21: ~]$
[oracle@tqdb21: ~]$ ssh tqdb21 date
Tue Feb 11 23:49:35 CST 2020
[oracle@tqdb21: ~]$ ssh tqdb22 date
Tue Feb 11 23:49:39 CST 2020
[oracle@tqdb21: ~]$ ssh tqdb21-priv date
Tue Feb 11 23:49:48 CST 2020
[oracle@tqdb21: ~]$ ssh tqdb22-priv date
Tue Feb 11 23:49:52 CST 2020
[oracle@tqdb21: ~]$ date; ssh tqdb22 date
Tue Feb 11 23:50:15 CST 2020
Tue Feb 11 23:50:15 CST 2020
[oracle@tqdb21: ~]$ date; ssh tqdb22-priv date
Tue Feb 11 23:50:40 CST 2020
Tue Feb 11 23:50:40 CST 2020
[oracle@tqdb21: ~]$
```

On node 2 (tqdb22), run:

```
[oracle@tqdb22: ~]$ ssh tqdb21 date
The authenticity of host 'tqdb21 (192.168.6.21)' can't be established.
ECDSA key fingerprint is SHA256:P/+G/d30l5VTPHHL9N6D+RpzvZ63gAIm+g9F6PeX80A.
ECDSA key fingerprint is MD5:20:22:52:f2:51:c2:bf:a3:80:29:0b:e3:3c:c7:07:49.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'tqdb21,192.168.6.21' (ECDSA) to the list of known hosts.
Tue Feb 11 23:53:12 CST 2020
[oracle@tqdb22: ~]$ ssh tqdb22 date
The authenticity of host 'tqdb22 (192.168.6.22)' can't be established.
ECDSA key fingerprint is SHA256:QT8z0WN0dmX3S0jnMcLe/MeraabCFvwlYKTmX/kKJ+o.
ECDSA key fingerprint is MD5:de:f8:90:99:5d:f1:05:5c:65:4b:fb:8b:0f:bc:63:7d.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'tqdb22,192.168.6.22' (ECDSA) to the list of known hosts.
Tue Feb 11 23:53:25 CST 2020
[oracle@tqdb22: ~]$ ssh tqdb21-priv date
The authenticity of host 'tqdb21-priv (172.16.8.21)' can't be established.
ECDSA key fingerprint is SHA256:P/+G/d30l5VTPHHL9N6D+RpzvZ63gAIm+g9F6PeX80A.
ECDSA key fingerprint is MD5:20:22:52:f2:51:c2:bf:a3:80:29:0b:e3:3c:c7:07:49.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'tqdb21-priv,172.16.8.21' (ECDSA) to the list of known hosts.
Tue Feb 11 23:53:35 CST 2020
[oracle@tqdb22: ~]$ ssh tqdb22-priv date
The authenticity of host 'tqdb22-priv (172.16.8.22)' can't be established.
ECDSA key fingerprint is SHA256:QT8z0WN0dmX3S0jnMcLe/MeraabCFvwlYKTmX/kKJ+o.
ECDSA key fingerprint is MD5:de:f8:90:99:5d:f1:05:5c:65:4b:fb:8b:0f:bc:63:7d.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'tqdb22-priv,172.16.8.22' (ECDSA) to the list of known hosts.
Tue Feb 11 23:53:42 CST 2020
[oracle@tqdb22: ~]$ ssh tqdb21 date
Tue Feb 11 23:53:59 CST 2020
[oracle@tqdb22: ~]$ ssh tqdb22 date
Tue Feb 11 23:54:03 CST 2020
[oracle@tqdb22: ~]$ ssh tqdb21-priv date
Tue Feb 11 23:54:08 CST 2020
[oracle@tqdb22: ~]$ ssh tqdb22-priv date
Tue Feb 11 23:54:12 CST 2020
[oracle@tqdb22: ~]$ date; ssh tqdb21 date
Tue Feb 11 23:54:29 CST 2020
Tue Feb 11 23:54:29 CST 2020
[oracle@tqdb22: ~]$ date; ssh tqdb21-priv date
Tue Feb 11 23:54:41 CST 2020
Tue Feb 11 23:54:41 CST 2020
[oracle@tqdb22: ~]$
```
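After the first interactive round has accepted every host key, the check can be repeated without any prompts. This is a minimal sketch that assumes bash and the host names from the planning table. The function name `check_ssh_equivalence` and the `SSH_CMD` override are hypothetical; the override exists only so that `ssh` can be stubbed during testing. The sketch uses the standard OpenSSH option `BatchMode=yes`, which makes `ssh` fail instead of asking for a password or a host-key confirmation. Any host that would still prompt therefore shows up as a `FAIL` line.

```shell
#!/usr/bin/env bash
# Sketch: non-interactive SSH user-equivalence check (assumes bash + OpenSSH).
# Host names come from the arguments; nothing is hard-coded.
# SSH_CMD is a hypothetical override hook (defaults to "ssh"), used for testing.

check_ssh_equivalence() {
    local ssh_cmd="${SSH_CMD:-ssh}"
    local failures=0 host out
    for host in "$@"; do
        # BatchMode=yes: fail instead of prompting for a password or host key.
        # ConnectTimeout=5: do not hang on an unreachable interconnect address.
        if out=$("$ssh_cmd" -o BatchMode=yes -o ConnectTimeout=5 "$host" date 2>/dev/null); then
            printf 'OK   %-12s %s\n' "$host" "$out"
        else
            printf 'FAIL %s\n' "$host"
            failures=$((failures + 1))
        fi
    done
    # Exit status = number of hosts that still need attention (0 = all good).
    return "$failures"
}
```

Run it as `oracle`, and again as `grid`, on each node. For example: `check_ssh_equivalence tqdb21 tqdb22 tqdb21-priv tqdb22-priv`. A host whose key has not yet been accepted also reports `FAIL`, because `BatchMode` refuses to answer the "yes/no" question.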

2.21.2 SSH user equivalence for the grid user

Commands (set up SSH user equivalence for the grid user; run as grid on both nodes):

-- 1. On node 1 (tqdb21):
root# su - grid
grid$ mkdir ~/.ssh
grid$ chmod 700 ~/.ssh/
grid$ ssh-keygen -t rsa
grid$ ssh-keygen -t dsa

-- 2. On node 2 (tqdb22):
root# su - grid
grid$ mkdir ~/.ssh
grid$ chmod 700 ~/.ssh/
grid$ ssh-keygen -t rsa
grid$ ssh-keygen -t dsa

-- 3. On node 1 (tqdb21):
grid$ cd ~/.ssh
grid$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
grid$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys
grid$ ssh grid@tqdb22 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
grid$ ssh grid@tqdb22 cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys
grid$ scp /home/grid/.ssh/authorized_keys grid@tqdb22:~/.ssh/authorized_keys

-- 4. On node 2 (tqdb22): inspect the `authorized_keys` file copied (scp) from tqdb21
grid$ cd ~/.ssh
grid$ ll
grid$ cat authorized_keys

Test the connection on each node. When you run the following commands a second time, the system should no longer prompt for a password.

-- 5. On node 1 (tqdb21):
-- First run: answer `yes` at each host-key prompt
grid$ ssh tqdb21 date
grid$ ssh tqdb22 date
grid$ ssh tqdb21-priv date
grid$ ssh tqdb22-priv date
-- Second run: there is no `yes` prompt and the results return directly, which confirms that SSH equivalence is configured.
grid$ ssh tqdb21 date
grid$ ssh tqdb22 date
grid$ ssh tqdb21-priv date
grid$ ssh tqdb22-priv date
grid$ date; ssh tqdb22 date
grid$ date; ssh tqdb22-priv date

-- 6. On node 2 (tqdb22):
-- First run: answer `yes` at each host-key prompt
grid$ ssh tqdb21 date
grid$ ssh tqdb22 date
grid$ ssh tqdb21-priv date
grid$ ssh tqdb22-priv date
-- Second run: there is no `yes` prompt and the results return directly, which confirms that SSH equivalence is configured.
grid$ ssh tqdb21 date
grid$ ssh tqdb22 date
grid$ ssh tqdb21-priv date
grid$ ssh tqdb22-priv date
grid$ date; ssh tqdb21 date
grid$ date; ssh tqdb21-priv date

Execution log:

Set up SSH equivalence (run as grid on both nodes)

-- 1. On node 1 (tqdb21):

```
[root@tqdb21: ~]# su - grid
Last login: Tue Feb 11 23:19:09 CST 2020 on pts/0
[grid@tqdb21: ~]$ l.
. .. .bash_history .bash_logout .bash_profile .bashrc .cache .config .kshrc .mozilla .viminfo
[grid@tqdb21: ~]$ mkdir ~/.ssh
[grid@tqdb21: ~]$ chmod 700 ~/.ssh/
[grid@tqdb21: ~]$ ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/home/grid/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/grid/.ssh/id_rsa.
Your public key has been saved in /home/grid/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:+eIXXZgW7lXkgp8YS/AWr4NEqsk5a7brYSc4q8aODWw grid@tqdb21
The key's randomart image is:
+---[RSA 2048]----+
| o . ..|
| o o.+ ..|
| . ..*+o..|
| . + o +=*oo |
| * S .+=oo |
|. . o .. o. |
|.E o B o .. |
|.+o * * .. |
|.o+...+... |
+----[SHA256]-----+
[grid@tqdb21: ~]$ ssh-keygen -t dsa
Generating public/private dsa key pair.
Enter file in which to save the key (/home/grid/.ssh/id_dsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/grid/.ssh/id_dsa.
Your public key has been saved in /home/grid/.ssh/id_dsa.pub.
The key fingerprint is:
SHA256:HIEiASc3W7qUMbqeHzLY22EyTtIRRg8ktp0zJ0ISax4 grid@tqdb21
The key's randomart image is:
+---[DSA 1024]----+
|**@.. .. |
|+O+@o . . |
|oEBB.o . |
|o+oo= . . |
|..o S |
|oo.. |
|o=*.o |
| ++*.. |
| o.. |
+----[SHA256]-----+
[grid@tqdb21: ~]$ ll .ssh/
total 16
-rw-------. 1 grid oinstall 668 Feb 12 00:37 id_dsa
-rw-r--r--. 1 grid oinstall 601 Feb 12 00:37 id_dsa.pub
-rw-------. 1 grid oinstall 1679 Feb 12 00:36 id_rsa
-rw-r--r--. 1 grid oinstall 393 Feb 12 00:36 id_rsa.pub
[grid@tqdb21: ~]$
```

-- 2. On node 2 (tqdb22):

```
[root@tqdb22: ~]# su - grid
Last login: Wed Feb 12 00:38:22 CST 2020 on pts/0
[grid@tqdb22: ~]$ l.
. .. .bash_history .bash_logout .bash_profile .bashrc .cache .config .kshrc .mozilla .viminfo
[grid@tqdb22: ~]$ mkdir ~/.ssh
[grid@tqdb22: ~]$ chmod 700 ~/.ssh/
[grid@tqdb22: ~]$ ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/home/grid/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/grid/.ssh/id_rsa.
Your public key has been saved in /home/grid/.ssh/id_rsa.pub.
The key fingerprint is:
SHA256:cUEInunmtLCI/lu1MEHLYMXHw3Jr02INyvrgKocA2NU grid@tqdb22
The key's randomart image is:
+---[RSA 2048]----+
| oo++. oo |
| . =+EX. . |
|.. ..+O B . |
|o . +.* = |
|. oo*.S |
|.. + *+.. |
|o.o +.o. |
|+ .... |
|.+oo. |
+----[SHA256]-----+
[grid@tqdb22: ~]$ ssh-keygen -t dsa
Generating public/private dsa key pair.
Enter file in which to save the key (/home/grid/.ssh/id_dsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/grid/.ssh/id_dsa.
Your public key has been saved in /home/grid/.ssh/id_dsa.pub.
The key fingerprint is:
SHA256:Pss+L5zH1Gbyr+c8u3i79/R3UK0SJDzb6jzO6aPPplw grid@tqdb22
The key's randomart image is:
+---[DSA 1024]----+
| . |
| + . |
| * .|
| . o o|
| S o . o |
| . + = o |
| .o*E= . ..|
| o=*O..o+.=|
| .B%Ooo*OB*|
+----[SHA256]-----+
[grid@tqdb22: ~]$ ll .ssh/
total 16
-rw-------. 1 grid oinstall 668 Feb 12 00:39 id_dsa
-rw-r--r--. 1 grid oinstall 601 Feb 12 00:39 id_dsa.pub
-rw-------. 1 grid oinstall 1675 Feb 12 00:39 id_rsa
-rw-r--r--. 1 grid oinstall 393 Feb 12 00:39 id_rsa.pub
[grid@tqdb22: ~]$
```

-- 3. On node 1 (tqdb21):

```
[grid@tqdb21: ~]$ cd ~/.ssh
[grid@tqdb21: ~/.ssh]$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
[grid@tqdb21: ~/.ssh]$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys
[grid@tqdb21: ~/.ssh]$ ssh grid@tqdb22 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
The authenticity of host 'tqdb22 (192.168.6.22)' can't be established.
ECDSA key fingerprint is SHA256:QT8z0WN0dmX3S0jnMcLe/MeraabCFvwlYKTmX/kKJ+o.
ECDSA key fingerprint is MD5:de:f8:90:99:5d:f1:05:5c:65:4b:fb:8b:0f:bc:63:7d.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'tqdb22,192.168.6.22' (ECDSA) to the list of known hosts.
grid@tqdb22's password:
[grid@tqdb21: ~/.ssh]$ ssh grid@tqdb22 cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys
grid@tqdb22's password:
[grid@tqdb21: ~/.ssh]$ cat authorized_keys
ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCyn//M6EKUXPcLk9lcennl3mN4CxFHupvFa6vR2eoGn40SNA1Yqtzhm+EI5I7O5JLT7zCUHu3aV8kIoXZdnivrw93VB8INeUIi9ZN98KDakq+nrORC3c9fZLnwCsNti+4Qy6vl2gy+fH0yR/vyhv8AVmIUe86jl8ql6TX5Xo2aS0YidD7okLumnKbCCK8HF58sPvr5j5fyMrq/w7xNhuq8jdrjOurVjcutu7u/xfSXCHvYnqbQDJbRj03bBOlhk63HYIgZYsB04/aMvngaq0lZ2XrXjBqfOq8OYBKLATgPyhoFDoD4IDymvrAm3Jsc3ZEy2wV3oOhiV895Kkvp4DtH grid@tqdb21
ssh-dss AAAAB3NzaC1kc3MAAACBAMmx0zcjtMOo8cIDWQamFqTKYN/ac0dHmRzpd2XeKTOUe7l2TeRiGilMIeyH+5i9CQ2bZzCPszd+KyJpf2BpKotsRKKlM+P09sDtDyteoTvCMyIGIvT24yQfriSFP3R5yKo0XJv0NEI6VX00wnsG5wzyaEad0FaSYdi/HlYar3MBAAAAFQCfQMu2F1kOm86SraJ2dL2m8ZZ8lwAAAIB9Tu2oCjL8wr2mDhBfaM7vg0bl6XEyYVm0b71uEzkklLKingyP38Jr3BIQ4+DSUv3+anfpJFGz1FouKI6Sow8oJCstJAx5CYM1AiHHVn5wTwBNW475kqWHTYaqwldYGjj2GwnB81QhVz+i4k4RLoMDi3BTOwoHC+hIwX6CrwumEgAAAIAWWDUYYg0b1ppVijCqU7VdXeS9FIdnlaA8puhVccSF5mzHcg4x0cQ1mWEnQjpFv1+/NsGdUoPidHWV2YQY6CRKP0xDN4nae/Cw1tKHOhnICGvrMVG8nZmDTnBUGDEGn22v4Mn5YGMAo+AclxcfYbpRnITCi4lqIHqgfm/YYWL1rA== grid@tqdb21
ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCyTO5PjRN3LAYgvXN6vVgTuRT6/ZMIi1ZwKiKYAzfDECwfVMZpgO27/67JhsS4QYkf0qpAHrRrNsTLiMJJ2n0xAQ4y1PcGeKLttsUH3w5+bkwJtIyLN2uVdne6Knc/WOwB4RjNO/iNSxIQhwSiuxdp2vZqfHI0R7hAu4x7gSwMUhGTyu5kOlzpb5G0Y8bdr+U41Cc4hgSFeRkTs2fBe4aMZTz3tmX0Qie7xEZm8RtAoq3D0lEjNGq+YziBlrDLhwTldaiR3itsQdqhXVweEq0b6jotou4eFPYwya+DayQikFGXVczwaWEFNRljNzMZ3MWQSbghLFJ3ZGwwrgSBk6yx grid@tqdb22
ssh-dss AAAAB3NzaC1kc3MAAACBALXYa30uXp5U1GHO64E/5hXPBxnfs6yq3Snzz4omSSPbrTkXodWhXsUx5ywCEDC9j35KdjP6yFpzQgL5f+5/8PLsxuBoC/m/r3sMrjsTYXA/302WpalZuAXFUU8EkRdSYlpMhLB3PfWgwn9XYfAJJ3G1bDXmmAFECmlMhruGGI2DAAAAFQC4BY4Fjoh8CHdzZHEZwbC+iYYpgQAAAIBF5XDGw0oBoSAOpNysk+rI4AZSQmTwVhuNVnJIFkARdbW1rHLLALFak+BdOSgwg0JkPqPAA3l/cthT8TdzxDKE5H4WhQVpM8noYYo8V5MuE78vtHObRVwZ8APOr9NAbQ8QvdgG5huhnMx1M6esWFJ8GORtZ1r/pcyfHf1oDubOrwAAAIAjU9QOuuwNyKQaJZM2v+8l6T1Qv8psAtve1nHGOk0repiBvG5B6ucmB7e3Ae6EMj5Gw/M8jhocs+uspB1FcKNhHyT/SW7lMoAfFKtT+PzZmaWTKsNZSGQ/HVCWwUr8o3uIgcnW0SpEDrthfsApEM+d5Mpr7Hxuz2vyccBU9g0WJA== grid@tqdb22
[grid@tqdb21: ~/.ssh]$ scp /home/grid/.ssh/authorized_keys grid@tqdb22:~/.ssh/authorized_keys
grid@tqdb22's password:
authorized_keys 100% 1988 2.3MB/s 00:00
[grid@tqdb21: ~/.ssh]$
```

-- 4. On node 2 (tqdb22): inspect the `authorized_keys` file copied (scp) from tqdb21

```
[grid@tqdb22: ~]$ cd ~/.ssh/
[grid@tqdb22: ~/.ssh]$ ll
total 20
-rw-r--r--. 1 grid oinstall 1988 Feb 12 00:43 authorized_keys
-rw-------. 1 grid oinstall 668 Feb 12 00:39 id_dsa
-rw-r--r--. 1 grid oinstall 601 Feb 12 00:39 id_dsa.pub
-rw-------. 1 grid oinstall 1675 Feb 12 00:39 id_rsa
-rw-r--r--. 1 grid oinstall 393 Feb 12 00:39 id_rsa.pub
[grid@tqdb22: ~/.ssh]$ cat authorized_keys
ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCyn//M6EKUXPcLk9lcennl3mN4CxFHupvFa6vR2eoGn40SNA1Yqtzhm+EI5I7O5JLT7zCUHu3aV8kIoXZdnivrw93VB8INeUIi9ZN98KDakq+nrORC3c9fZLnwCsNti+4Qy6vl2gy+fH0yR/vyhv8AVmIUe86jl8ql6TX5Xo2aS0YidD7okLumnKbCCK8HF58sPvr5j5fyMrq/w7xNhuq8jdrjOurVjcutu7u/xfSXCHvYnqbQDJbRj03bBOlhk63HYIgZYsB04/aMvngaq0lZ2XrXjBqfOq8OYBKLATgPyhoFDoD4IDymvrAm3Jsc3ZEy2wV3oOhiV895Kkvp4DtH grid@tqdb21
ssh-dss AAAAB3NzaC1kc3MAAACBAMmx0zcjtMOo8cIDWQamFqTKYN/ac0dHmRzpd2XeKTOUe7l2TeRiGilMIeyH+5i9CQ2bZzCPszd+KyJpf2BpKotsRKKlM+P09sDtDyteoTvCMyIGIvT24yQfriSFP3R5yKo0XJv0NEI6VX00wnsG5wzyaEad0FaSYdi/HlYar3MBAAAAFQCfQMu2F1kOm86SraJ2dL2m8ZZ8lwAAAIB9Tu2oCjL8wr2mDhBfaM7vg0bl6XEyYVm0b71uEzkklLKingyP38Jr3BIQ4+DSUv3+anfpJFGz1FouKI6Sow8oJCstJAx5CYM1AiHHVn5wTwBNW475kqWHTYaqwldYGjj2GwnB81QhVz+i4k4RLoMDi3BTOwoHC+hIwX6CrwumEgAAAIAWWDUYYg0b1ppVijCqU7VdXeS9FIdnlaA8puhVccSF5mzHcg4x0cQ1mWEnQjpFv1+/NsGdUoPidHWV2YQY6CRKP0xDN4nae/Cw1tKHOhnICGvrMVG8nZmDTnBUGDEGn22v4Mn5YGMAo+AclxcfYbpRnITCi4lqIHqgfm/YYWL1rA== grid@tqdb21
ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCyTO5PjRN3LAYgvXN6vVgTuRT6/ZMIi1ZwKiKYAzfDECwfVMZpgO27/67JhsS4QYkf0qpAHrRrNsTLiMJJ2n0xAQ4y1PcGeKLttsUH3w5+bkwJtIyLN2uVdne6Knc/WOwB4RjNO/iNSxIQhwSiuxdp2vZqfHI0R7hAu4x7gSwMUhGTyu5kOlzpb5G0Y8bdr+U41Cc4hgSFeRkTs2fBe4aMZTz3tmX0Qie7xEZm8RtAoq3D0lEjNGq+YziBlrDLhwTldaiR3itsQdqhXVweEq0b6jotou4eFPYwya+DayQikFGXVczwaWEFNRljNzMZ3MWQSbghLFJ3ZGwwrgSBk6yx grid@tqdb22
ssh-dss AAAAB3NzaC1kc3MAAACBALXYa30uXp5U1GHO64E/5hXPBxnfs6yq3Snzz4omSSPbrTkXodWhXsUx5ywCEDC9j35KdjP6yFpzQgL5f+5/8PLsxuBoC/m/r3sMrjsTYXA/302WpalZuAXFUU8EkRdSYlpMhLB3PfWgwn9XYfAJJ3G1bDXmmAFECmlMhruGGI2DAAAAFQC4BY4Fjoh8CHdzZHEZwbC+iYYpgQAAAIBF5XDGw0oBoSAOpNysk+rI4AZSQmTwVhuNVnJIFkARdbW1rHLLALFak+BdOSgwg0JkPqPAA3l/cthT8TdzxDKE5H4WhQVpM8noYYo8V5MuE78vtHObRVwZ8APOr9NAbQ8QvdgG5huhnMx1M6esWFJ8GORtZ1r/pcyfHf1oDubOrwAAAIAjU9QOuuwNyKQaJZM2v+8l6T1Qv8psAtve1nHGOk0repiBvG5B6ucmB7e3Ae6EMj5Gw/M8jhocs+uspB1FcKNhHyT/SW7lMoAfFKtT+PzZmaWTKsNZSGQ/HVCWwUr8o3uIgcnW0SpEDrthfsApEM+d5Mpr7Hxuz2vyccBU9g0WJA== grid@tqdb22
[grid@tqdb22: ~/.ssh]$
```

Test the connection on each node. When you run the following commands a second time, the system should no longer prompt for a password.

-- 5. On node 1 (tqdb21):
-- First run: answer `yes` at each host-key prompt

```
[grid@tqdb21: ~]$ ssh tqdb21 date
The authenticity of host 'tqdb21 (192.168.6.21)' can't be established.
ECDSA key fingerprint is SHA256:P/+G/d30l5VTPHHL9N6D+RpzvZ63gAIm+g9F6PeX80A.
ECDSA key fingerprint is MD5:20:22:52:f2:51:c2:bf:a3:80:29:0b:e3:3c:c7:07:49.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'tqdb21,192.168.6.21' (ECDSA) to the list of known hosts.
Wed Feb 12 00:45:51 CST 2020
[grid@tqdb21: ~]$ ssh tqdb22 date
Wed Feb 12 00:45:59 CST 2020
[grid@tqdb21: ~]$ ssh tqdb21-priv date
The authenticity of host 'tqdb21-priv (172.16.8.21)' can't be established.
ECDSA key fingerprint is SHA256:P/+G/d30l5VTPHHL9N6D+RpzvZ63gAIm+g9F6PeX80A.
ECDSA key fingerprint is MD5:20:22:52:f2:51:c2:bf:a3:80:29:0b:e3:3c:c7:07:49.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'tqdb21-priv,172.16.8.21' (ECDSA) to the list of known hosts.
Wed Feb 12 00:46:14 CST 2020
[grid@tqdb21: ~]$ ssh tqdb22-priv date
The authenticity of host 'tqdb22-priv (172.16.8.22)' can't be established.
ECDSA key fingerprint is SHA256:QT8z0WN0dmX3S0jnMcLe/MeraabCFvwlYKTmX/kKJ+o.
ECDSA key fingerprint is MD5:de:f8:90:99:5d:f1:05:5c:65:4b:fb:8b:0f:bc:63:7d.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'tqdb22-priv,172.16.8.22' (ECDSA) to the list of known hosts.
Wed Feb 12 00:46:25 CST 2020
[grid@tqdb21: ~]$
```

-- Second run: there is no `yes` prompt and the results return directly, which confirms that SSH equivalence is configured.

```
[grid@tqdb21: ~]$ ssh tqdb21 date
Wed Feb 12 00:47:08 CST 2020
[grid@tqdb21: ~]$ ssh tqdb22 date
Wed Feb 12 00:47:13 CST 2020
[grid@tqdb21: ~]$ ssh tqdb21-priv date
Wed Feb 12 00:47:18 CST 2020
[grid@tqdb21: ~]$ ssh tqdb22-priv date
Wed Feb 12 00:47:24 CST 2020
[grid@tqdb21: ~]$ date; ssh tqdb22 date
Wed Feb 12 00:47:36 CST 2020
Wed Feb 12 00:47:36 CST 2020
[grid@tqdb21: ~]$ date; ssh tqdb22-priv date
Wed Feb 12 00:47:52 CST 2020
Wed Feb 12 00:47:52 CST 2020
[grid@tqdb21: ~]$
```

-- 6. On node 2 (tqdb22):
-- First run: answer `yes` at each host-key prompt

```
[grid@tqdb22: ~]$ ssh tqdb21 date
The authenticity of host 'tqdb21 (192.168.6.21)' can't be established.
ECDSA key fingerprint is SHA256:P/+G/d30l5VTPHHL9N6D+RpzvZ63gAIm+g9F6PeX80A.
ECDSA key fingerprint is MD5:20:22:52:f2:51:c2:bf:a3:80:29:0b:e3:3c:c7:07:49.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'tqdb21,192.168.6.21' (ECDSA) to the list of known hosts.
Wed Feb 12 00:49:04 CST 2020
[grid@tqdb22: ~]$ ssh tqdb22 date
The authenticity of host 'tqdb22 (192.168.6.22)' can't be established.
ECDSA key fingerprint is SHA256:QT8z0WN0dmX3S0jnMcLe/MeraabCFvwlYKTmX/kKJ+o.
ECDSA key fingerprint is MD5:de:f8:90:99:5d:f1:05:5c:65:4b:fb:8b:0f:bc:63:7d.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'tqdb22,192.168.6.22' (ECDSA) to the list of known hosts.
Wed Feb 12 00:49:14 CST 2020
[grid@tqdb22: ~]$ ssh tqdb21-priv date
The authenticity of host 'tqdb21-priv (172.16.8.21)' can't be established.
ECDSA key fingerprint is SHA256:P/+G/d30l5VTPHHL9N6D+RpzvZ63gAIm+g9F6PeX80A.
ECDSA key fingerprint is MD5:20:22:52:f2:51:c2:bf:a3:80:29:0b:e3:3c:c7:07:49.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'tqdb21-priv,172.16.8.21' (ECDSA) to the list of known hosts.
Wed Feb 12 00:49:24 CST 2020
[grid@tqdb22: ~]$ ssh tqdb22-priv date
The authenticity of host 'tqdb22-priv (172.16.8.22)' can't be established.
ECDSA key fingerprint is SHA256:QT8z0WN0dmX3S0jnMcLe/MeraabCFvwlYKTmX/kKJ+o.
ECDSA key fingerprint is MD5:de:f8:90:99:5d:f1:05:5c:65:4b:fb:8b:0f:bc:63:7d.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'tqdb22-priv,172.16.8.22' (ECDSA) to the list of known hosts.
Wed Feb 12 00:49:34 CST 2020
[grid@tqdb22: ~]$
```

-- Second run: there is no `yes` prompt and the results return directly, which confirms that SSH equivalence is configured.

```
[grid@tqdb22: ~]$ ssh tqdb21 date
Wed Feb 12 00:49:58 CST 2020
[grid@tqdb22: ~]$ ssh tqdb22 date
Wed Feb 12 00:50:02 CST 2020
[grid@tqdb22: ~]$ ssh tqdb21-priv date
Wed Feb 12 00:50:08 CST 2020
[grid@tqdb22: ~]$ ssh tqdb22-priv date
Wed Feb 12 00:50:13 CST 2020
[grid@tqdb22: ~]$ date; ssh tqdb22 date
Wed Feb 12 00:50:27 CST 2020
Wed Feb 12 00:50:27 CST 2020
[grid@tqdb22: ~]$ date; ssh tqdb22-priv date
Wed Feb 12 00:50:39 CST 2020
Wed Feb 12 00:50:39 CST 2020
[grid@tqdb22: ~]$
```
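Before testing logins, you can sanity-check the merged `authorized_keys` file on each node. This is a minimal sketch that assumes bash with GNU `stat`. It also assumes that every key still carries the default `user@host` comment written by `ssh-keygen`, as in the logs above. The helper name `check_authorized_keys` is hypothetical. The function reports any node whose RSA or DSA key is missing. It also flags the file when group or others can write to it, because sshd's default `StrictModes yes` refuses to use such a file.

```shell
#!/usr/bin/env bash
# Sketch: verify that authorized_keys holds an RSA and a DSA key for every node.
# Usage (hypothetical helper): check_authorized_keys FILE USER HOST...
# Assumes keys end with the default "user@host" comment written by ssh-keygen.

check_authorized_keys() {
    local file="$1" user="$2"
    shift 2
    local problems=0 host type mode
    # sshd's default StrictModes rejects a file that group or others can write.
    mode=$(stat -c '%a' "$file") || return 1
    if (( 8#$mode & 8#022 )); then
        echo "unsafe permissions: $mode on $file"
        problems=$((problems + 1))
    fi
    for host in "$@"; do
        for type in ssh-rsa ssh-dss; do
            # One line per key: "<type> <base64 blob> user@host"
            if ! grep -Eq "^${type} [A-Za-z0-9+/=]+ ${user}@${host}\$" "$file"; then
                echo "missing: ${type} ${user}@${host}"
                problems=$((problems + 1))
            fi
        done
    done
    # Exit status = number of problems found (0 = file looks complete).
    return "$problems"
}
```

For example, run `check_authorized_keys ~/.ssh/authorized_keys grid tqdb21 tqdb22` as `grid` on both nodes. Pass `oracle` instead of `grid` to check the oracle user's file.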

2.22 Configure udev

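Notes:

/dev/sda is the local system disk; /dev/sdb, /dev/sdc, /dev/sdd, /dev/sde and /dev/sdf are shared disks.

Of these:

- /dev/sdb, /dev/sdc and /dev/sdd are 2 GB each and hold the OCR and voting disks.
- /dev/sde and /dev/sdf are 50 GB each and hold the DATA disk group.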

2.22.1 Configure multipath

-- Get the `scsi id` of the local disk:
[root@tqdb21: /dev]# /lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sda
1ATA_VBOX_HARDDISK_VB83906d1c-ce109a80
[root@tqdb21: /dev]#

-- Edit the configuration file: blacklist the local system disk `/dev/sda` by the `scsi id` obtained above (without the `1ATA_` prefix, see the notes in the file), and configure multipath aliases for sdb ~ sdf.
root# vim /etc/multipath.conf

```
## Notes for a VirtualBox environment:
## - The VirtualBox disk ID contains whitespace, which scsi_id turns into "_" only when
##   called with "--replace-whitespace". That is why this option appears in the
##   getuid_callout line below.
## - getuid_callout is rejected on CentOS 7, so both getuid_callout lines are commented out.
##   Leaving one active produces:
##     [root@tq1: /etc/multipath]# multipath -ll
##     Jan 15 17:20:22 | /etc/multipath.conf line 3, invalid keyword: getuid_callout
## - In VirtualBox, the wwid is the `uuid` listed under `paths list` in `multipath -v3` output
##   (e.g. `VBOX_HARDDISK_VB043f2aa4-f6c46e2f`), whereas `/lib/udev/scsi_id` prints
##   `1ATA_VBOX_HARDDISK_VB043f2aa4-f6c46e2f` (with an extra `1ATA_` prefix).
##   Every wwid in this file therefore omits the `1ATA_` prefix.

defaults {
    #getuid_callout "/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/%n"
    #getuid_callout "/lib/udev/scsi_id -g -u -d /dev/%n"
    user_friendly_names no
}

# Blacklist the local disk (/dev/sda)
blacklist {
    wwid VBOX_HARDDISK_VB83906d1c-ce109a80
    devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"
    devnode "^hd[a-z]"
    devnode "^cciss.*"
}

# Multipath aliases
multipaths {
    multipath {
        wwid VBOX_HARDDISK_VB0ca65770-cab71c4b
        alias ocr1
    }
    multipath {
        wwid VBOX_HARDDISK_VB724aeb83-ea9f4e0d
        alias ocr2
    }
    multipath {
        wwid VBOX_HARDDISK_VBe8c6318c-edea7981
        alias ocr3
    }
    multipath {
        wwid VBOX_HARDDISK_VBa1560564-d49dac72
        alias data01
    }
    multipath {
        wwid VBOX_HARDDISK_VB27f01f95-b61e143b
        alias data02
    }
}
```

-- Enable multipathd.service at boot, restart it and check its status:
root# systemctl enable multipathd.service
root# systemctl restart multipathd.service
root# systemctl status multipathd.service
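The same `scsi_id` value appears in two places: in `/etc/multipath.conf` without the `1ATA_` prefix, and in the udev rules of 2.22.2 with the prefix. Generating both entries from one device-to-alias mapping avoids copy errors. This is a minimal sketch that assumes bash and the VirtualBox `1ATA_` prefix observed above; real SAN storage may use a different prefix or none. The function names are hypothetical. The rule format, owner `grid`, group `asmadmin` and mode `0660` are copied from this document's `99-oracle-asmdevices.rules`.

```shell
#!/usr/bin/env bash
# Sketch: derive multipath.conf and udev entries from one (scsi_id, alias) pair.
# Assumption: VirtualBox disks, where scsi_id = "1ATA_" + multipath wwid.

# Strip the VirtualBox "1ATA_" prefix to get the multipath wwid.
scsi_id_to_wwid() {
    printf '%s\n' "${1#1ATA_}"
}

# Print one stanza for the multipaths { ... } section.
multipath_stanza() {
    local scsi_id="$1" alias="$2"
    printf '    multipath {\n        wwid %s\n        alias %s\n    }\n' \
        "$(scsi_id_to_wwid "$scsi_id")" "$alias"
}

# Print one line in the format of 99-oracle-asmdevices.rules.
# "$name" stays literal on purpose: udev substitutes it at rule evaluation time.
udev_rule() {
    local scsi_id="$1" alias="$2"
    printf 'KERNEL=="dm*",SUBSYSTEM=="block", PROGRAM=="/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="%s", SYMLINK+="asm-%s", OWNER="grid",GROUP="asmadmin", MODE="0660"\n' \
        "$scsi_id" "$alias"
}
```

For example, run `id=$(/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdb)` and then `multipath_stanza "$id" ocr1` and `udev_rule "$id" ocr1`. Review the printed text before pasting it into either file.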

2.22.2 Configure the udev rules (99-oracle-asmdevices.rules)

```
-- The `99-oracle-asmdevices.rules` file has the same content on both nodes.
[root@tqdb21: /etc/udev/rules.d]# vim 99-oracle-asmdevices.rules
-- File content:
# /dev/sdb multipath ==> `/dev/mapper/ocr1 -> ../dm-0`
KERNEL=="dm*",SUBSYSTEM=="block", PROGRAM=="/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VB0ca65770-cab71c4b", SYMLINK+="asm-ocr1", OWNER="grid",GROUP="asmadmin", MODE="0660"
# /dev/sdc multipath ==> `/dev/mapper/ocr2 -> ../dm-1`
KERNEL=="dm*",SUBSYSTEM=="block", PROGRAM=="/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VB724aeb83-ea9f4e0d", SYMLINK+="asm-ocr2", OWNER="grid",GROUP="asmadmin", MODE="0660"
# /dev/sdd multipath ==> `/dev/mapper/ocr3 -> ../dm-2`
KERNEL=="dm*",SUBSYSTEM=="block", PROGRAM=="/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VBe8c6318c-edea7981", SYMLINK+="asm-ocr3", OWNER="grid",GROUP="asmadmin", MODE="0660"
# /dev/sde multipath ==> `/dev/mapper/data01 -> ../dm-3`
KERNEL=="dm*",SUBSYSTEM=="block", PROGRAM=="/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VBa1560564-d49dac72", SYMLINK+="asm-data01", OWNER="grid",GROUP="asmadmin", MODE="0660"
# /dev/sdf multipath ==> `/dev/mapper/data02 -> ../dm-4`
KERNEL=="dm*",SUBSYSTEM=="block", PROGRAM=="/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VB27f01f95-b61e143b", SYMLINK+="asm-data02", OWNER="grid",GROUP="asmadmin", MODE="0660"
[root@tqdb21: /etc/udev/rules.d]#
```

-- Reload the rules and re-trigger udev:
root# udevadm control --reload-rules
root# udevadm trigger
root# systemctl status systemd-udevd.service
root# systemctl enable systemd-udevd.service

-- Check whether the `99-oracle-asmdevices.rules` rules took effect (symlink names and permissions):
root# ll /dev/asm-*
root# ll /dev/dm-*

-- Inspect the block-device storage information:
(echo -e "\nOutput:\n1. '/dev' and '/dev/mapper' directories (check the permissions):" && ls -l /dev/asm* /dev/mapper/data* /dev/mapper/ocr* /dev/dm* /dev/sd[b-f]) &&
(echo -e "\n2. Block devices:" && lsblk -f) &&
(echo -e "\n3. Multipath configuration:" && cat /etc/multipath.conf | grep -A3 "multipath {") &&
(echo -e "\n4. Device ID of '/dev/sdb' (note: the device ID has an extra '1ATA_' prefix compared with the wwid):" && /lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdb) &&
(echo -e "\n Device ID of '/dev/sdc' (note: the device ID has an extra '1ATA_' prefix compared with the wwid):" && /lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdc) &&
(echo -e "\n Device ID of '/dev/sdd' (note: the device ID has an extra '1ATA_' prefix compared with the wwid):" && /lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdd) &&
(echo -e "\n Device ID of '/dev/sde' (note: the device ID has an extra '1ATA_' prefix compared with the wwid):" && /lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sde) &&
(echo -e "\n Device ID of '/dev/sdf' (note: the device ID has an extra '1ATA_' prefix compared with the wwid):" && /lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdf)

Operation log on tqdb21:

[root@tqdb21: /etc/udev/rules.d]# cat 99-oracle-asmdevices.rules # /dev/sdb multipath ==> `/dev/mapper/ocr1 -> ../dm-0` KERNEL=="dm*",SUBSYSTEM=="block", PROGRAM=="/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VB0ca65770-cab71c4b", SYMLINK+="asm-ocr1", OWNER="grid",GROUP="asmadmin", MODE="0660" # /dev/sdc multipath ==> `/dev/mapper/ocr2 -> ../dm-1` KERNEL=="dm*",SUBSYSTEM=="block", PROGRAM=="/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VB724aeb83-ea9f4e0d", SYMLINK+="asm-ocr2", OWNER="grid",GROUP="asmadmin", MODE="0660" # /dev/sdd multipath ==> `/dev/mapper/ocr3 -> ../dm-2` KERNEL=="dm*",SUBSYSTEM=="block", PROGRAM=="/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VBe8c6318c-edea7981", SYMLINK+="asm-ocr3", OWNER="grid",GROUP="asmadmin", MODE="0660" # /dev/sde multipath ==> `/dev/mapper/data01 -> ../dm-3` KERNEL=="dm*",SUBSYSTEM=="block", PROGRAM=="/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VBa1560564-d49dac72", SYMLINK+="asm-data01", OWNER="grid",GROUP="asmadmin", MODE="0660" # /dev/sdf multipath ==> `/dev/mapper/data02 -> ../dm-4` KERNEL=="dm*",SUBSYSTEM=="block", PROGRAM=="/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VB27f01f95-b61e143b", SYMLINK+="asm-data02", OWNER="grid",GROUP="asmadmin", MODE="0660"
[root@tqdb21: /etc/udev/rules.d]#
[root@tqdb21: /etc/udev/rules.d]# udevadm control --reload-rules
[root@tqdb21: /etc/udev/rules.d]# udevadm trigger
[root@tqdb21: /etc/udev/rules.d]# systemctl status systemd-udevd.service ● systemd-udevd.service - udev Kernel Device Manager Loaded: loaded (/usr/lib/systemd/system/systemd-udevd.service; static; vendor preset: disabled) Active: active (running) since Tue 2020-02-11 22:06:25 CST; 4h 34min ago Docs: man:systemd-udevd.service(8) man:udev(7) Main PID: 484 (systemd-udevd) Status: "Processing with 10 children at max" Tasks: 1 CGroup: /system.slice/systemd-udevd.service └─484 /usr/lib/systemd/systemd-udevd Feb 11 22:06:25 tqdb21 systemd[1]: Starting udev Kernel Device Manager... Feb 11 22:06:25 tqdb21 systemd-udevd[484]: starting version 219 Feb 11 22:06:25 tqdb21 systemd[1]: Started udev Kernel Device Manager. Feb 11 22:06:36 tqdb21 kvm[1374]: 1 guest now active Feb 11 22:06:36 tqdb21 kvm[1375]: 0 guests now active Feb 11 22:06:36 tqdb21 kvm[1377]: 1 guest now active Feb 11 22:06:36 tqdb21 kvm[1379]: 0 guests now active Feb 11 22:06:36 tqdb21 kvm[1381]: 1 guest now active Feb 11 22:06:36 tqdb21 kvm[1386]: 0 guests now active
[root@tqdb21: /etc/udev/rules.d]# systemctl enable systemd-udevd.service
[root@tqdb21: /etc/udev/rules.d]#
[root@tqdb21: /etc/udev/rules.d]# ll /dev/asm-* lrwxrwxrwx. 1 root root 4 Feb 12 02:40 /dev/asm-data01 -> dm-3 lrwxrwxrwx. 1 root root 4 Feb 12 02:40 /dev/asm-data02 -> dm-4 lrwxrwxrwx. 1 root root 4 Feb 12 02:40 /dev/asm-ocr1 -> dm-0 lrwxrwxrwx. 1 root root 4 Feb 12 02:40 /dev/asm-ocr2 -> dm-1 lrwxrwxrwx. 1 root root 4 Feb 12 02:40 /dev/asm-ocr3 -> dm-2
[root@tqdb21: /etc/udev/rules.d]#
[root@tqdb21: /etc/udev/rules.d]# ll /dev/dm-* brw-rw----. 1 grid asmadmin 253, 0 Feb 12 02:40 /dev/dm-0 brw-rw----. 1 grid asmadmin 253, 1 Feb 12 02:40 /dev/dm-1 brw-rw----. 1 grid asmadmin 253, 2 Feb 12 02:40 /dev/dm-2 brw-rw----. 1 grid asmadmin 253, 3 Feb 12 02:40 /dev/dm-3 brw-rw----. 1 grid asmadmin 253, 4 Feb 12 02:40 /dev/dm-4
[root@tqdb21: /etc/udev/rules.d]#
[root@tqdb21: ~]# (echo -e "\nOutput:\n1. '/dev' and '/dev/mapper' directories (check the permissions):" && ls -l /dev/asm* /dev/mapper/data* /dev/mapper/ocr* /dev/dm* /dev/sd[b-f]) && > (echo -e "\n2. Block devices:" && lsblk -f) && > (echo -e "\n3. Multipath configuration:" && cat /etc/multipath.conf | grep -A3 "multipath {") && > (echo -e "\n4. Device ID of '/dev/sdb' (note: the device ID has an extra '1ATA_' prefix compared with the wwid):" && /lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdb) && > (echo -e "\n Device ID of '/dev/sdc' (note: the device ID has an extra '1ATA_' prefix compared with the wwid):" && /lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdc) && > (echo -e "\n Device ID of '/dev/sdd' (note: the device ID has an extra '1ATA_' prefix compared with the wwid):" && /lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdd) && > (echo -e "\n Device ID of '/dev/sde' (note: the device ID has an extra '1ATA_' prefix compared with the wwid):" && /lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sde) && > (echo -e "\n Device ID of '/dev/sdf' (note: the device ID has an extra '1ATA_' prefix compared with the wwid):" && /lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdf)
Output: 1. '/dev' and '/dev/mapper' directories (check the permissions): lrwxrwxrwx. 1 root root 4 Feb 12 03:24 /dev/asm-data01 -> dm-3 lrwxrwxrwx. 1 root root 4 Feb 12 03:24 /dev/asm-data02 -> dm-4 lrwxrwxrwx. 1 root root 4 Feb 12 03:24 /dev/asm-ocr1 -> dm-0 lrwxrwxrwx. 1 root root 4 Feb 12 03:24 /dev/asm-ocr2 -> dm-1 lrwxrwxrwx. 1 root root 4 Feb 12 03:24 /dev/asm-ocr3 -> dm-2 brw-rw----. 1 grid asmadmin 253, 0 Feb 12 03:24 /dev/dm-0 brw-rw----. 1 grid asmadmin 253, 1 Feb 12 03:24 /dev/dm-1 brw-rw----. 1 grid asmadmin 253, 2 Feb 12 03:24 /dev/dm-2 brw-rw----. 1 grid asmadmin 253, 3 Feb 12 03:24 /dev/dm-3 brw-rw----. 1 grid asmadmin 253, 4 Feb 12 03:24 /dev/dm-4 lrwxrwxrwx. 1 root root 7 Feb 12 03:24 /dev/mapper/data01 -> ../dm-3 lrwxrwxrwx. 1 root root 7 Feb 12 03:24 /dev/mapper/data02 -> ../dm-4 lrwxrwxrwx. 1 root root 7 Feb 12 03:24 /dev/mapper/ocr1 -> ../dm-0 lrwxrwxrwx. 1 root root 7 Feb 12 03:24 /dev/mapper/ocr2 -> ../dm-1 lrwxrwxrwx. 1 root root 7 Feb 12 03:24 /dev/mapper/ocr3 -> ../dm-2 brw-rw----. 1 root disk 8, 16 Feb 12 02:40 /dev/sdb brw-rw----. 1 root disk 8, 32 Feb 12 02:40 /dev/sdc brw-rw----. 1 root disk 8, 48 Feb 12 02:40 /dev/sdd brw-rw----. 1 root disk 8, 64 Feb 12 02:40 /dev/sde brw-rw----. 1 root disk 8, 80 Feb 12 02:40 /dev/sdf 2. Block devices: NAME FSTYPE LABEL UUID MOUNTPOINT sda ├─sda1 swap 323f3142-ccef-4b0d-a799-04007c4aa0a6 [SWAP] └─sda2 xfs 2579915f-aead-4a30-977c-8e39f5f4d491 / sdb mpath_member └─ocr1 sdc mpath_member └─ocr2 sdd mpath_member └─ocr3 sde mpath_member └─data01 sdf mpath_member └─data02 sr0 3. Multipath configuration: multipath { wwid VBOX_HARDDISK_VB0ca65770-cab71c4b alias ocr1 } multipath { wwid VBOX_HARDDISK_VB724aeb83-ea9f4e0d alias ocr2 } multipath { wwid VBOX_HARDDISK_VBe8c6318c-edea7981 alias ocr3 } multipath { wwid VBOX_HARDDISK_VBa1560564-d49dac72 alias data01 } multipath { wwid VBOX_HARDDISK_VB27f01f95-b61e143b alias data02 } 4. Device ID of '/dev/sdb' (note: the device ID has an extra '1ATA_' prefix compared with the wwid): 1ATA_VBOX_HARDDISK_VB0ca65770-cab71c4b Device ID of '/dev/sdc' (note: the device ID has an extra '1ATA_' prefix compared with the wwid): 1ATA_VBOX_HARDDISK_VB724aeb83-ea9f4e0d Device ID of '/dev/sdd' (note: the device ID has an extra '1ATA_' prefix compared with the wwid): 1ATA_VBOX_HARDDISK_VBe8c6318c-edea7981 Device ID of '/dev/sde' (note: the device ID has an extra '1ATA_' prefix compared with the wwid): 1ATA_VBOX_HARDDISK_VBa1560564-d49dac72 Device ID of '/dev/sdf' (note: the device ID has an extra '1ATA_' prefix compared with the wwid): 1ATA_VBOX_HARDDISK_VB27f01f95-b61e143b
[root@tqdb21: ~]#

Operation log on tqdb22:

```
[root@tqdb22: /etc/udev/rules.d]# cat 99-oracle-asmdevices.rules
# /dev/sdb multipath ==> `/dev/mapper/ocr1 -> ../dm-0`
KERNEL=="dm*",SUBSYSTEM=="block", PROGRAM=="/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VB0ca65770-cab71c4b", SYMLINK+="asm-ocr1", OWNER="grid",GROUP="asmadmin", MODE="0660"
# /dev/sdc multipath ==> `/dev/mapper/ocr2 -> ../dm-1`
KERNEL=="dm*",SUBSYSTEM=="block", PROGRAM=="/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VB724aeb83-ea9f4e0d", SYMLINK+="asm-ocr2", OWNER="grid",GROUP="asmadmin", MODE="0660"
# /dev/sdd multipath ==> `/dev/mapper/ocr3 -> ../dm-2`
KERNEL=="dm*",SUBSYSTEM=="block", PROGRAM=="/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VBe8c6318c-edea7981", SYMLINK+="asm-ocr3", OWNER="grid",GROUP="asmadmin", MODE="0660"
# /dev/sde multipath ==> `/dev/mapper/data01 -> ../dm-3`
KERNEL=="dm*",SUBSYSTEM=="block", PROGRAM=="/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VBa1560564-d49dac72", SYMLINK+="asm-data01", OWNER="grid",GROUP="asmadmin", MODE="0660"
# /dev/sdf multipath ==> `/dev/mapper/data02 -> ../dm-4`
KERNEL=="dm*",SUBSYSTEM=="block", PROGRAM=="/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="1ATA_VBOX_HARDDISK_VB27f01f95-b61e143b", SYMLINK+="asm-data02", OWNER="grid",GROUP="asmadmin", MODE="0660"
[root@tqdb22: /etc/udev/rules.d]#
[root@tqdb22: /etc/udev/rules.d]# udevadm control --reload-rules
[root@tqdb22: /etc/udev/rules.d]# udevadm trigger
[root@tqdb22: /etc/udev/rules.d]# systemctl status systemd-udevd.service
● systemd-udevd.service - udev Kernel Device Manager
   Loaded: loaded (/usr/lib/systemd/system/systemd-udevd.service; static; vendor preset: disabled)
   Active: active (running) since Tue 2020-02-11 22:06:28 CST; 4h 44min ago
     Docs: man:systemd-udevd.service(8)
           man:udev(7)
 Main PID: 486 (systemd-udevd)
   Status: "Processing with 10 children at max"
    Tasks: 1
   CGroup: /system.slice/systemd-udevd.service
           └─486 /usr/lib/systemd/systemd-udevd

Feb 11 22:06:28 tqdb22 systemd[1]: Starting udev Kernel Device Manager...
Feb 11 22:06:28 tqdb22 systemd-udevd[486]: starting version 219
Feb 11 22:06:28 tqdb22 systemd[1]: Started udev Kernel Device Manager.
Feb 11 22:06:39 tqdb22 kvm[1426]: 1 guest now active
Feb 11 22:06:39 tqdb22 kvm[1432]: 0 guests now active
Feb 11 22:06:39 tqdb22 kvm[1436]: 1 guest now active
Feb 11 22:06:39 tqdb22 kvm[1441]: 0 guests now active
Feb 11 22:06:39 tqdb22 kvm[1444]: 1 guest now active
Feb 11 22:06:39 tqdb22 kvm[1448]: 0 guests now active
[root@tqdb22: /etc/udev/rules.d]# systemctl enable systemd-udevd.service
[root@tqdb22: /etc/udev/rules.d]#
[root@tqdb22: /etc/udev/rules.d]# ll /dev/asm-*
lrwxrwxrwx. 1 root root 4 Feb 12 02:50 /dev/asm-data01 -> dm-3
lrwxrwxrwx. 1 root root 4 Feb 12 02:50 /dev/asm-data02 -> dm-4
lrwxrwxrwx. 1 root root 4 Feb 12 02:50 /dev/asm-ocr1 -> dm-0
lrwxrwxrwx. 1 root root 4 Feb 12 02:50 /dev/asm-ocr2 -> dm-1
lrwxrwxrwx. 1 root root 4 Feb 12 02:50 /dev/asm-ocr3 -> dm-2
[root@tqdb22: /etc/udev/rules.d]# ll /dev/dm-*
brw-rw----. 1 grid asmadmin 253, 0 Feb 12 02:50 /dev/dm-0
brw-rw----. 1 grid asmadmin 253, 1 Feb 12 02:50 /dev/dm-1
brw-rw----. 1 grid asmadmin 253, 2 Feb 12 02:50 /dev/dm-2
brw-rw----. 1 grid asmadmin 253, 3 Feb 12 02:50 /dev/dm-3
brw-rw----. 1 grid asmadmin 253, 4 Feb 12 02:50 /dev/dm-4
[root@tqdb22: /etc/udev/rules.d]#
[root@tqdb22: ~]# (echo -e "\nOutput:\n1. The '/dev' and '/dev/mapper' directories (check the permissions):" && ls -l /dev/asm* /dev/mapper/data* /dev/mapper/ocr* /dev/dm* /dev/sd[b-f]) &&
> (echo -e "\n2. Block devices:" && lsblk -f) &&
> (echo -e "\n3. Multipath configuration:" && cat /etc/multipath.conf | grep -A3 "multipath {") &&
> (echo -e "\n4. Device ID of '/dev/sdb' (note: the device ID is the wwid with an extra '1ATA_' prefix):" && /lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdb) &&
> (echo -e "\n Device ID of '/dev/sdc' (note: the device ID is the wwid with an extra '1ATA_' prefix):" && /lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdc) &&
> (echo -e "\n Device ID of '/dev/sdd' (note: the device ID is the wwid with an extra '1ATA_' prefix):" && /lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdd) &&
> (echo -e "\n Device ID of '/dev/sde' (note: the device ID is the wwid with an extra '1ATA_' prefix):" && /lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sde) &&
> (echo -e "\n Device ID of '/dev/sdf' (note: the device ID is the wwid with an extra '1ATA_' prefix):" && /lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sdf)

Output:
1. The '/dev' and '/dev/mapper' directories (check the permissions):
lrwxrwxrwx. 1 root root 4 Feb 12 03:30 /dev/asm-data01 -> dm-3
lrwxrwxrwx. 1 root root 4 Feb 12 03:30 /dev/asm-data02 -> dm-4
lrwxrwxrwx. 1 root root 4 Feb 12 03:30 /dev/asm-ocr1 -> dm-0
lrwxrwxrwx. 1 root root 4 Feb 12 03:30 /dev/asm-ocr2 -> dm-1
lrwxrwxrwx. 1 root root 4 Feb 12 03:30 /dev/asm-ocr3 -> dm-2
brw-rw----. 1 grid asmadmin 253, 0 Feb 12 03:30 /dev/dm-0
brw-rw----. 1 grid asmadmin 253, 1 Feb 12 03:30 /dev/dm-1
brw-rw----. 1 grid asmadmin 253, 2 Feb 12 03:30 /dev/dm-2
brw-rw----. 1 grid asmadmin 253, 3 Feb 12 03:30 /dev/dm-3
brw-rw----. 1 grid asmadmin 253, 4 Feb 12 03:30 /dev/dm-4
lrwxrwxrwx. 1 root root 7 Feb 12 03:30 /dev/mapper/data01 -> ../dm-3
lrwxrwxrwx. 1 root root 7 Feb 12 03:30 /dev/mapper/data02 -> ../dm-4
lrwxrwxrwx. 1 root root 7 Feb 12 03:30 /dev/mapper/ocr1 -> ../dm-0
lrwxrwxrwx. 1 root root 7 Feb 12 03:30 /dev/mapper/ocr2 -> ../dm-1
lrwxrwxrwx. 1 root root 7 Feb 12 03:30 /dev/mapper/ocr3 -> ../dm-2
brw-rw----. 1 root disk 8, 16 Feb 12 02:50 /dev/sdb
brw-rw----. 1 root disk 8, 32 Feb 12 02:50 /dev/sdc
brw-rw----. 1 root disk 8, 48 Feb 12 02:50 /dev/sdd
brw-rw----. 1 root disk 8, 64 Feb 12 02:50 /dev/sde
brw-rw----. 1 root disk 8, 80 Feb 12 02:50 /dev/sdf

2. Block devices:
NAME     FSTYPE       LABEL UUID                                 MOUNTPOINT
sda
├─sda1   swap               3114372b-8427-47ed-b2a6-092d33efcf5a [SWAP]
└─sda2   xfs                4e2d3b8d-2afa-447c-ae56-1cc0e2d39fe2 /
sdb      mpath_member
└─ocr1
sdc      mpath_member
└─ocr2
sdd      mpath_member
└─ocr3
sde      mpath_member
└─data01
sdf      mpath_member
└─data02
sr0

3. Multipath configuration:
    multipath {
        wwid VBOX_HARDDISK_VB0ca65770-cab71c4b
        alias ocr1
    }
    multipath {
        wwid VBOX_HARDDISK_VB724aeb83-ea9f4e0d
        alias ocr2
    }
    multipath {
        wwid VBOX_HARDDISK_VBe8c6318c-edea7981
        alias ocr3
    }
    multipath {
        wwid VBOX_HARDDISK_VBa1560564-d49dac72
        alias data01
    }
    multipath {
        wwid VBOX_HARDDISK_VB27f01f95-b61e143b
        alias data02
    }

4. Device ID of '/dev/sdb' (note: the device ID is the wwid with an extra '1ATA_' prefix):
1ATA_VBOX_HARDDISK_VB0ca65770-cab71c4b

 Device ID of '/dev/sdc' (note: the device ID is the wwid with an extra '1ATA_' prefix):
1ATA_VBOX_HARDDISK_VB724aeb83-ea9f4e0d

 Device ID of '/dev/sdd' (note: the device ID is the wwid with an extra '1ATA_' prefix):
1ATA_VBOX_HARDDISK_VBe8c6318c-edea7981

 Device ID of '/dev/sde' (note: the device ID is the wwid with an extra '1ATA_' prefix):
1ATA_VBOX_HARDDISK_VBa1560564-d49dac72

 Device ID of '/dev/sdf' (note: the device ID is the wwid with an extra '1ATA_' prefix):
1ATA_VBOX_HARDDISK_VB27f01f95-b61e143b
[root@tqdb22: ~]#
```
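The rules file above follows a fixed pattern: for each `multipath { wwid ... alias ... }` stanza in `/etc/multipath.conf`, the udev `RESULT` is the wwid with a `1ATA_` prefix (as step 4 of the check output shows for these VirtualBox disks) and the symlink is `asm-<alias>`. A minimal sketch that generates the rule lines from multipath.conf, so the two files cannot drift apart, follows. `gen_asm_rules` is a hypothetical helper, and the `1ATA_` prefix is an assumption that holds for this lab only; on real SAN storage, compare against the actual `scsi_id` output before using it.

```shell
#!/bin/sh
# Hypothetical helper: read multipath.conf on stdin and print one udev rule per
# "multipath { wwid ...; alias ... }" stanza, in the same format as
# 99-oracle-asmdevices.rules above.
# Assumption: the scsi_id device ID is the wwid plus a prefix ("1ATA_" for the
# VirtualBox ATA disks in this lab); pass a different prefix as $1 if needed.
gen_asm_rules() {
    prefix=${1:-1ATA_}
    awk -v prefix="$prefix" '
        $1 == "wwid"  { wwid = $2 }
        $1 == "alias" { alias = $2 }
        # Closing brace of a stanza: emit a rule once both fields were seen.
        $1 == "}" && wwid != "" && alias != "" {
            printf "KERNEL==\"dm*\",SUBSYSTEM==\"block\", PROGRAM==\"/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name\", RESULT==\"%s%s\", SYMLINK+=\"asm-%s\", OWNER=\"grid\",GROUP=\"asmadmin\", MODE=\"0660\"\n", prefix, wwid, alias
            wwid = ""; alias = ""
        }
    '
}

# Usage (as root; review the output before copying it into /etc/udev/rules.d):
#   gen_asm_rules < /etc/multipath.conf > /tmp/99-oracle-asmdevices.rules
```

Generating into `/tmp` first keeps the live rules file untouched until the output has been compared with the hand-written version.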

2.23 Reboot the OS

Once all of the steps above are complete, reboot the operating system.
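After the reboot it is worth confirming that the udev rules were applied again on both nodes. The `/dev/asm-*` links themselves are always owned by root (see the `ll /dev/asm-*` output above); what ASM needs is `grid:asmadmin` with mode `0660` on the `dm-N` device each link points to, which is why the sketch below uses `stat -L` to follow the link. `check_asm_perms` is a hypothetical helper, and `stat -c` is the GNU coreutils syntax available on CentOS 7.

```shell
#!/bin/sh
# Hypothetical helper: verify owner, group and octal mode of the devices behind
# the given paths. stat -L follows symlinks, so /dev/asm-ocr1 is checked via dm-0.
# Usage: check_asm_perms OWNER GROUP MODE PATH...
check_asm_perms() {
    want="$1:$2 $3"
    shift 3
    rc=0
    for dev in "$@"; do
        # %U:%G = owner:group names, %a = access rights in octal (e.g. 660)
        got=$(stat -L -c '%U:%G %a' "$dev" 2>/dev/null) || got="missing"
        if [ "$got" != "$want" ]; then
            echo "BAD  $dev: $got (expected $want)"
            rc=1
        else
            echo "OK   $dev"
        fi
    done
    return $rc
}

# Example, after the reboot, on each node:
#   check_asm_perms grid asmadmin 660 /dev/asm-*
```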

3. Software Installation and Configuration

3.1 GRID Installation

Extract the installation media into the grid user's $ORACLE_HOME. The files must be extracted directly into $ORACLE_HOME; otherwise the current directory will be treated as $ORACLE_HOME.

```
[grid@tqdb21: /Software]$ ll
total 5922412
drwxr-xr-x 2 root root 47 Feb 12 18:53 DB RU 19.6.0.0.200114
drwxr-xr-x 2 root root 47 Feb 12 18:53 GI RU 19.6.0.0.200114
-rwx------ 1 root root 3059705302 Feb 12 18:13 LINUX.X64_193000_db_home.zip
-rwx------ 1 grid oinstall 2889184573 Feb 12 18:13 LINUX.X64_193000_grid_home.zip
-rwx------ 1 root root 115653541 Feb 12 18:53 p6880880_190000_Linux-x86-64.zip
[grid@tqdb21: /Software]$ echo $ORACLE_HOME
/u01/app/19c/grid
[grid@tqdb21: /Software]$ unzip LINUX.X64_193000_grid_home.zip -d $ORACLE_HOME
[grid@tqdb21: /Software]$
```

Install the cvuqdisk-1.0.10-1.rpm package. It is not included on the Linux installation media; it ships with the Grid software, in the rpm directory under the cv directory of the extracted Grid home (this is step "2.11 Install the cvuqdisk package").

```
[root@tqdb21: ~]# cd $ORACLE_HOME/cv/rpm
[root@tqdb21: /u01/app/19c/grid/cv/rpm]# ll cvuqdisk-1.0.10-1.rpm
-rw-r--r-- 1 grid oinstall 11412 Mar 13 2019 cvuqdisk-1.0.10-1.rpm
[root@tqdb21: /u01/app/19c/grid/cv/rpm]# yum install cvuqdisk-1.0.10-1.rpm
Loaded plugins: fastestmirror, langpacks
Examining cvuqdisk-1.0.10-1.rpm: cvuqdisk-1.0.10-1.x86_64
Marking cvuqdisk-1.0.10-1.rpm to be installed
Resolving Dependencies
--> Running transaction check
---> Package cvuqdisk.x86_64 0:1.0.10-1 will be installed
--> Finished Dependency Resolution

Dependencies Resolved

==========================================================================
 Package        Arch        Version        Repository                Size
==========================================================================
Installing:
 cvuqdisk       x86_64      1.0.10-1       /cvuqdisk-1.0.10-1        22 k

Transaction Summary
==========================================================================
Install  1 Package

Total size: 22 k
Installed size: 22 k
Is this ok [y/d/N]: y
Downloading packages:
Running transaction check
Running transaction test
Transaction test succeeded
Running transaction
Using default group oinstall to install package
  Installing : cvuqdisk-1.0.10-1.x86_64        1/1
  Verifying  : cvuqdisk-1.0.10-1.x86_64        1/1

Installed:
  cvuqdisk.x86_64 0:1.0.10-1

Complete!
[root@tqdb21: /u01/app/19c/grid/cv/rpm]#
```
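Two details of this step are easy to get wrong: the zip must be extracted into `$ORACLE_HOME` itself, and cvuqdisk has to be installed on every node, not only on tqdb21. A minimal pre-check sketch, assuming the grid user's `ORACLE_HOME` from section 1.5; `check_grid_home` is a hypothetical name, and the `rpm -q` line in the usage note applies to RPM-based systems such as CentOS.

```shell
#!/bin/sh
# Hypothetical pre-check, run as grid before ./gridSetup.sh.
# Verifies that the Grid zip was extracted into $ORACLE_HOME itself and that
# the cvuqdisk RPM shipped with it is present under cv/rpm.
check_grid_home() {
    home=${ORACLE_HOME:-}
    if [ -z "$home" ]; then
        echo "ORACLE_HOME is not set" >&2
        return 1
    fi
    if [ ! -x "$home/gridSetup.sh" ]; then
        echo "no gridSetup.sh in $home: was the zip extracted with -d \$ORACLE_HOME?" >&2
        return 1
    fi
    if ! ls "$home"/cv/rpm/cvuqdisk-*.rpm >/dev/null 2>&1; then
        echo "no cvuqdisk RPM under $home/cv/rpm" >&2
        return 1
    fi
    echo "Grid home looks complete: $home"
}

# Usage (as grid):  check_grid_home
# Then on EVERY node, as root, confirm the package itself is installed:
#   rpm -q cvuqdisk
```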

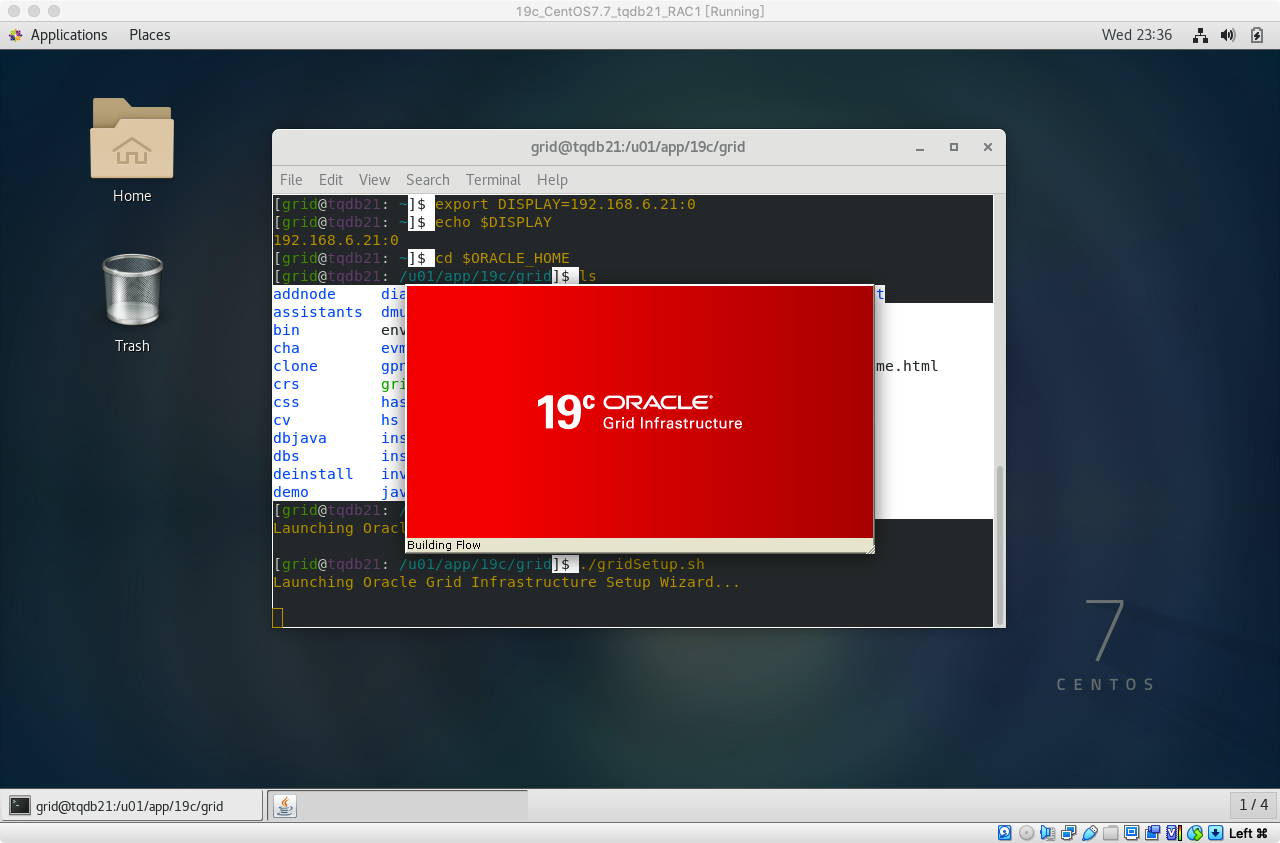

3.1.1 Enable X11 forwarding in sshd and start the graphical interface

Enable X11 forwarding in sshd so that the graphical installer can be displayed. Edit `/etc/ssh/sshd_config`:

```
X11Forwarding yes
X11DisplayOffset 10
X11UseLocalhost no
```

Restart the sshd service:

```
# systemctl restart sshd.service
# systemctl status sshd.service
```

Session log:

```
[root@tqdb21: ~]# vim /etc/ssh/sshd_config
X11Forwarding yes
X11DisplayOffset 10
X11UseLocalhost no
[root@tqdb21: ~]# systemctl restart sshd.service
[root@tqdb21: ~]# systemctl status sshd.service
● sshd.service - OpenSSH server daemon
   Loaded: loaded (/usr/lib/systemd/system/sshd.service; enabled; vendor preset: enabled)
   Active: active (running) since Wed 2020-02-12 23:18:33 CST; 2s ago
     Docs: man:sshd(8)
           man:sshd_config(5)
 Main PID: 5748 (sshd)
    Tasks: 1
   CGroup: /system.slice/sshd.service
           └─5748 /usr/sbin/sshd -D

Feb 12 23:18:33 tqdb21 systemd[1]: Starting OpenSSH server daemon...
Feb 12 23:18:33 tqdb21 sshd[5748]: Server listening on 0.0.0.0 port 22.
Feb 12 23:18:33 tqdb21 systemd[1]: Started OpenSSH server daemon.
[root@tqdb21: ~]#
[root@tqdb21: ~]# xdpyinfo | head
name of display: :0
version number: 11.0
vendor string: The X.Org Foundation
vendor release number: 12004000
X.Org version: 1.20.4
maximum request size: 16777212 bytes
motion buffer size: 256
bitmap unit, bit order, padding: 32, LSBFirst, 32
image byte order: LSBFirst
number of supported pixmap formats: 7
[root@tqdb21: ~]# su - grid
Last login: Wed Feb 12 23:24:52 CST 2020 on pts/1
[grid@tqdb21: ~]$ export DISPLAY=192.168.6.21:0
[grid@tqdb21: ~]$ echo $DISPLAY
192.168.6.21:0
[grid@tqdb21: ~]$
[grid@tqdb21: /u01/app/19c/grid]$ ./gridSetup.sh
Launching Oracle Grid Infrastructure Setup Wizard...
```

Notes on launching the GUI from macOS with XQuartz

Enable X11 forwarding in `/etc/ssh/sshd_config` (`X11Forwarding yes`, `X11DisplayOffset 10`, `X11UseLocalhost no`) and restart sshd, exactly as above. On the test host tq1 this looked as follows:

```
[root@tq1: ~]# systemctl status sshd.service
● sshd.service - OpenSSH server daemon
   Loaded: loaded (/usr/lib/systemd/system/sshd.service; enabled; vendor preset: enabled)
   Active: active (running) since Fri 2020-01-17 12:47:42 CST; 5s ago
     Docs: man:sshd(8)
           man:sshd_config(5)
 Main PID: 18971 (sshd)
    Tasks: 1
   CGroup: /system.slice/sshd.service
           └─18971 /usr/sbin/sshd -D

Jan 17 12:47:42 tq1 systemd[1]: Stopped OpenSSH server daemon.
Jan 17 12:47:42 tq1 systemd[1]: Starting OpenSSH server daemon...
Jan 17 12:47:42 tq1 sshd[18971]: Server listening on 0.0.0.0 port 22.
Jan 17 12:47:42 tq1 systemd[1]: Started OpenSSH server daemon.
[root@tq1: ~]#
```

```
[root@tq1: ~]# xdpyinfo | head
name of display: 192.168.6.10:10.0
version number: 11.0
vendor string: The X.Org Foundation
vendor release number: 11804000
X.Org version: 1.18.4
maximum request size: 16777212 bytes
motion buffer size: 256
bitmap unit, bit order, padding: 32, LSBFirst, 32
image byte order: LSBFirst
number of supported pixmap formats: 7
[root@tq1: ~]# su - grid
Last login: Fri Jan 17 11:44:18 CST 2020 on pts/4
[grid@tq1: ~]$ export DISPLAY=192.168.6.10:10.0
[grid@tq1: ~]$ echo $DISPLAY
192.168.6.10:10.0
[grid@tq1: ~]$
```

In practice:

1. From XQuartz, log in to the server as root with `ssh -X` and run `xhost +`:

```
/Users/tq > ssh -X root@<server-ip>
root@<server-ip>'s password:
Last login: Fri Jan 17 11:43:40 2020 from 192.168.6.6
[root@tq1: ~]# xhost +
access control disabled, clients can connect from any host
[root@tq1: ~]#
```

2. From XQuartz, log in to the server as the grid or oracle user; graphical programs can then be started directly:

```
/Users/tq > ssh -X grid@<server-ip>
grid@<server-ip>'s password:
Last login: Fri Jan 17 12:41:26 2020 from 192.168.6.6
[grid@tq1: ~]$ echo $DISPLAY
192.168.6.10:10.0
[grid@tq1: ~]$ xauth list
tq1:10 MIT-MAGIC-COOKIE-1 62299f9d0c67b1804c36fe7ea6783fda
[grid@tq1: ~]$
[grid@tq1: ~]$ xclock
[grid@tq1: ~]$ xeyes
[grid@tq1: ~]$
```
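Before restarting sshd, the X11 settings above can be checked mechanically. The sketch below reads an sshd_config-style file and reports whether `X11Forwarding` is `yes` and `X11UseLocalhost` is `no`. It is deliberately simplified: it ignores `Match` blocks and `Include` files and only understands whitespace-separated `Keyword value` lines, although, like sshd, it takes the first occurrence of a keyword as the effective value. `sshd_conf_value` and `check_x11` are hypothetical names. On a live system, `sshd -T` (run as root) prints the effective configuration and is the more authoritative check.

```shell
#!/bin/sh
# Hypothetical helper: print the value of an sshd_config keyword from FILE.
# Simplified on purpose: keywords match case-insensitively, the first
# occurrence wins (as in sshd), only "Keyword value" lines are understood,
# and Match/Include directives are NOT evaluated.
# Usage: sshd_conf_value KEYWORD [FILE]
sshd_conf_value() {
    key=$(printf '%s' "$1" | tr 'A-Z' 'a-z')
    awk -v key="$key" '
        /^[ \t]*#/ { next }                        # skip comment lines
        tolower($1) == key { print $2; found = 1; exit }
        END { if (!found) exit 1 }
    ' "${2:-/etc/ssh/sshd_config}"
}

# Check the two settings that matter for the remote X display in this guide.
check_x11() {
    conf=${1:-/etc/ssh/sshd_config}
    if [ "$(sshd_conf_value X11Forwarding "$conf")" != "yes" ]; then
        echo "X11Forwarding is not set to yes in $conf"; return 1
    fi
    if [ "$(sshd_conf_value X11UseLocalhost "$conf")" != "no" ]; then
        echo "X11UseLocalhost is not set to no in $conf"; return 1
    fi
    echo "X11 forwarding settings OK in $conf"
}

# Usage (as root):  check_x11 && systemctl restart sshd.service
```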

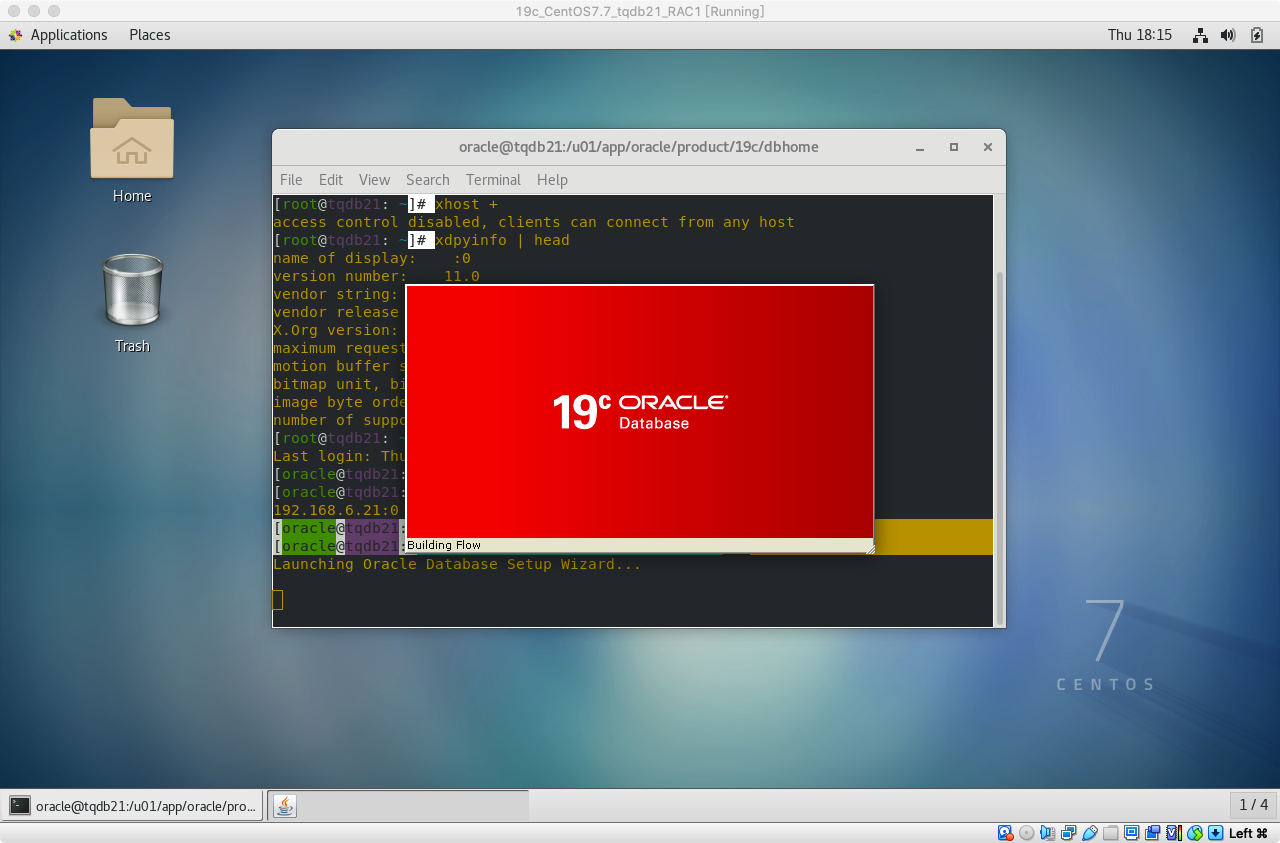

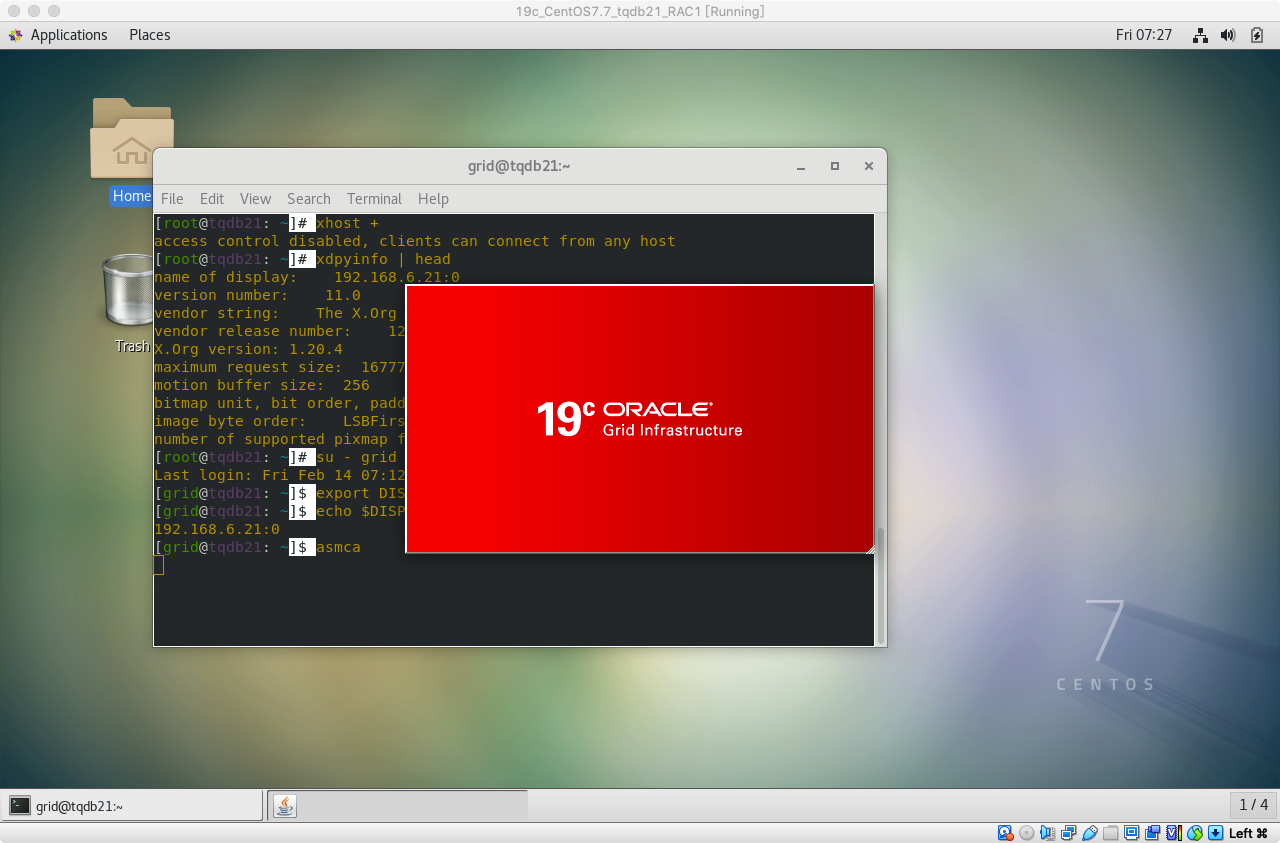

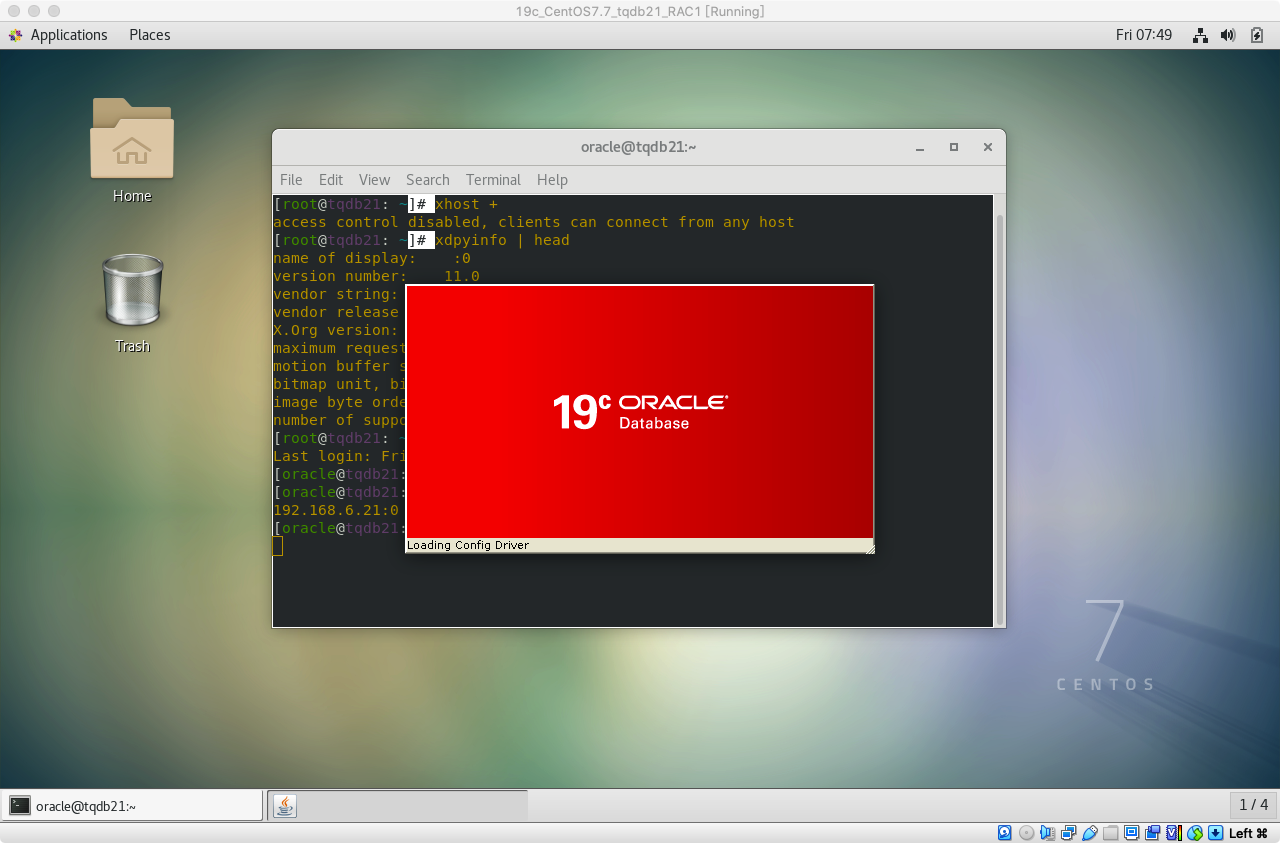

3.1.2 Log in as the grid user and run the graphical installer:

```
[root@tqdb21: ~]# xhost +
access control disabled, clients can connect from any host
[root@tqdb21: ~]#
[root@tqdb21: ~]# xdpyinfo | head
name of display: :0
version number: 11.0
vendor string: The X.Org Foundation
vendor release number: 12004000
X.Org version: 1.20.4
maximum request size: 16777212 bytes
motion buffer size: 256
bitmap unit, bit order, padding: 32, LSBFirst, 32
image byte order: LSBFirst
number of supported pixmap formats: 7
[root@tqdb21: ~]# su - grid
Last login: Wed Feb 12 23:24:52 CST 2020 on pts/1
[grid@tqdb21: ~]$ export DISPLAY=192.168.6.21:0
[grid@tqdb21: ~]$ echo $DISPLAY
192.168.6.21:0
[grid@tqdb21: ~]$ cd $ORACLE_HOME
[grid@tqdb21: /u01/app/19c/grid]$ ./gridSetup.sh
```
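Note that `xhost +` disables X access control for every host, which is acceptable on an isolated lab network; elsewhere it is worth re-enabling access control with `xhost -` once the installer has finished. The sketch below is a hypothetical pre-check (`check_display` is not an Oracle tool) that the grid user could run before `./gridSetup.sh`: it confirms that `DISPLAY` is set and that the X server it names accepts connections, using `xdpyinfo` as in the logs above.

```shell
#!/bin/sh
# Hypothetical pre-check for the grid user before ./gridSetup.sh:
# DISPLAY must be set and the X server it names must accept a connection.
check_display() {
    if [ -z "${DISPLAY:-}" ]; then
        echo "DISPLAY is not set (for example: export DISPLAY=192.168.6.21:0)" >&2
        return 1
    fi
    if ! command -v xdpyinfo >/dev/null 2>&1; then
        echo "xdpyinfo is not installed, cannot test $DISPLAY" >&2
        return 1
    fi
    # xdpyinfo exits non-zero when it cannot open the display.
    if ! xdpyinfo >/dev/null 2>&1; then
        echo "cannot open display $DISPLAY (check xhost on the X server side)" >&2
        return 1
    fi
    echo "display $DISPLAY is reachable"
}

# Usage (as grid):
#   export DISPLAY=192.168.6.21:0
#   check_display && ./gridSetup.sh
```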

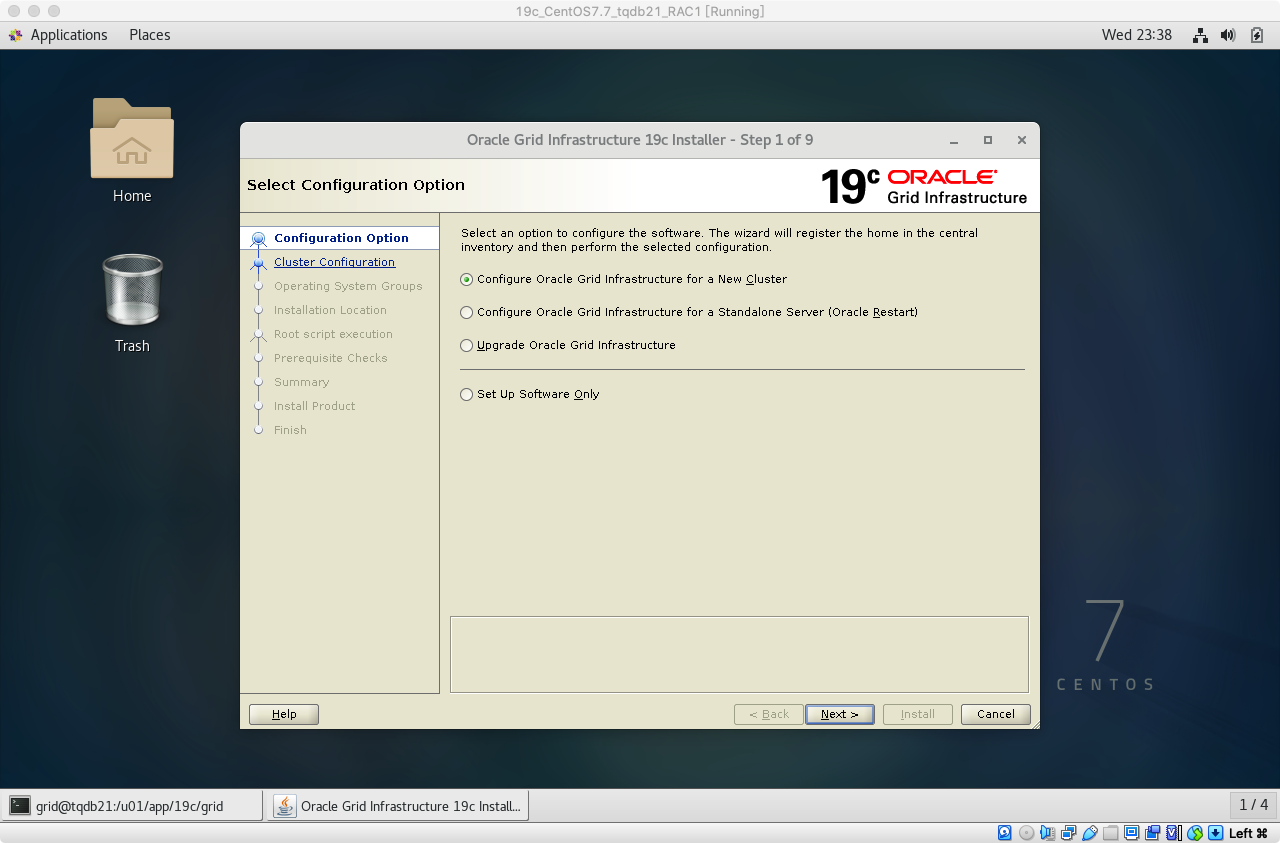

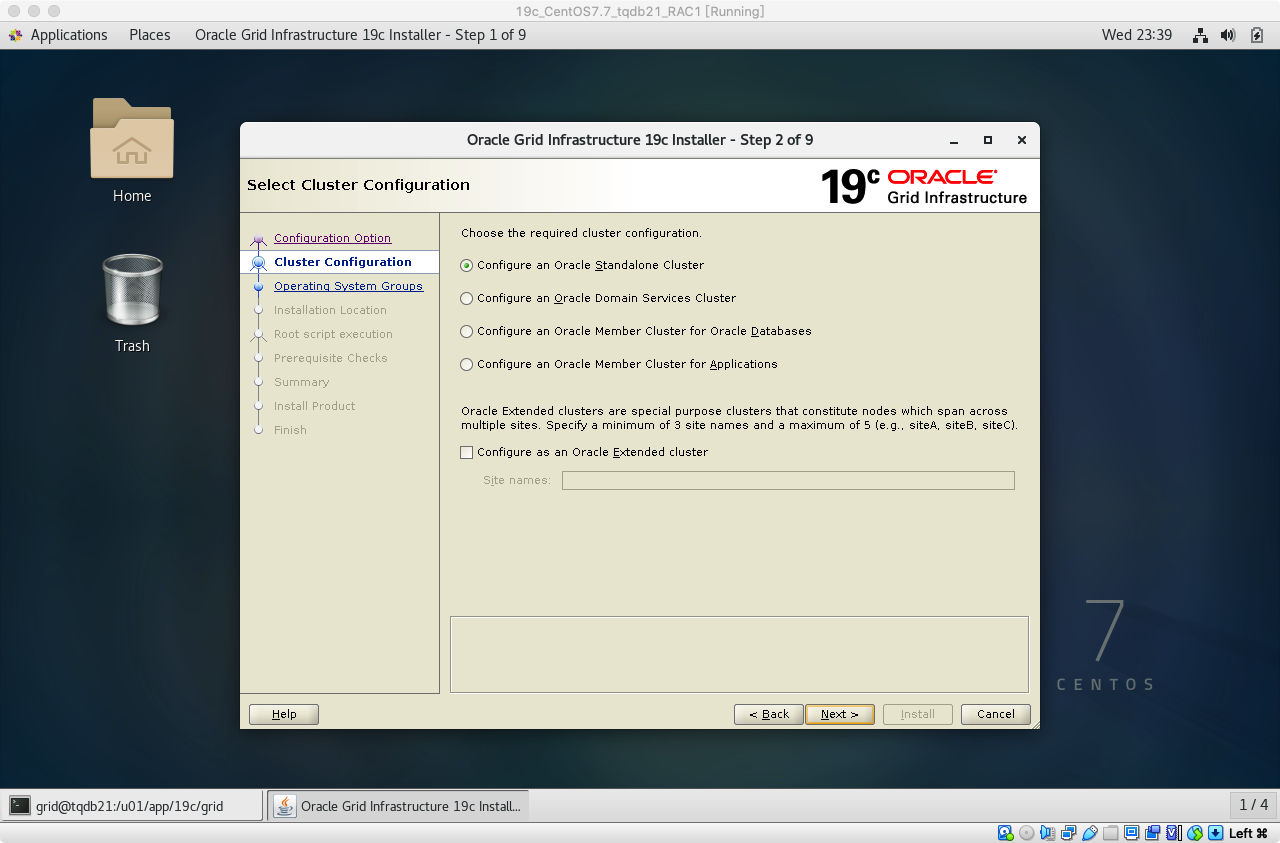

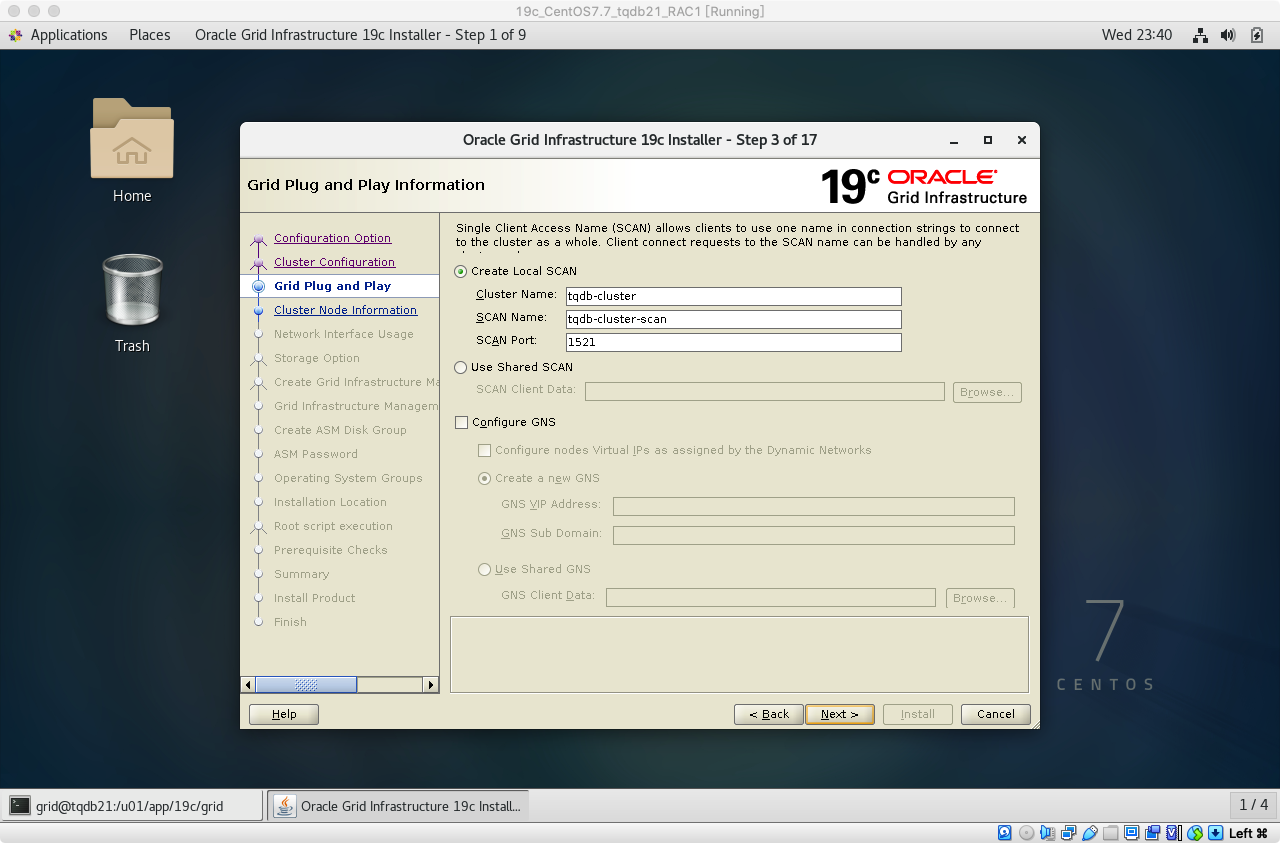

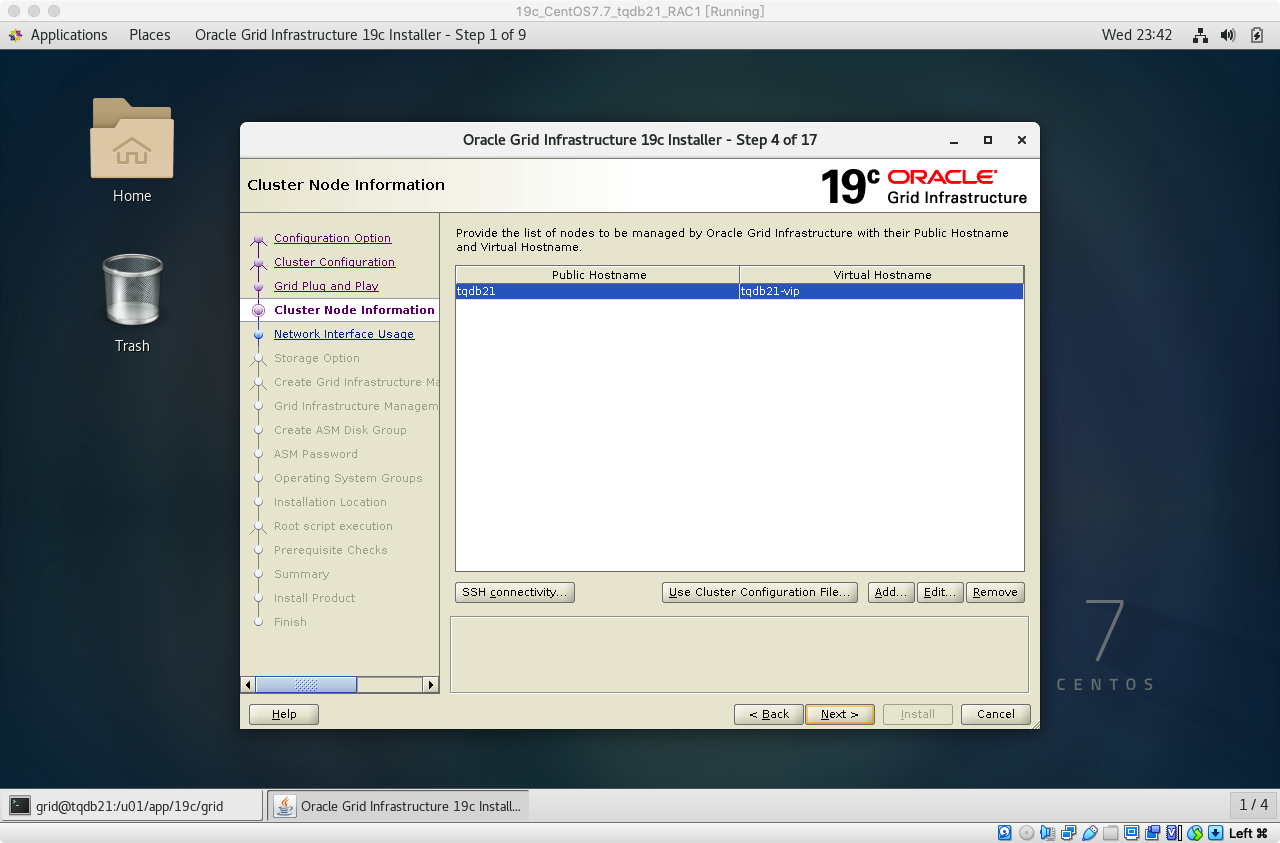

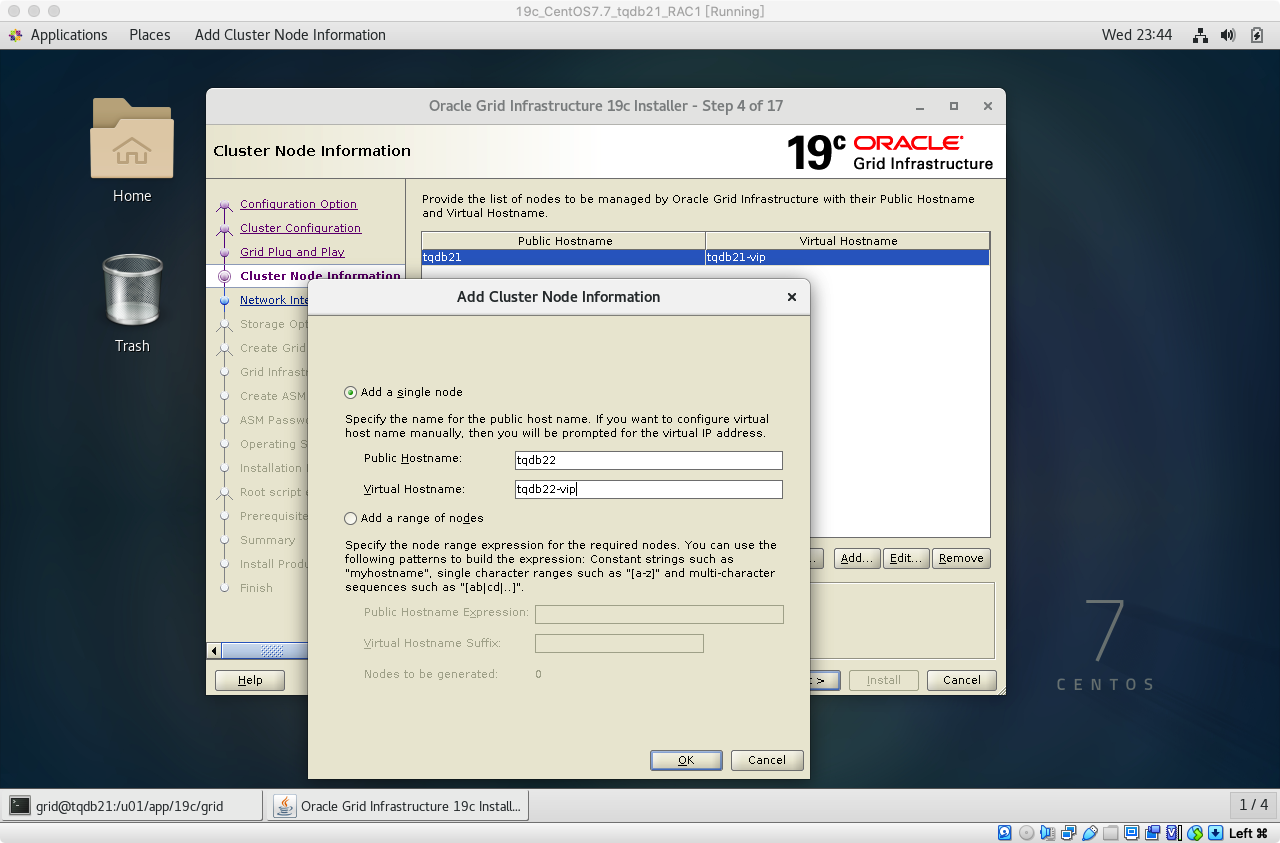

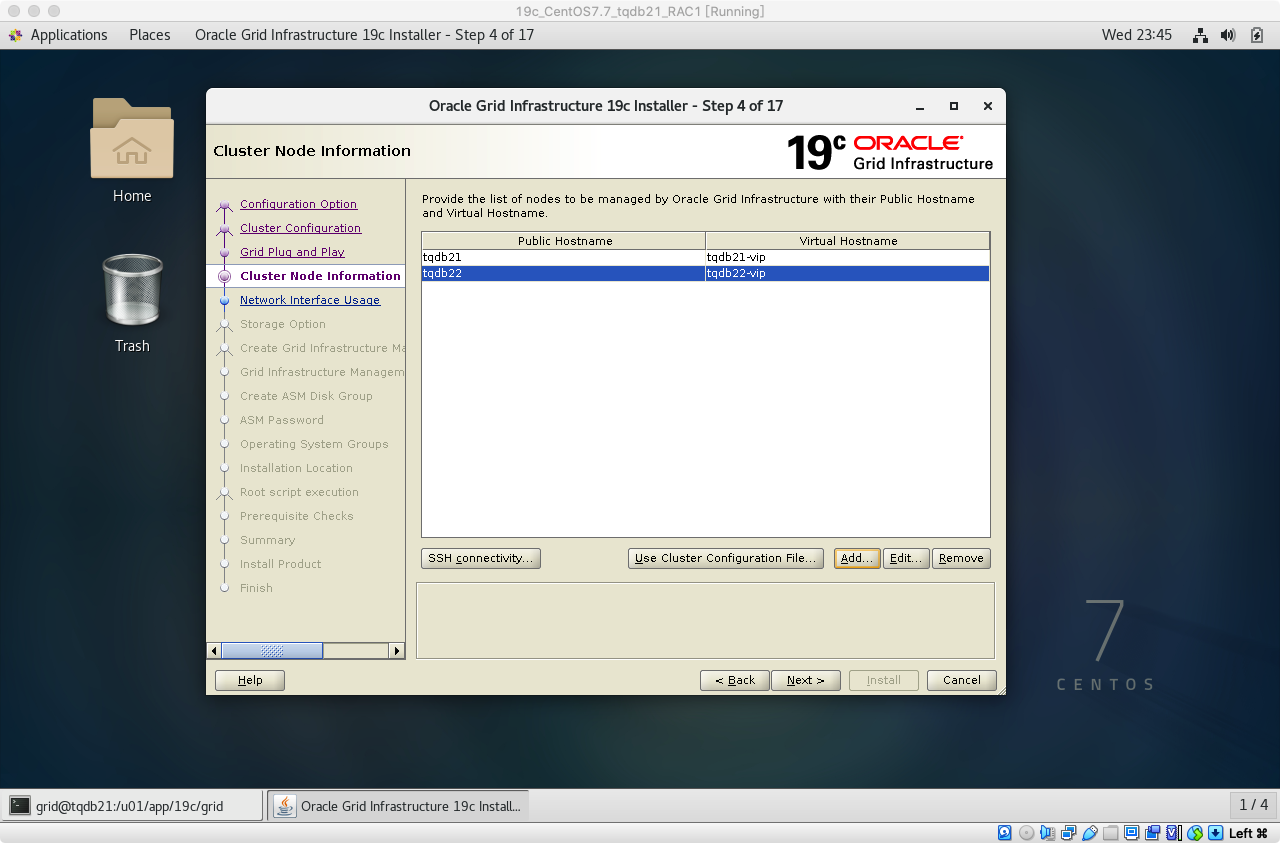

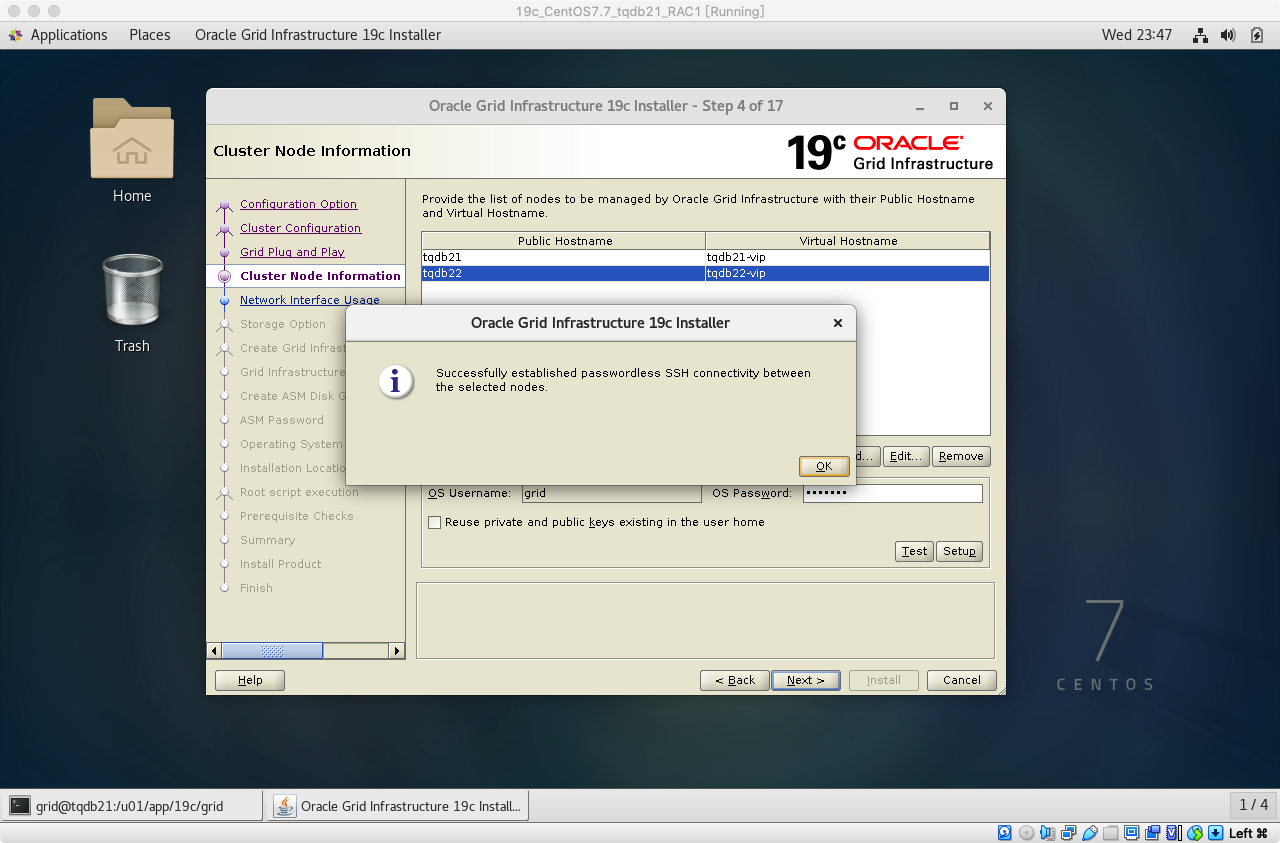

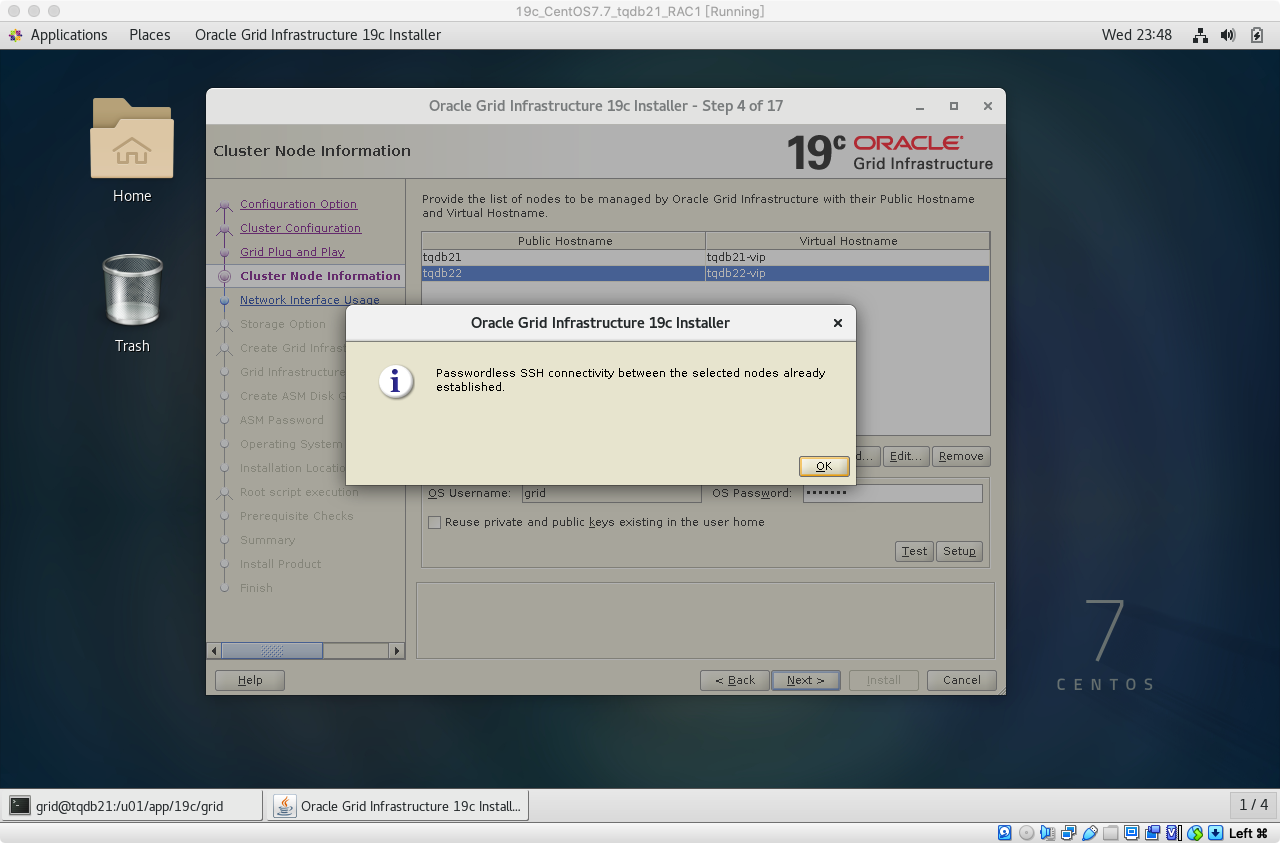

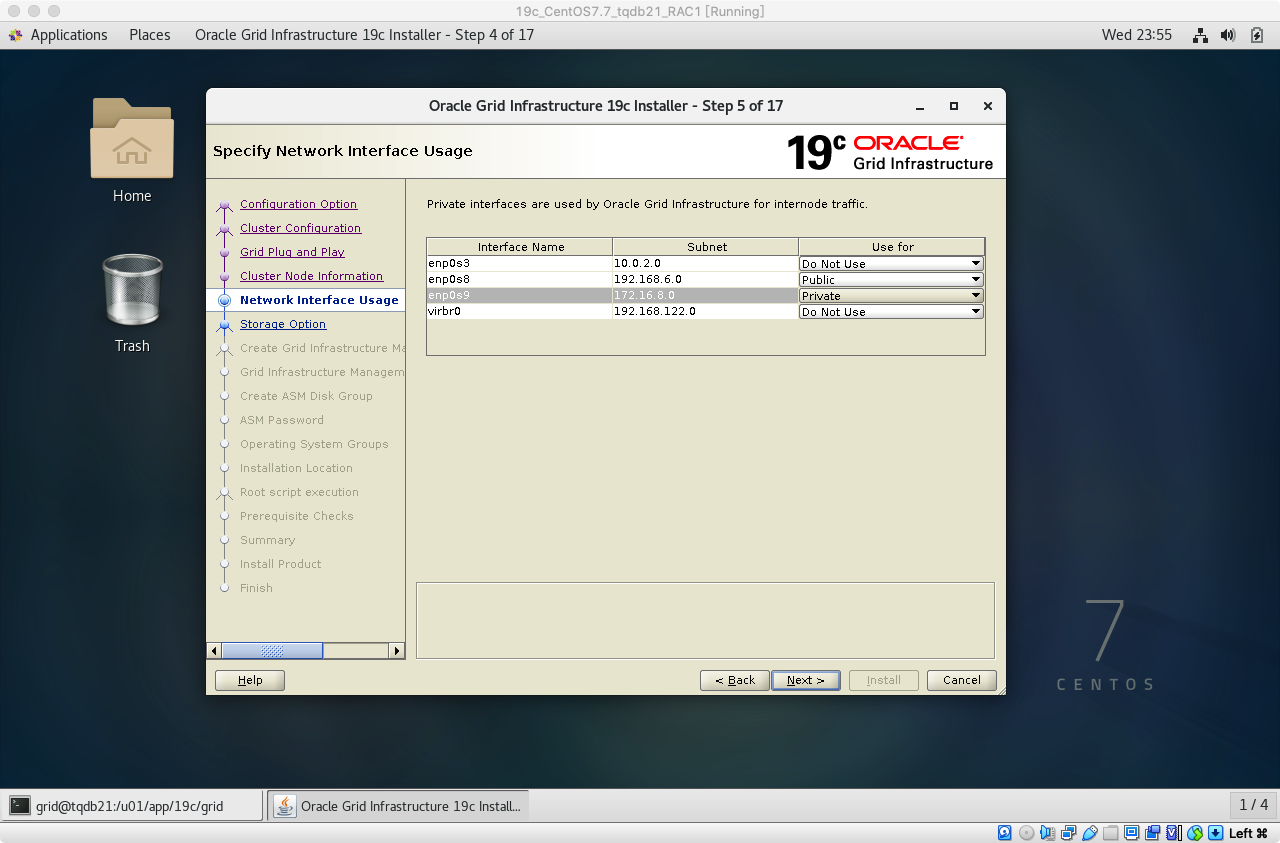

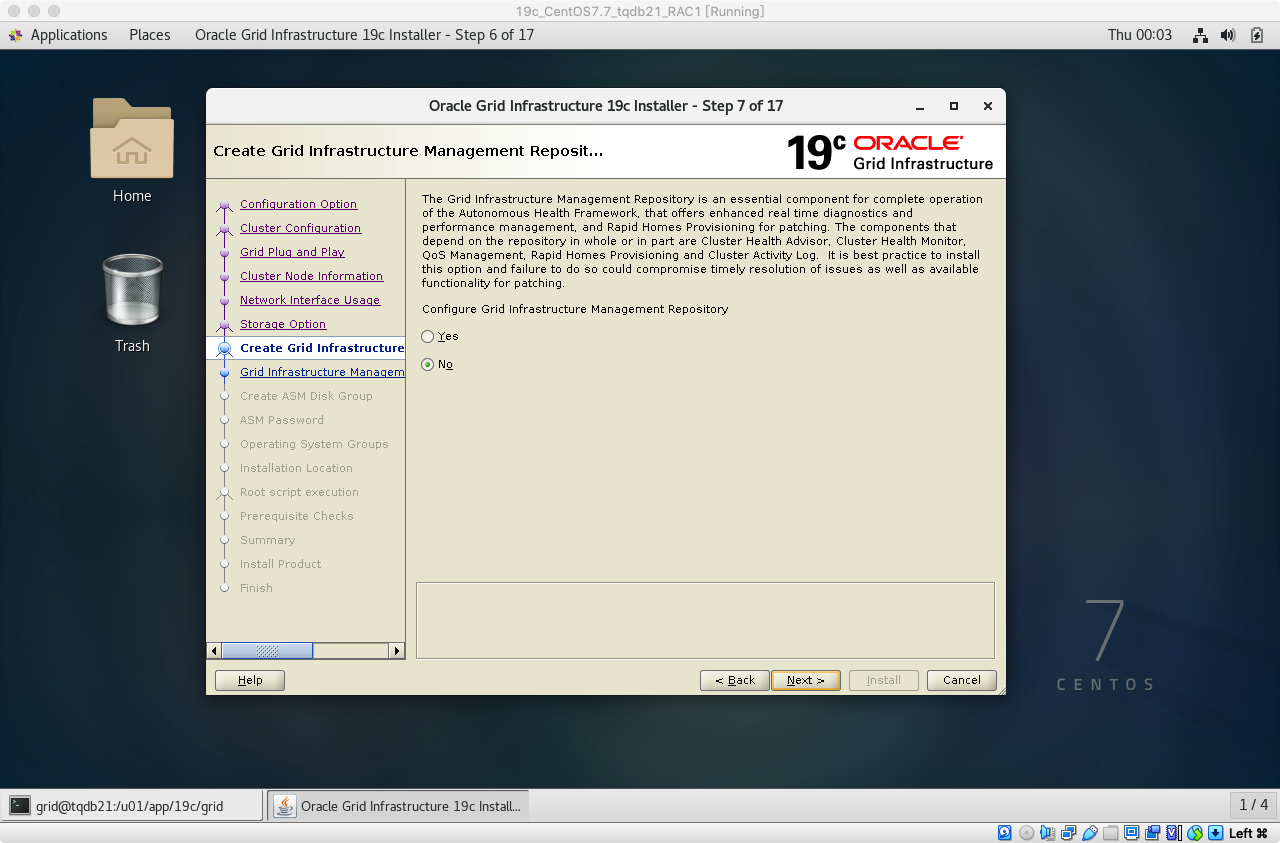

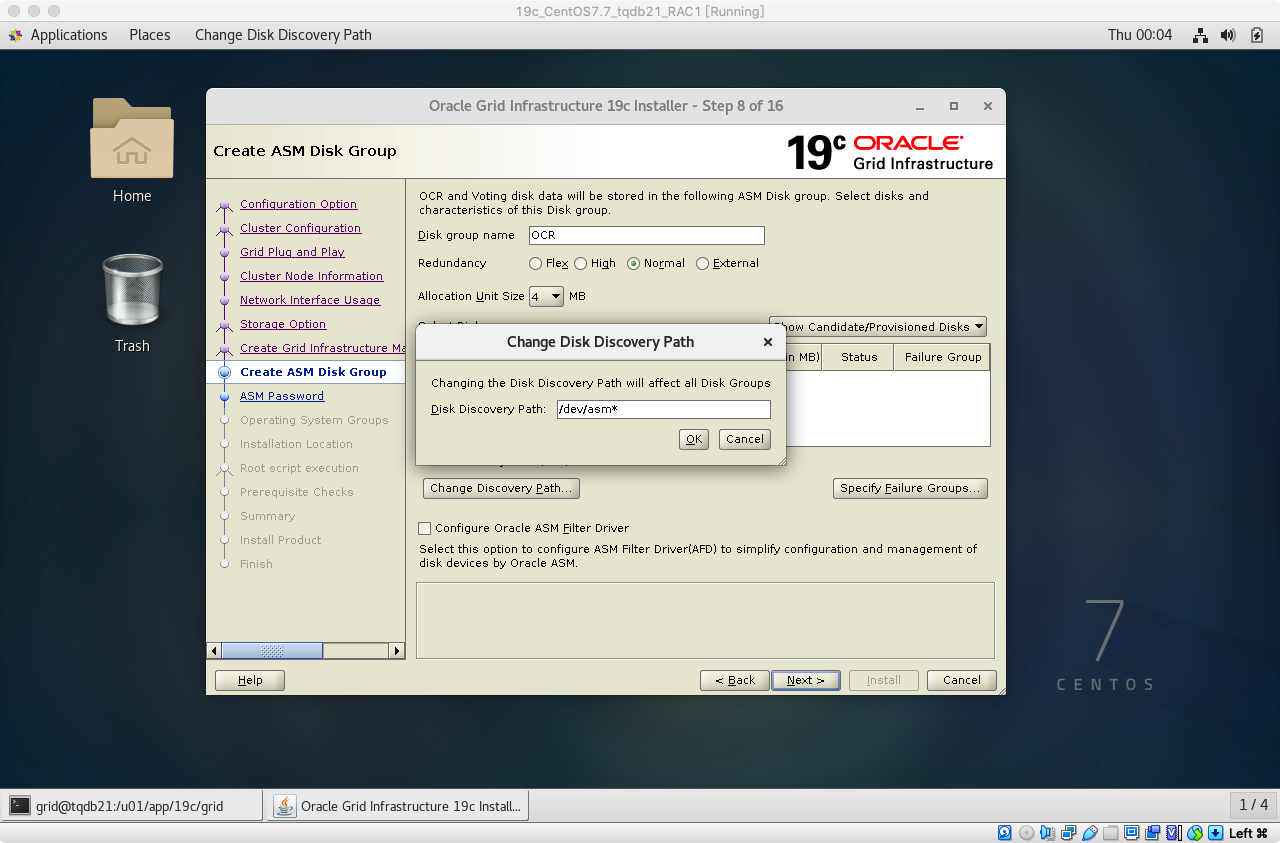

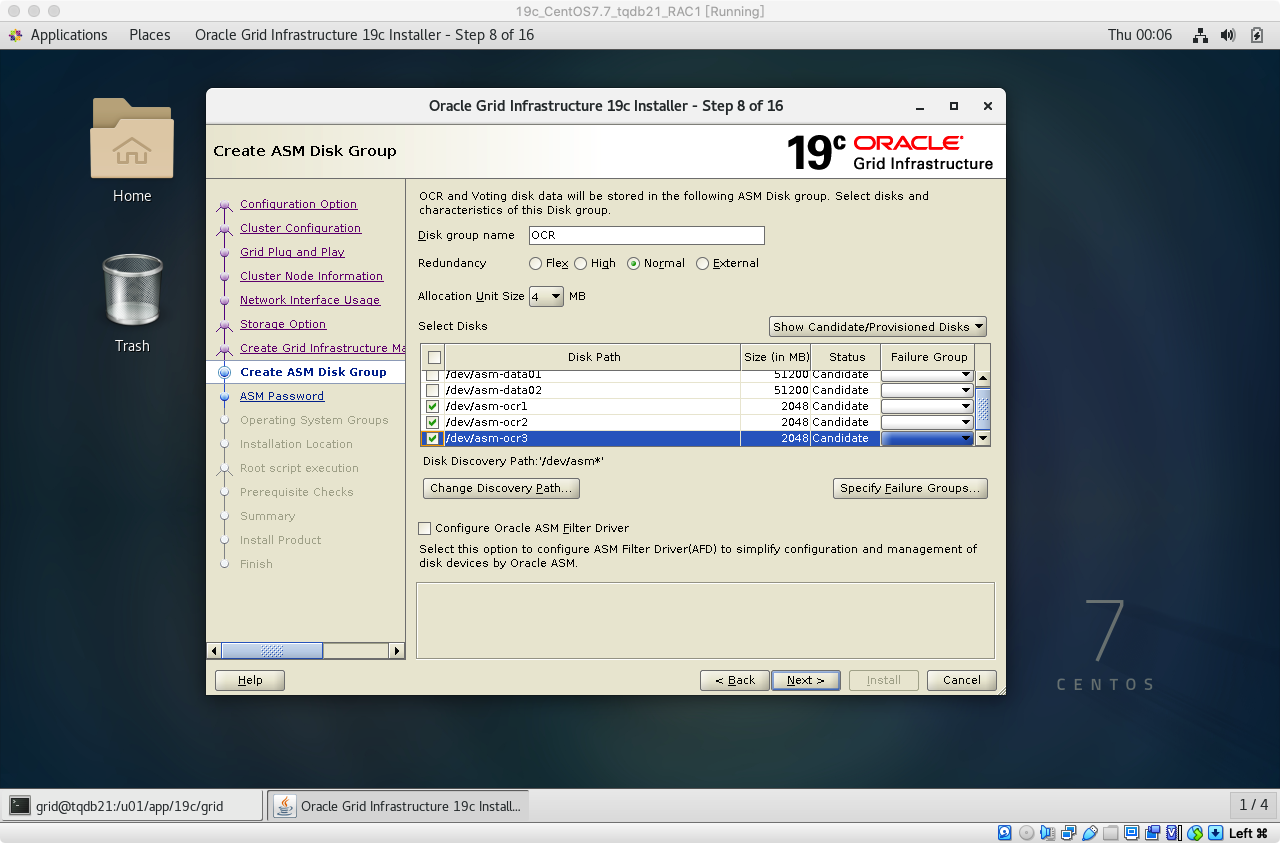

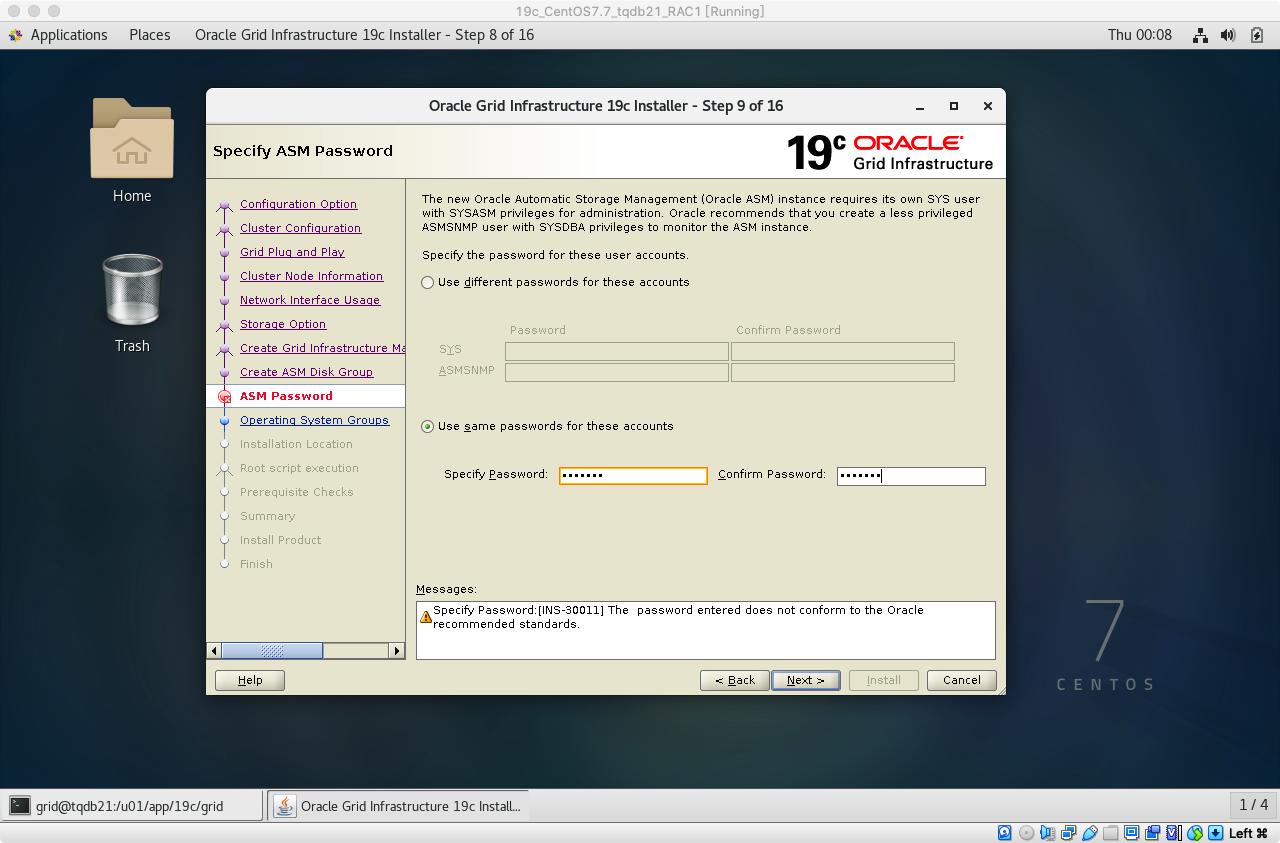

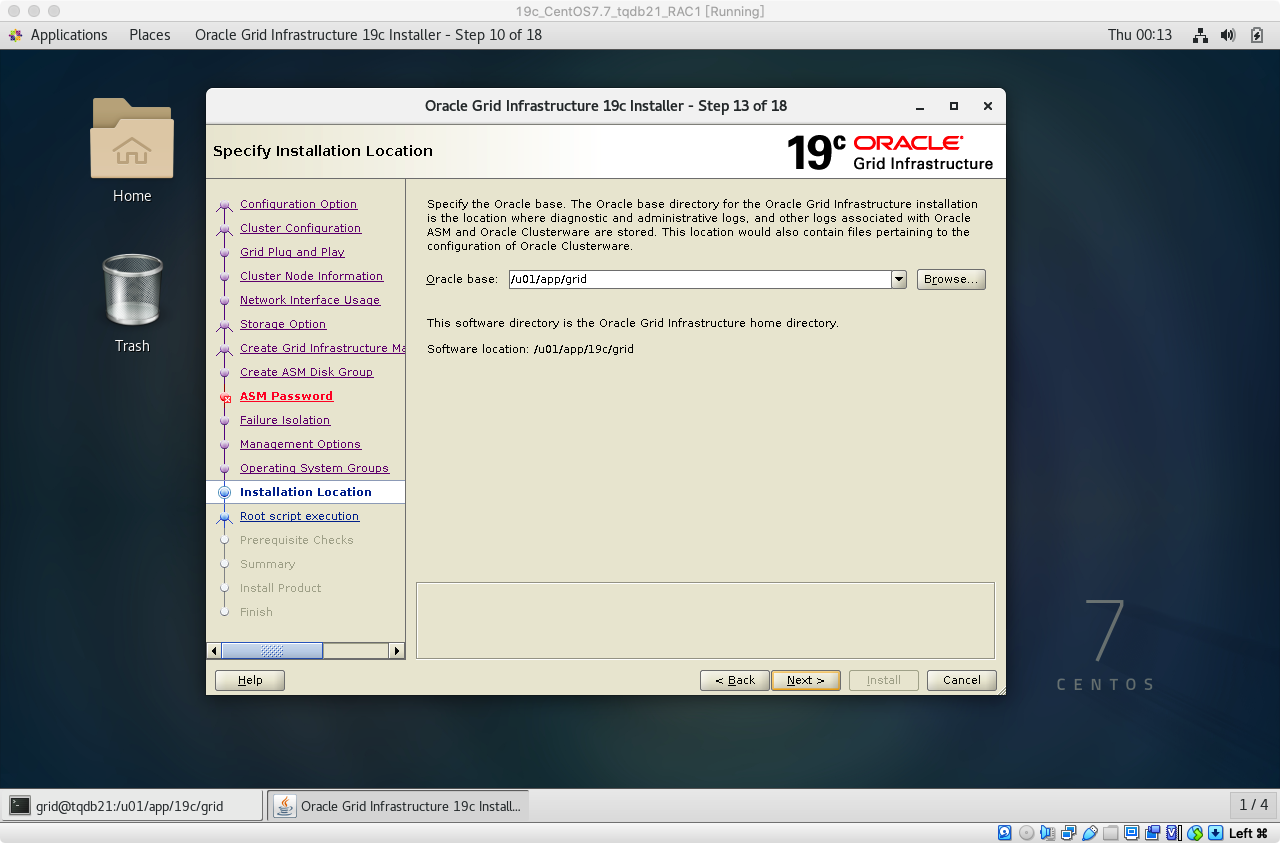

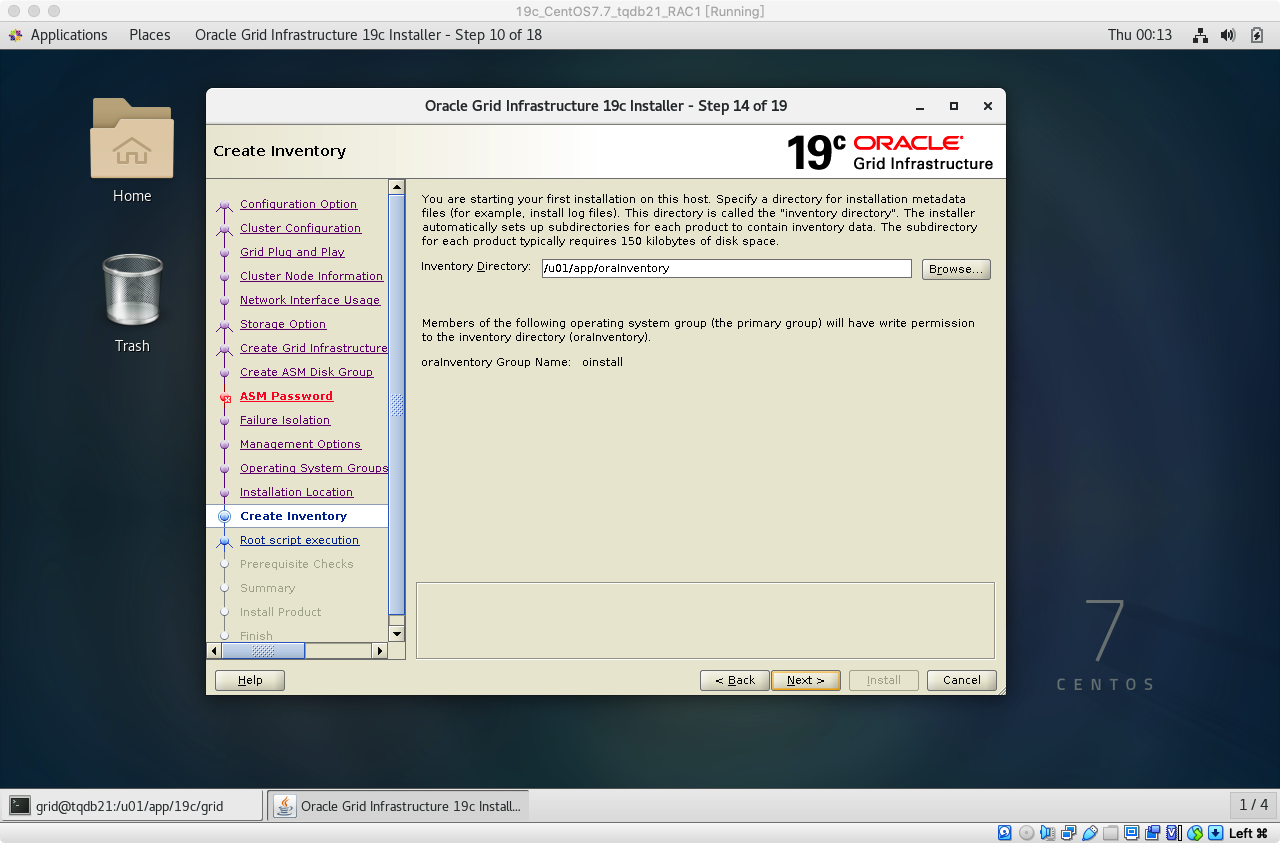

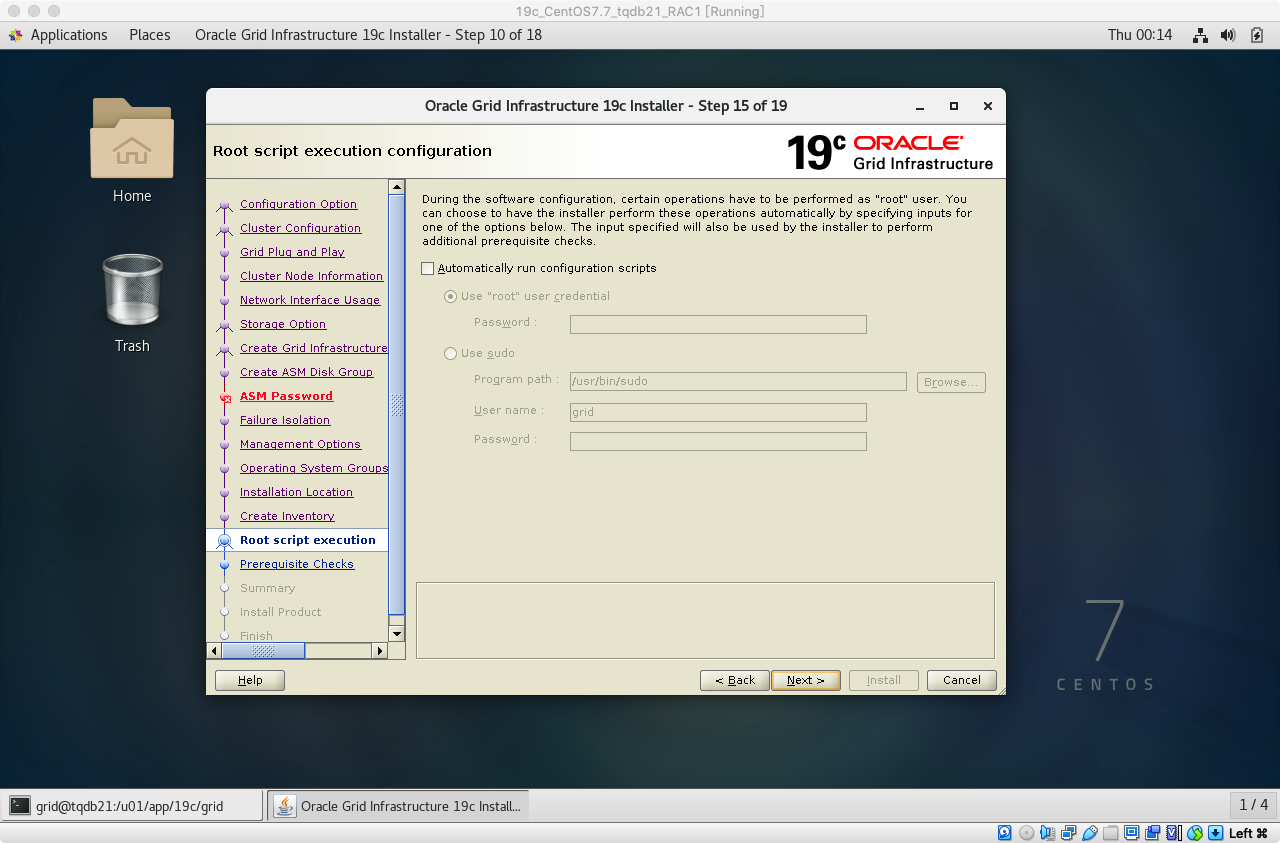

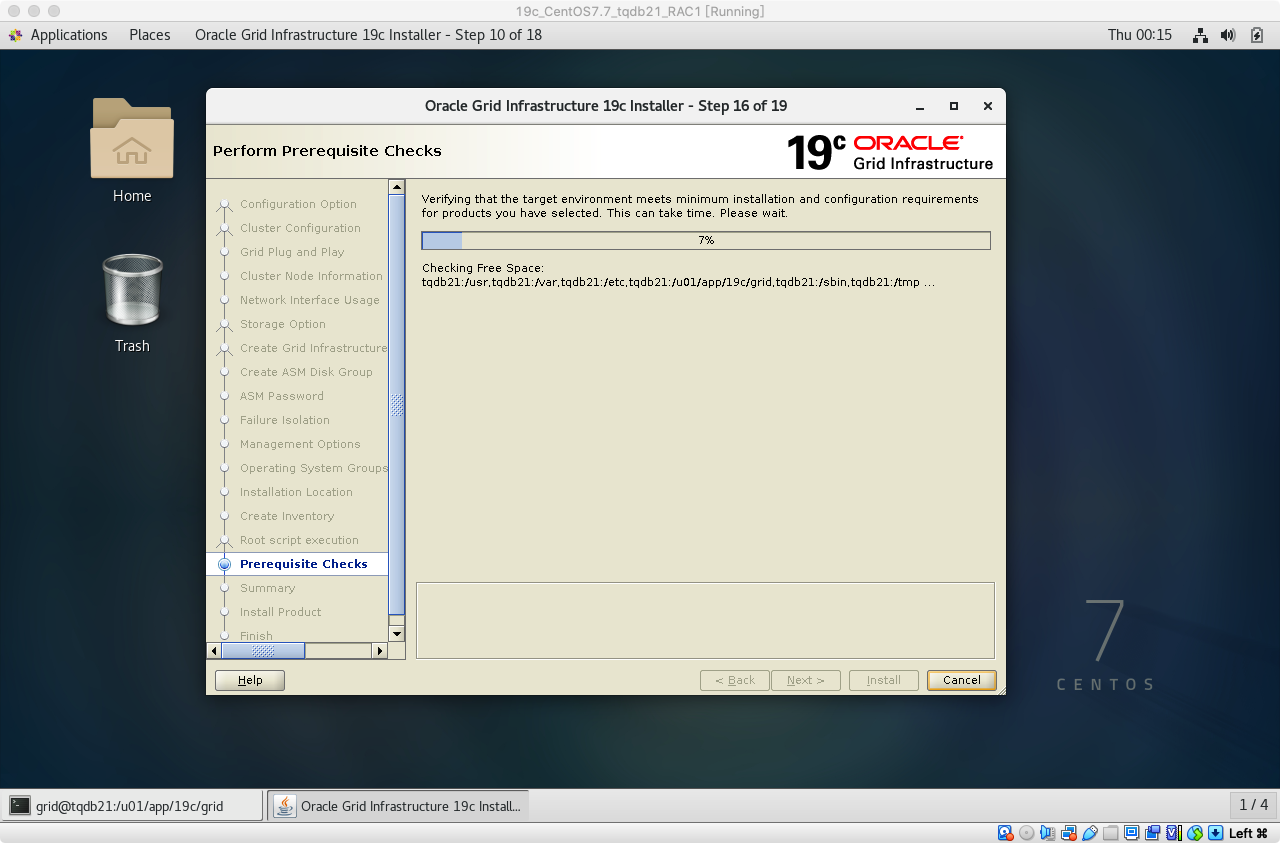

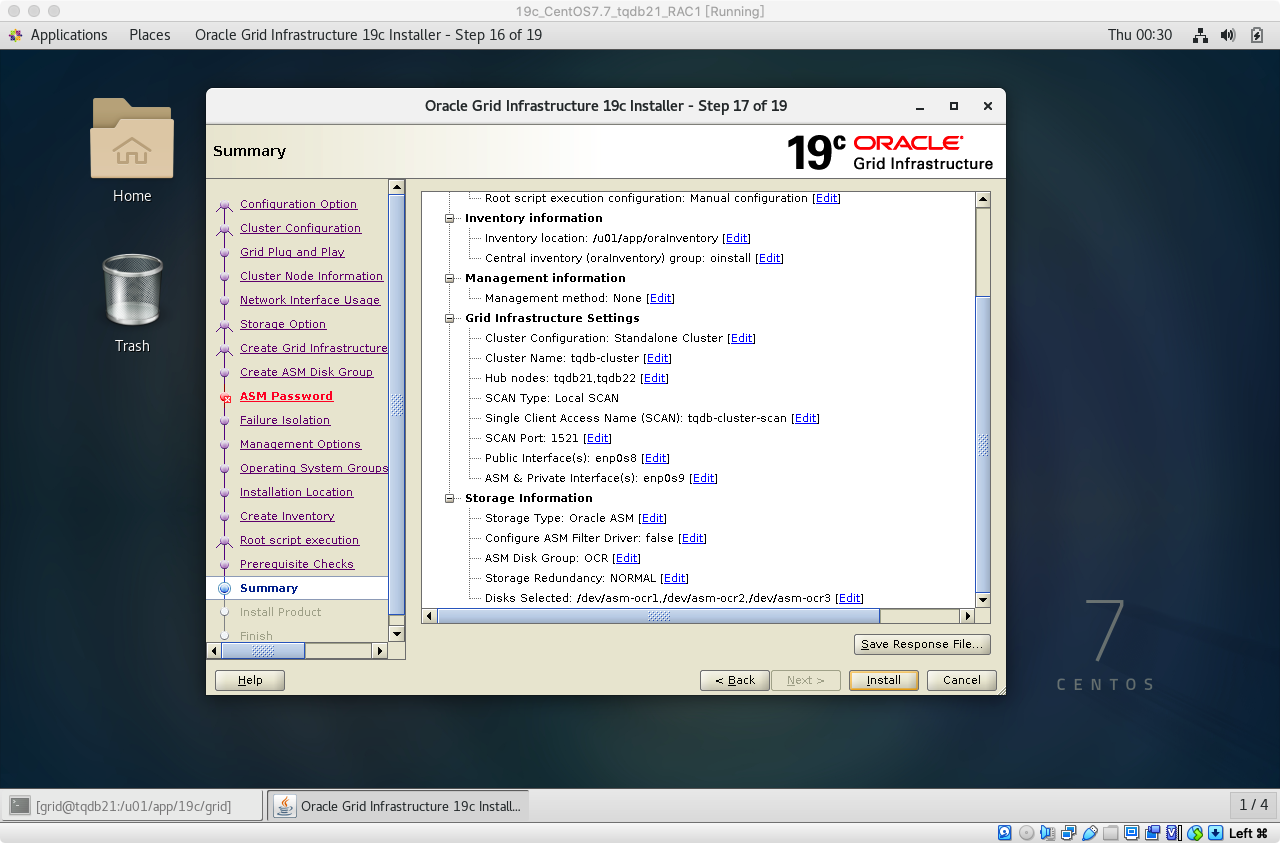

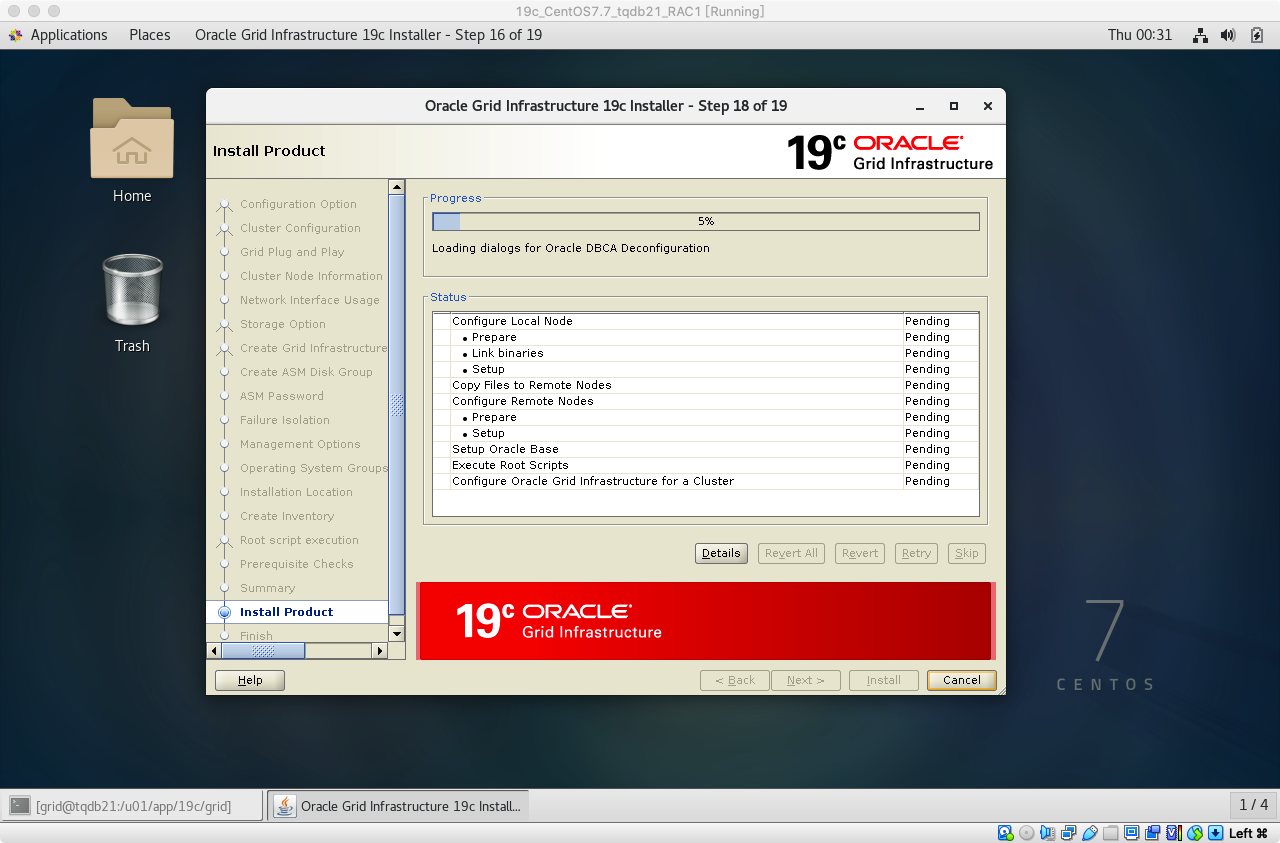

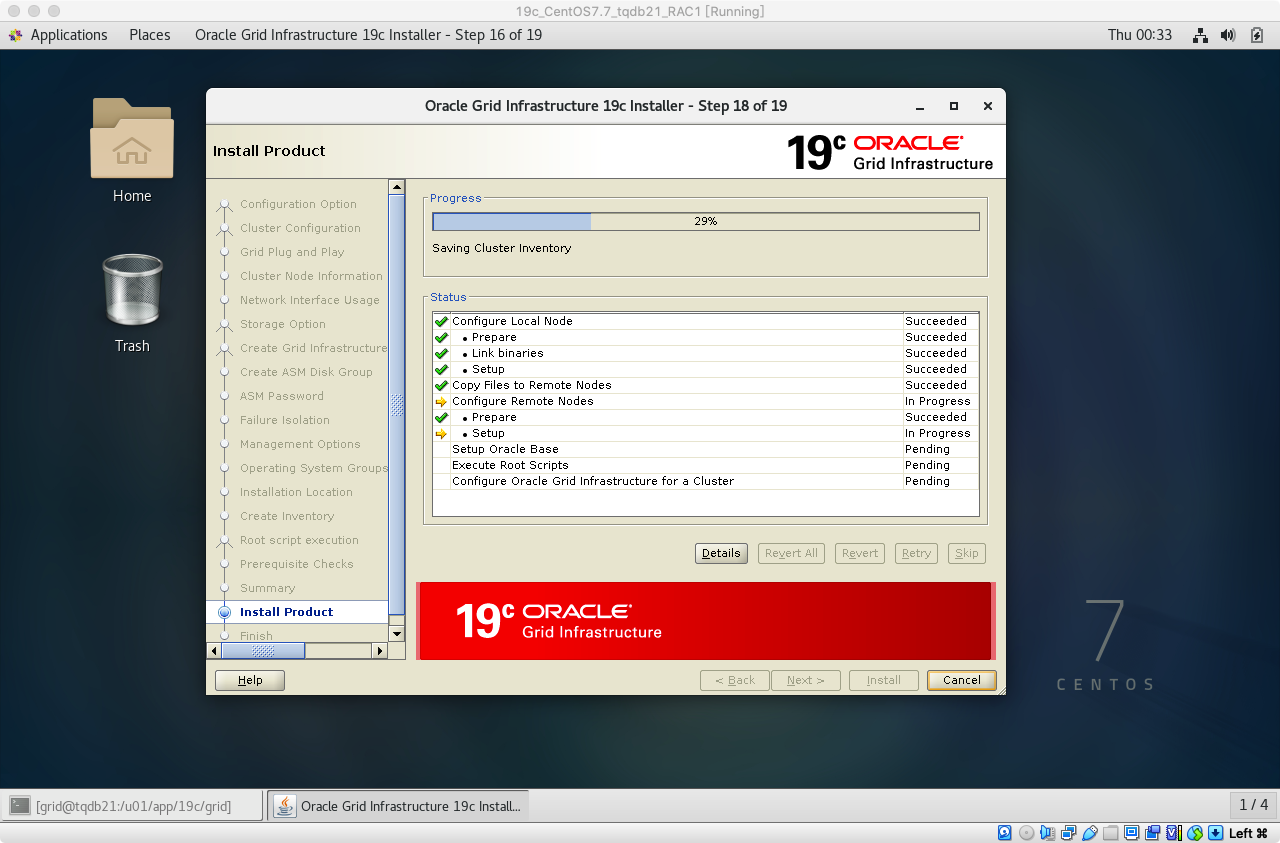

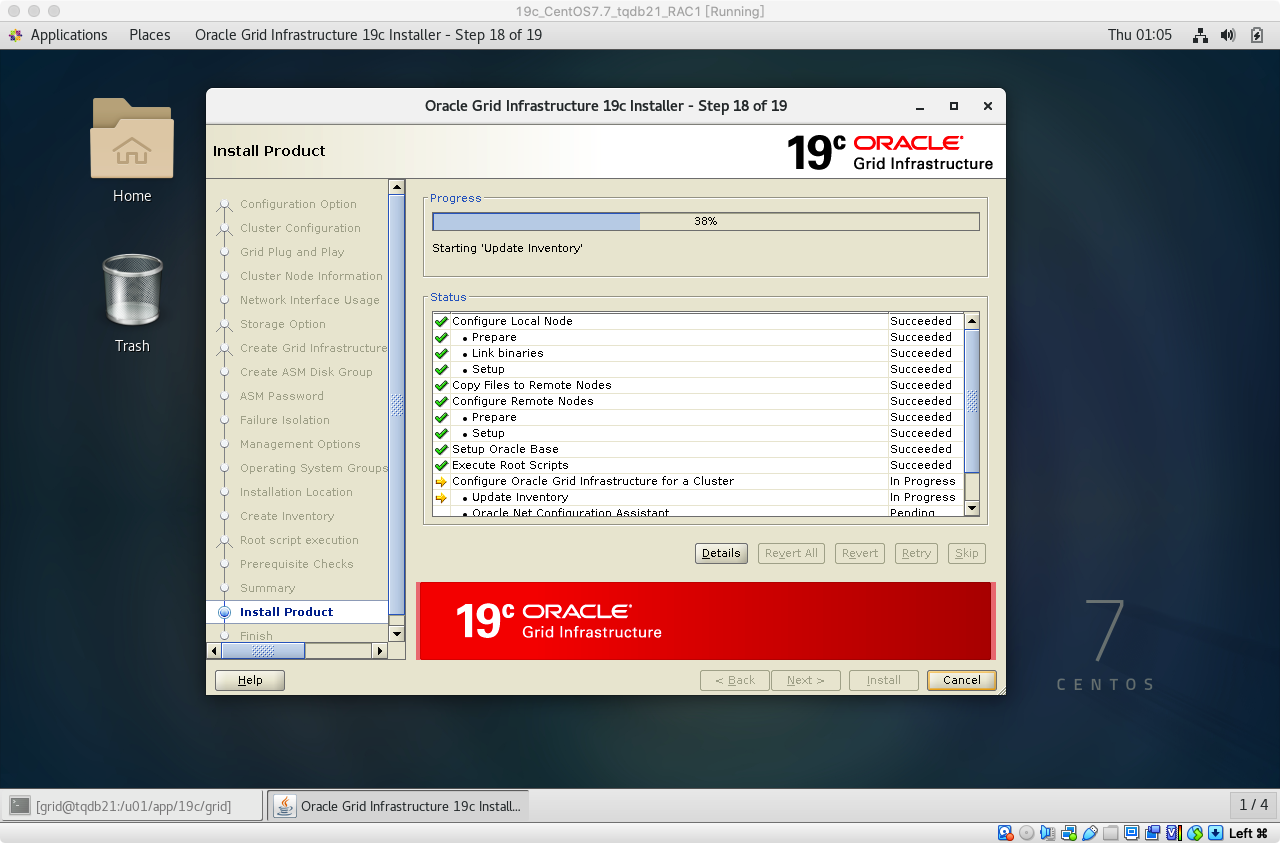

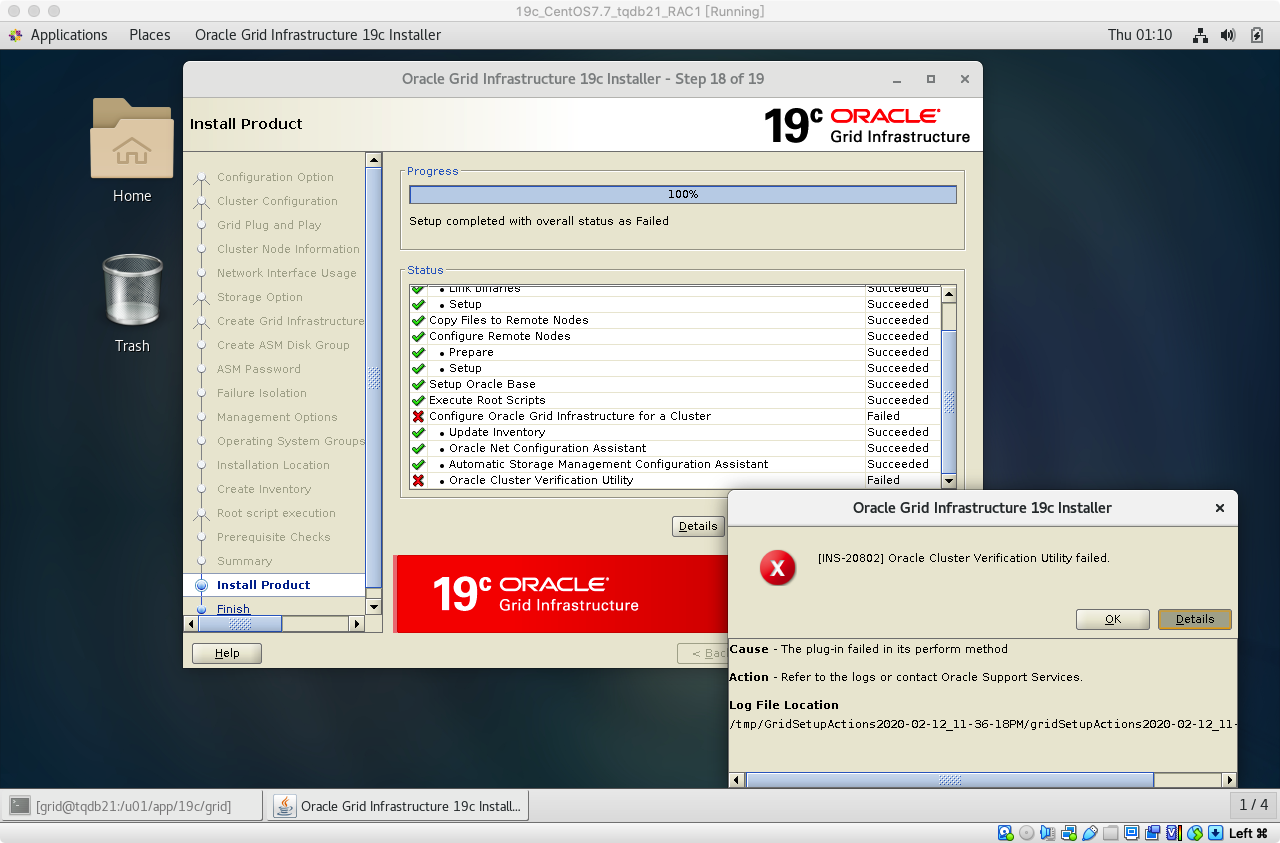

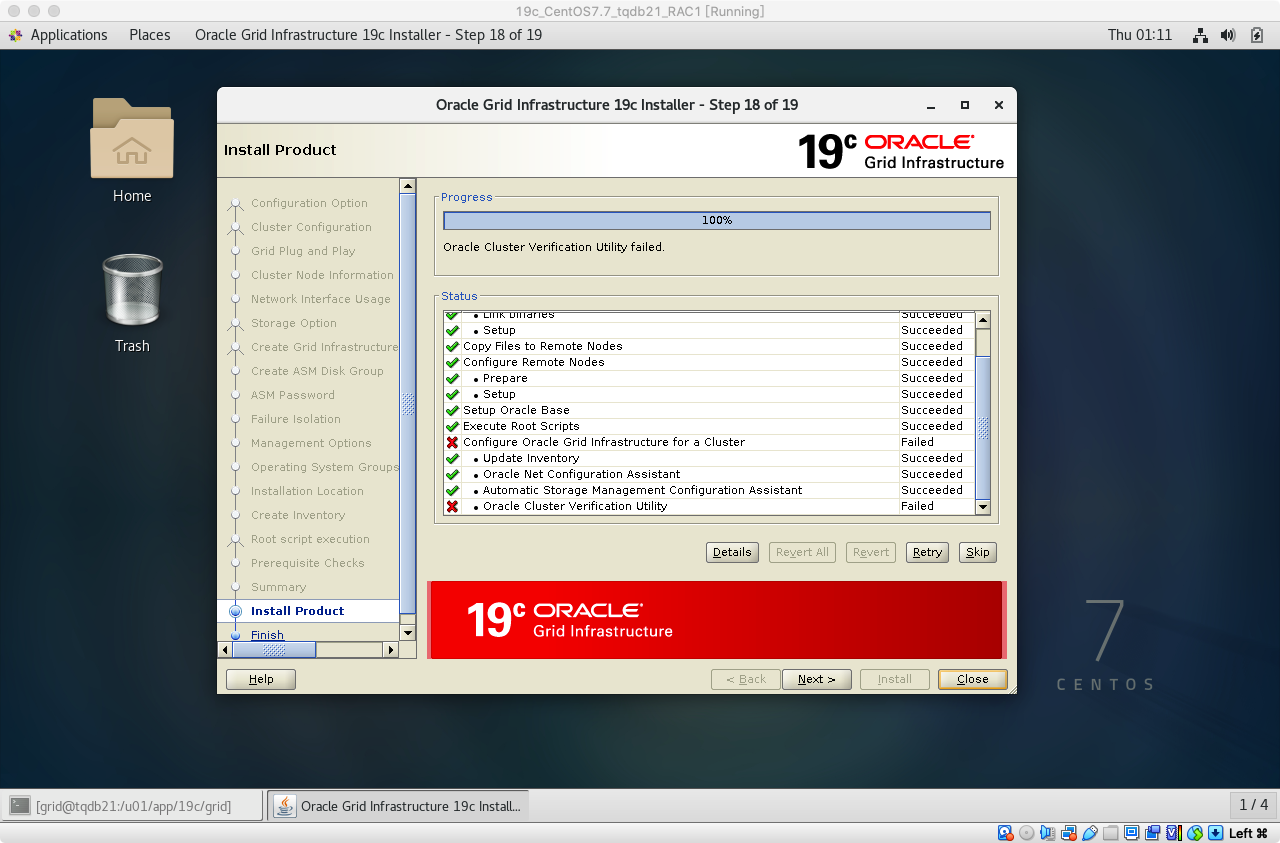

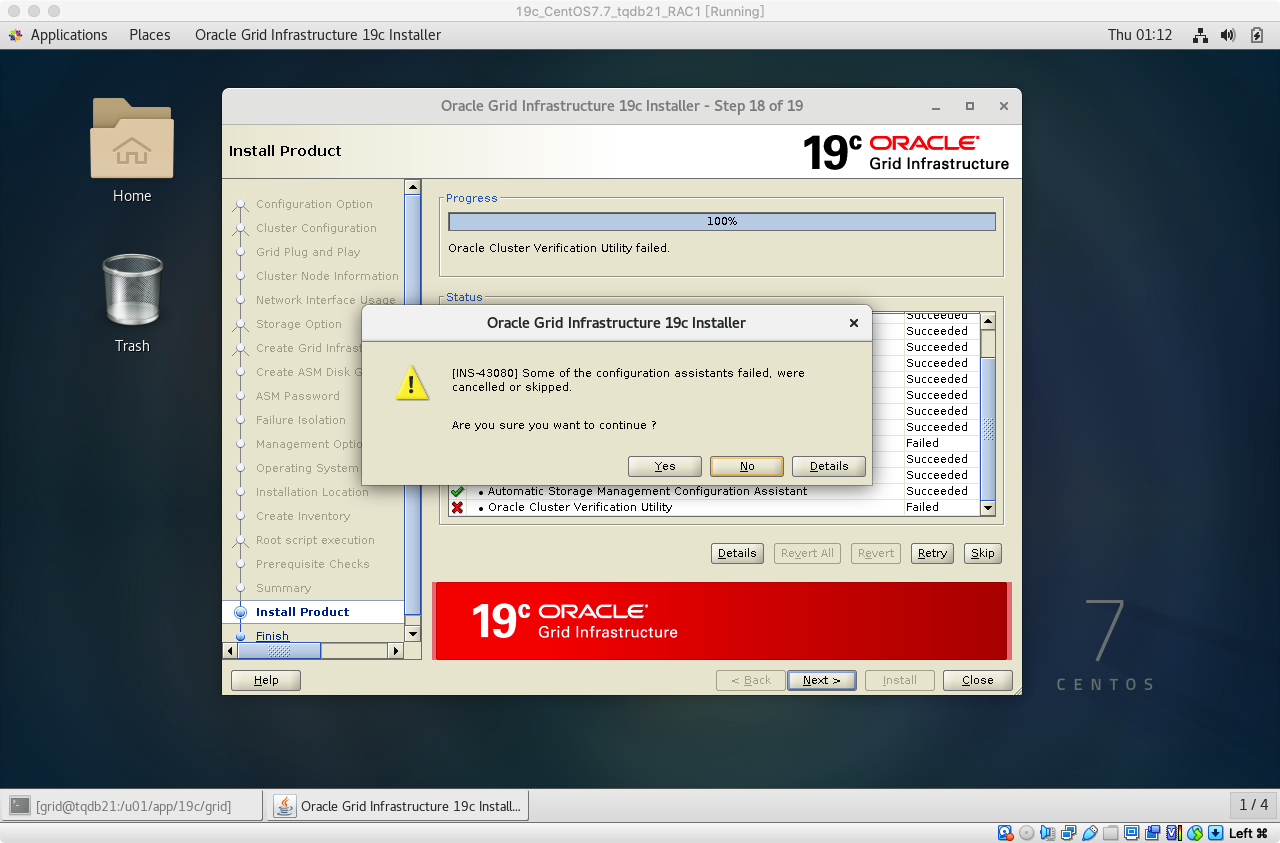

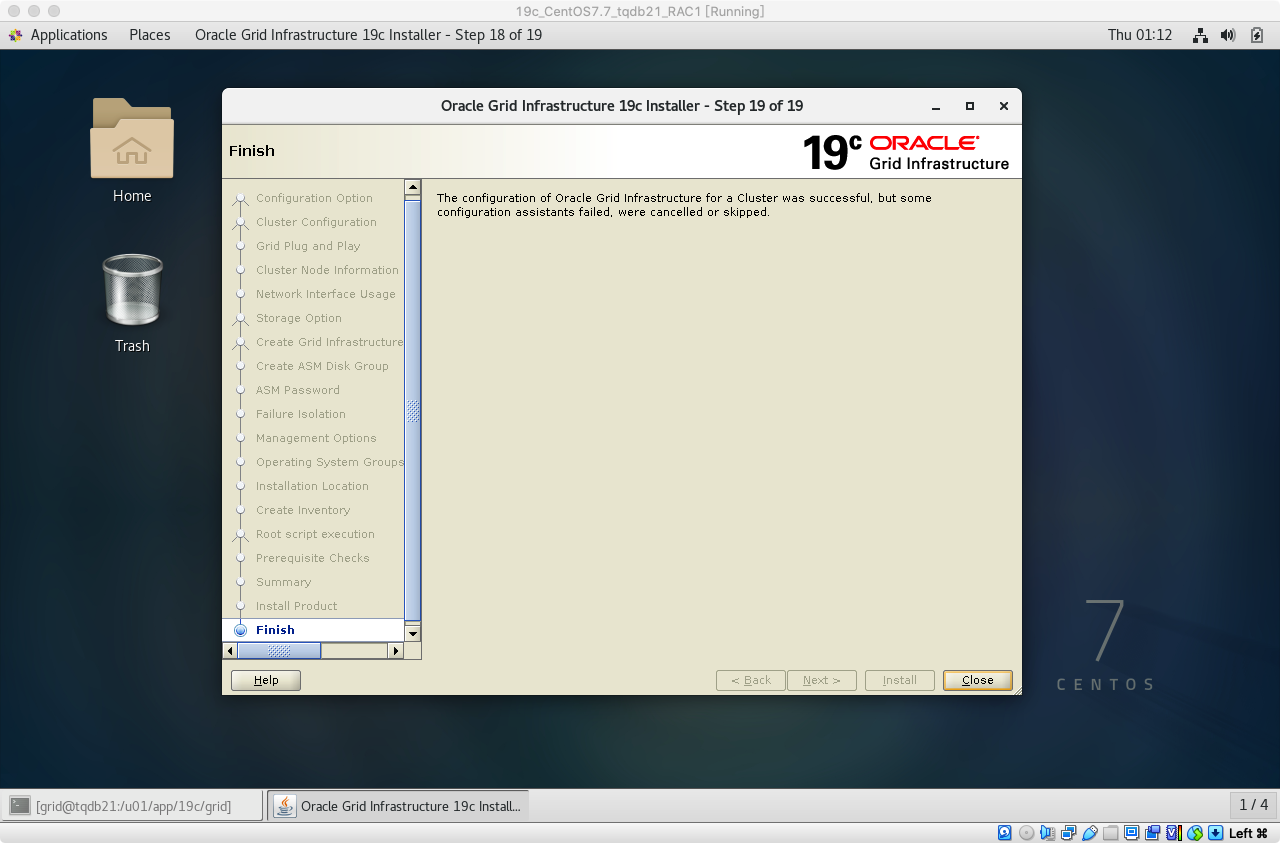

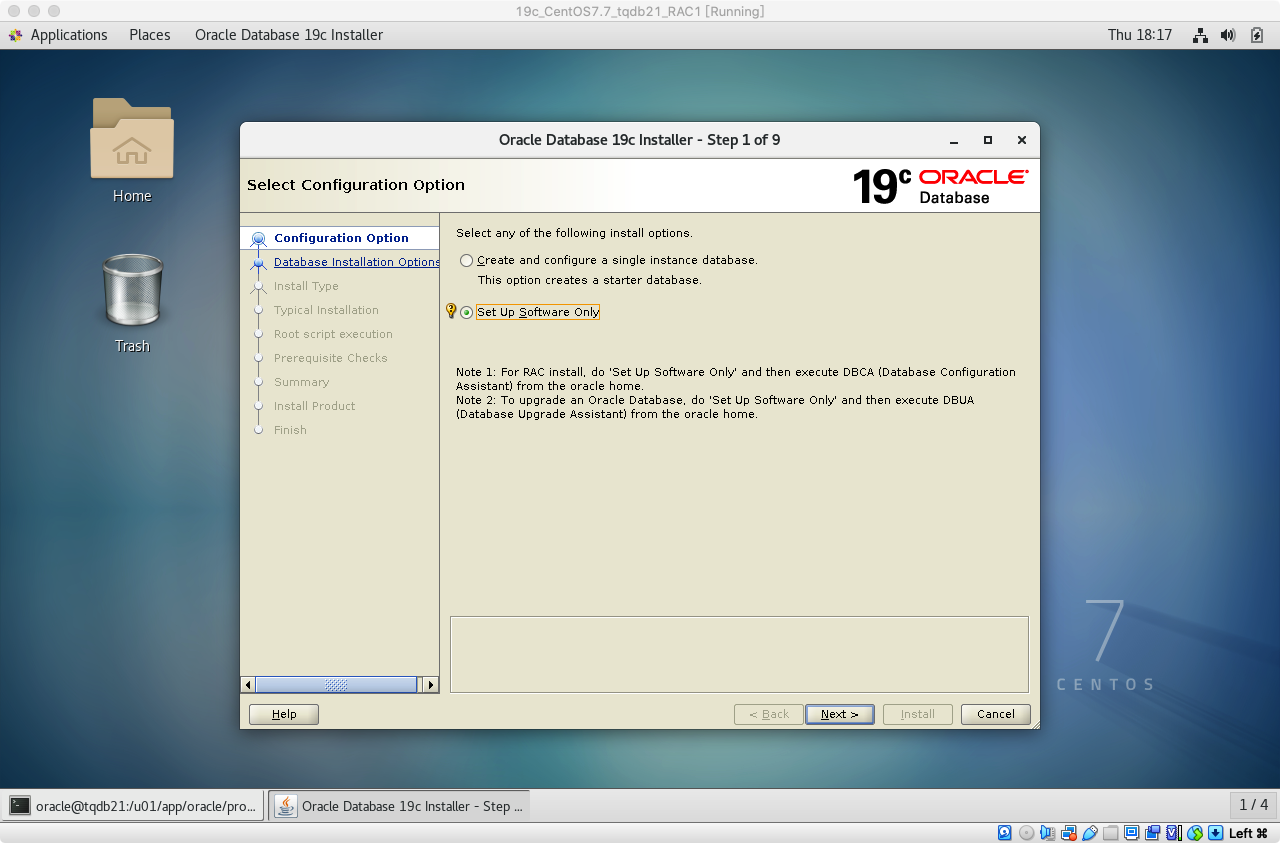

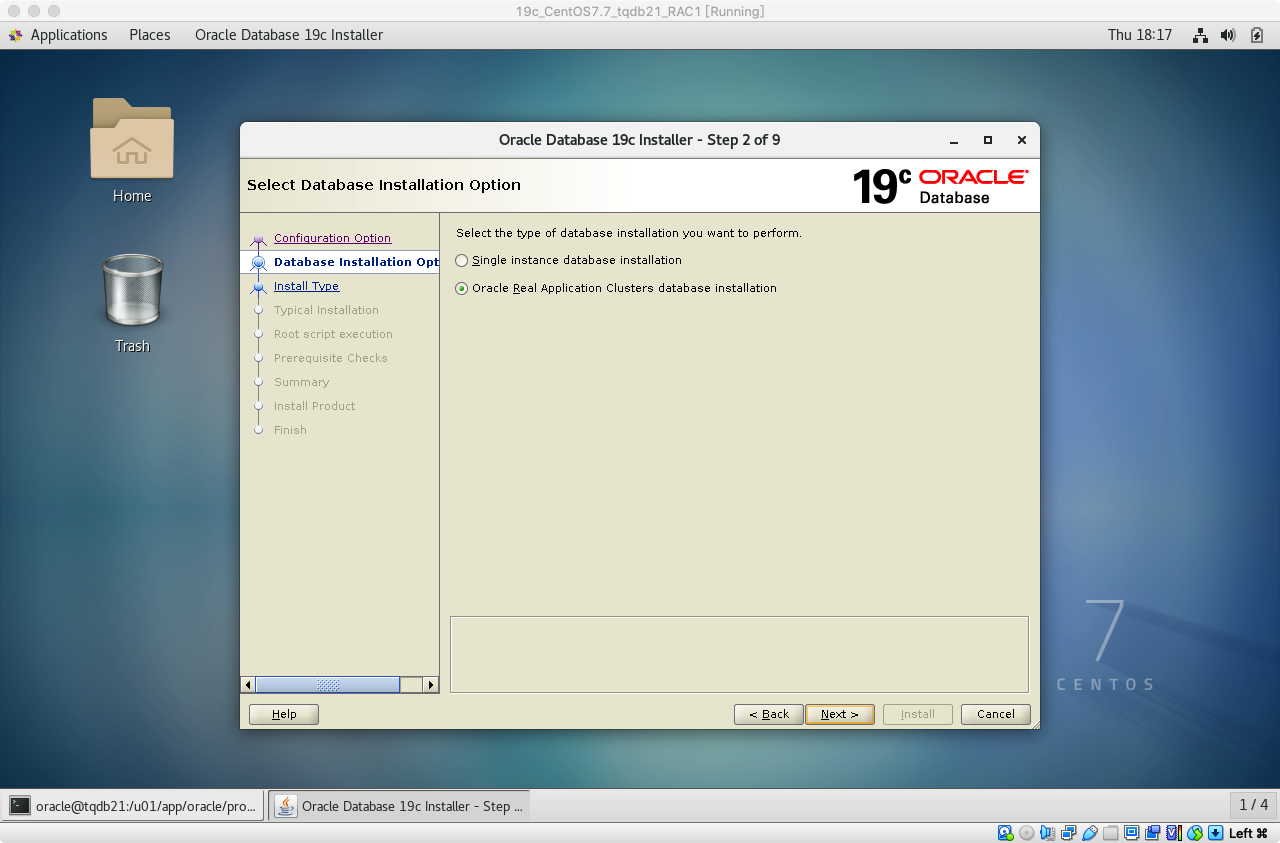

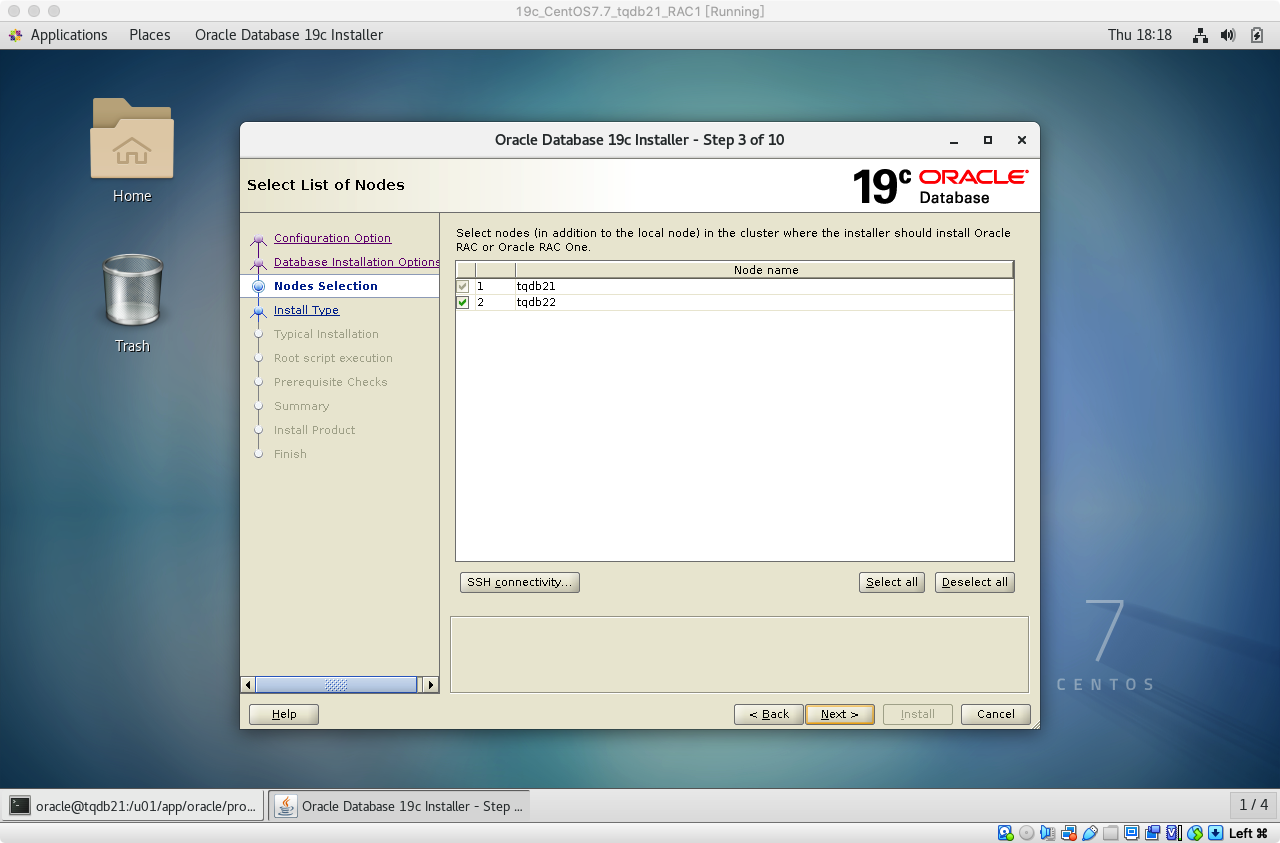

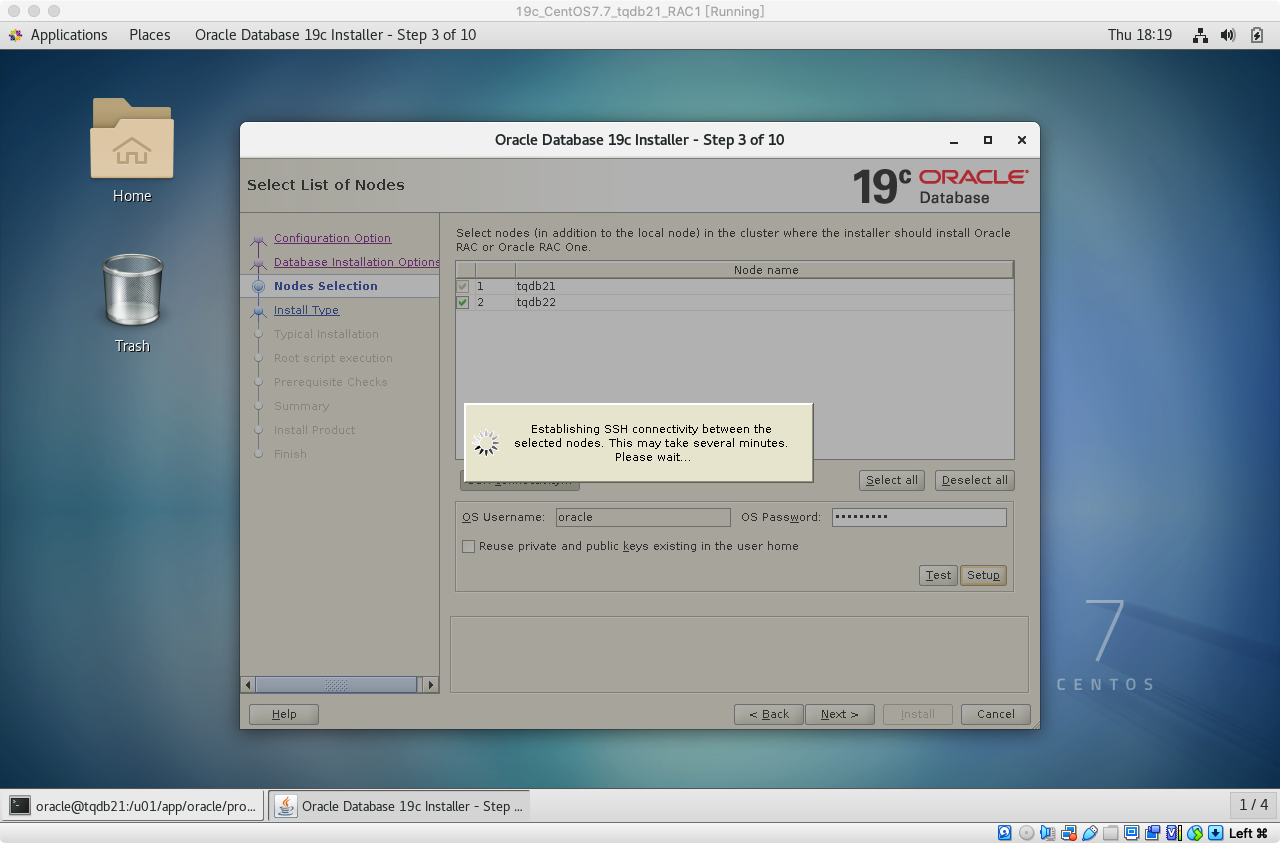

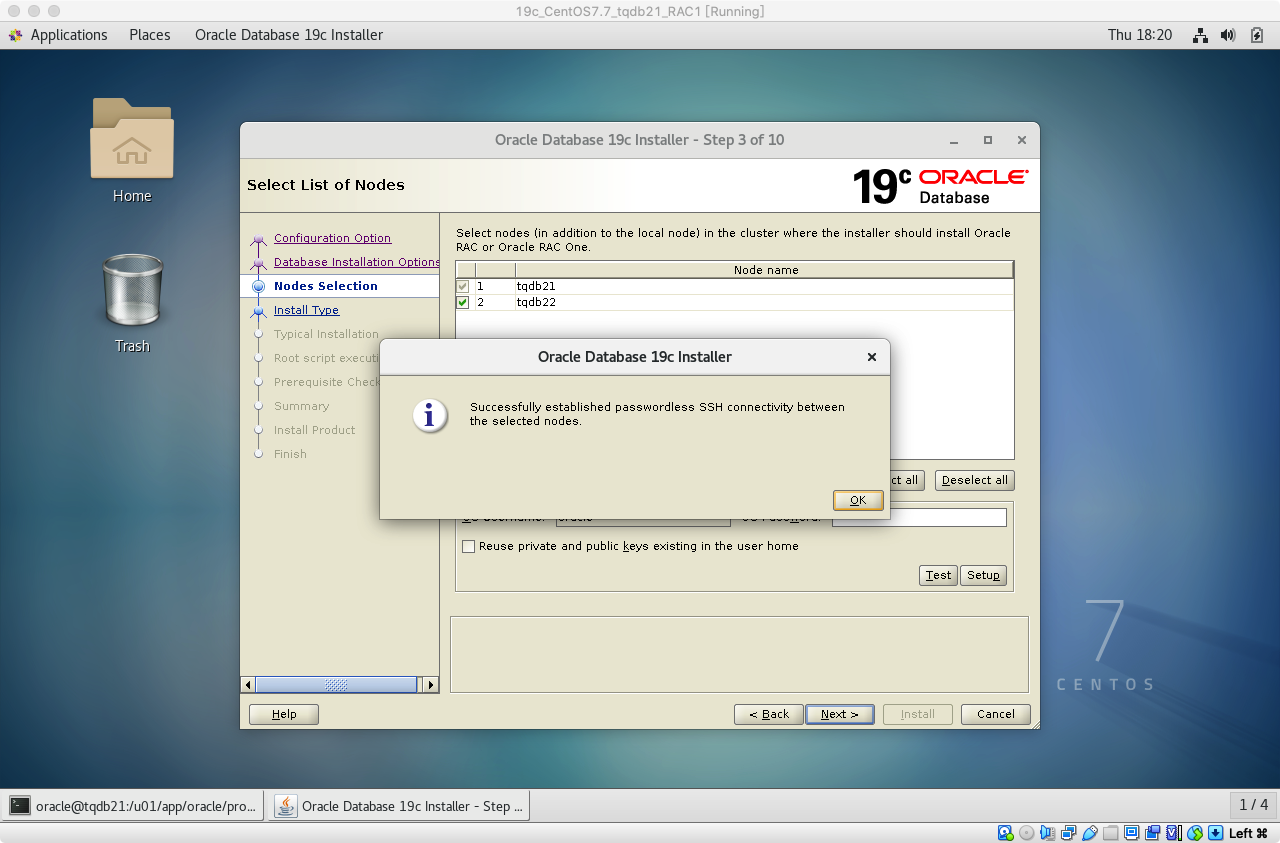

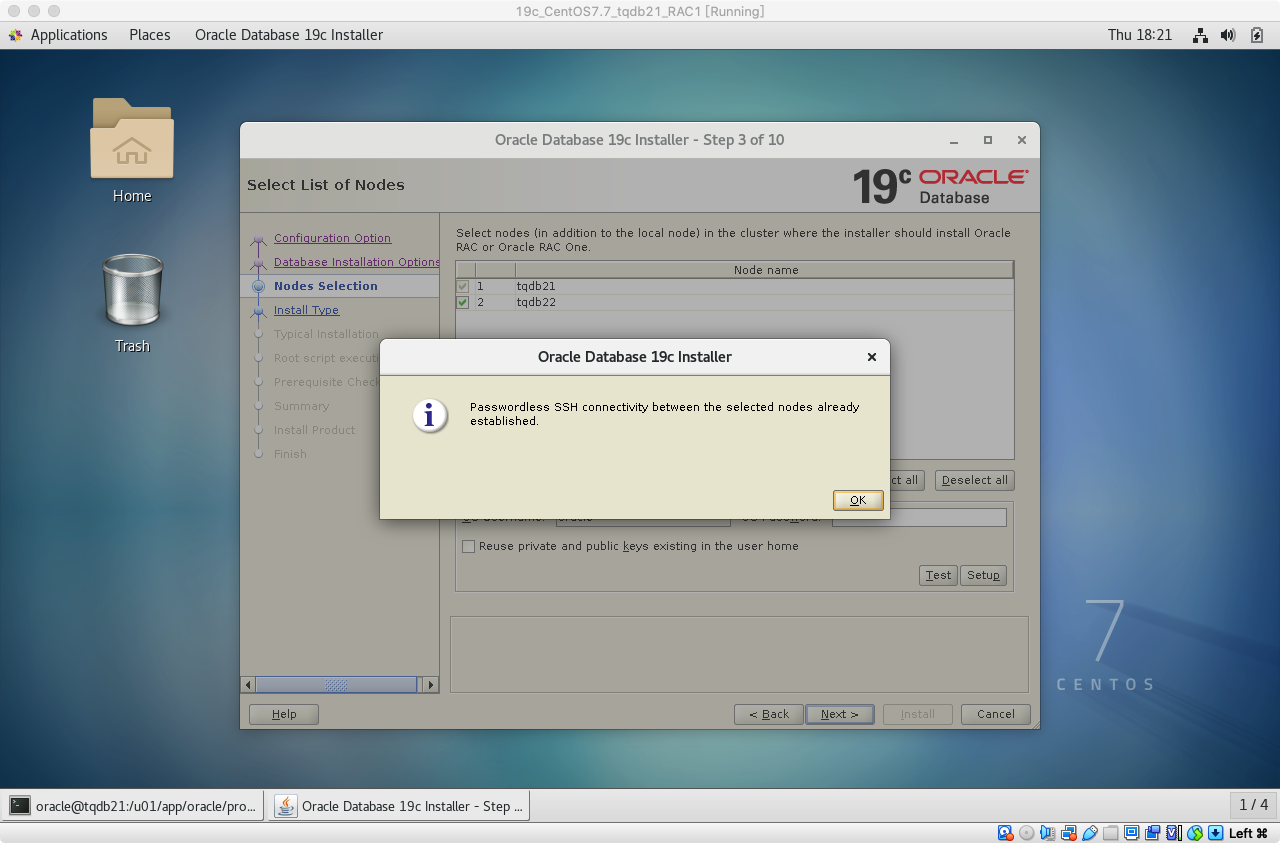

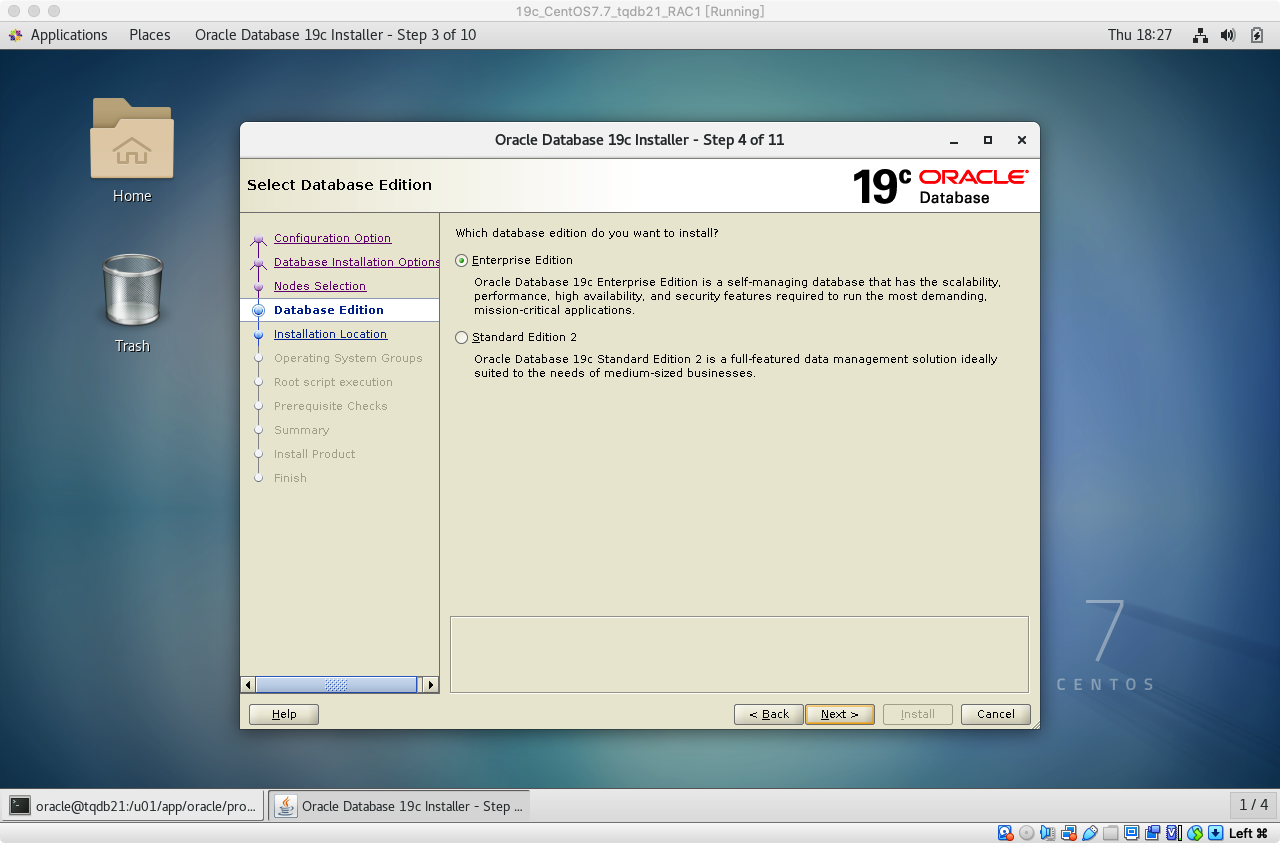

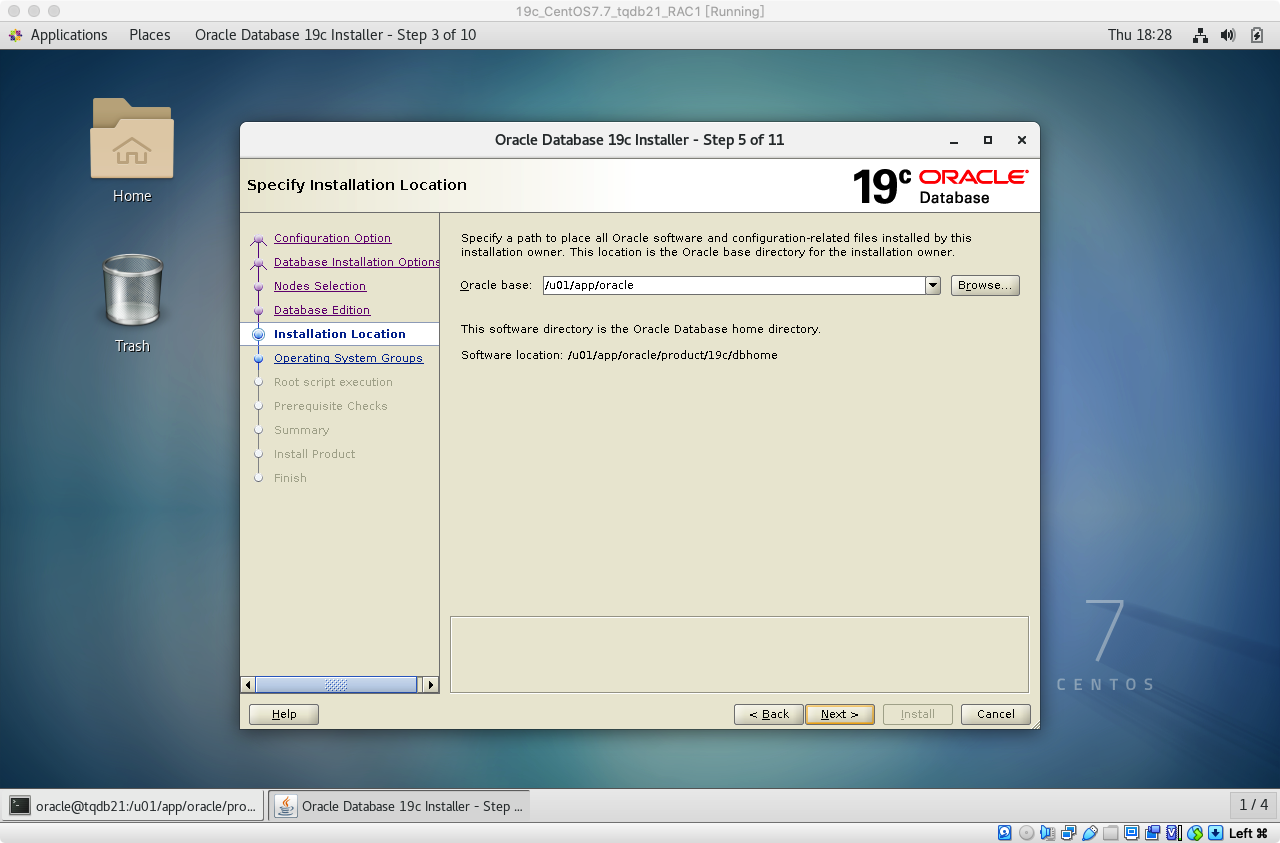

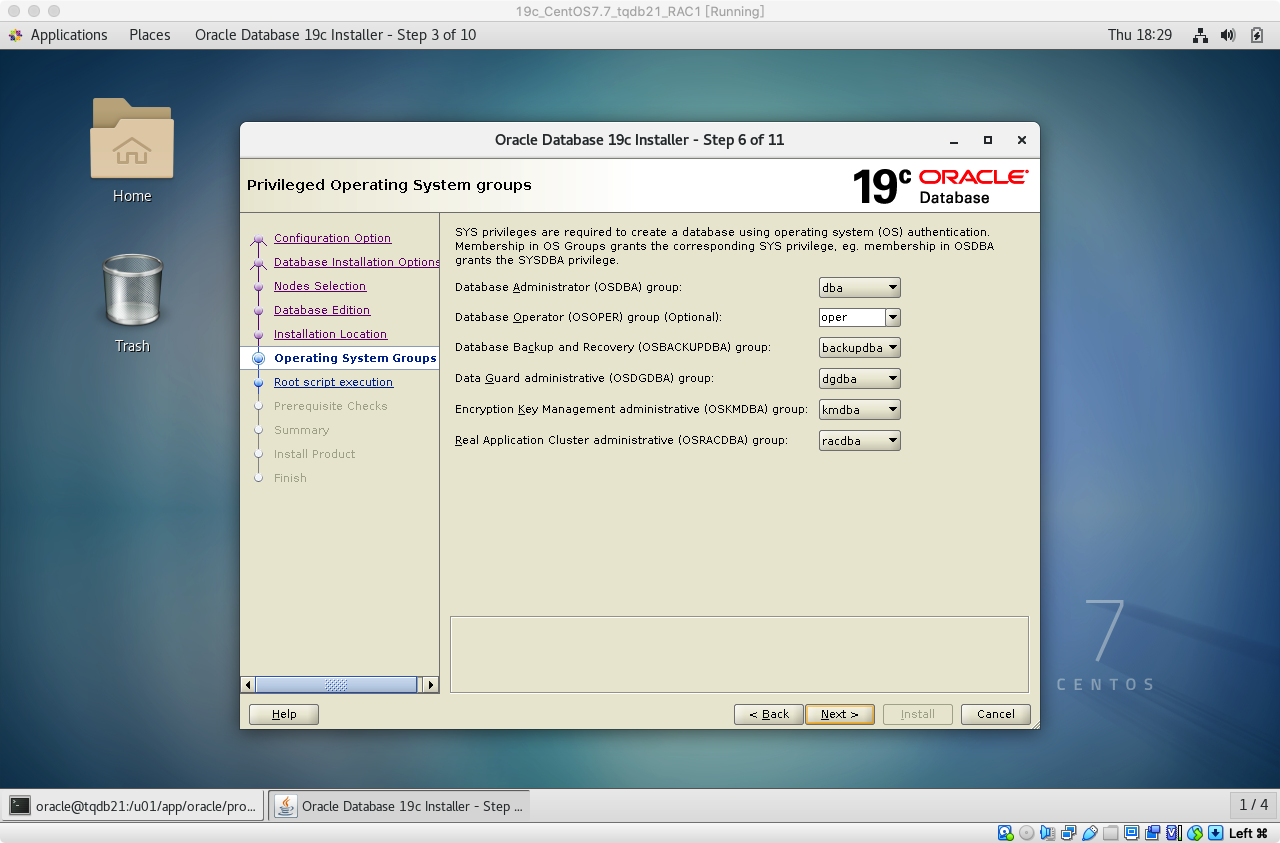

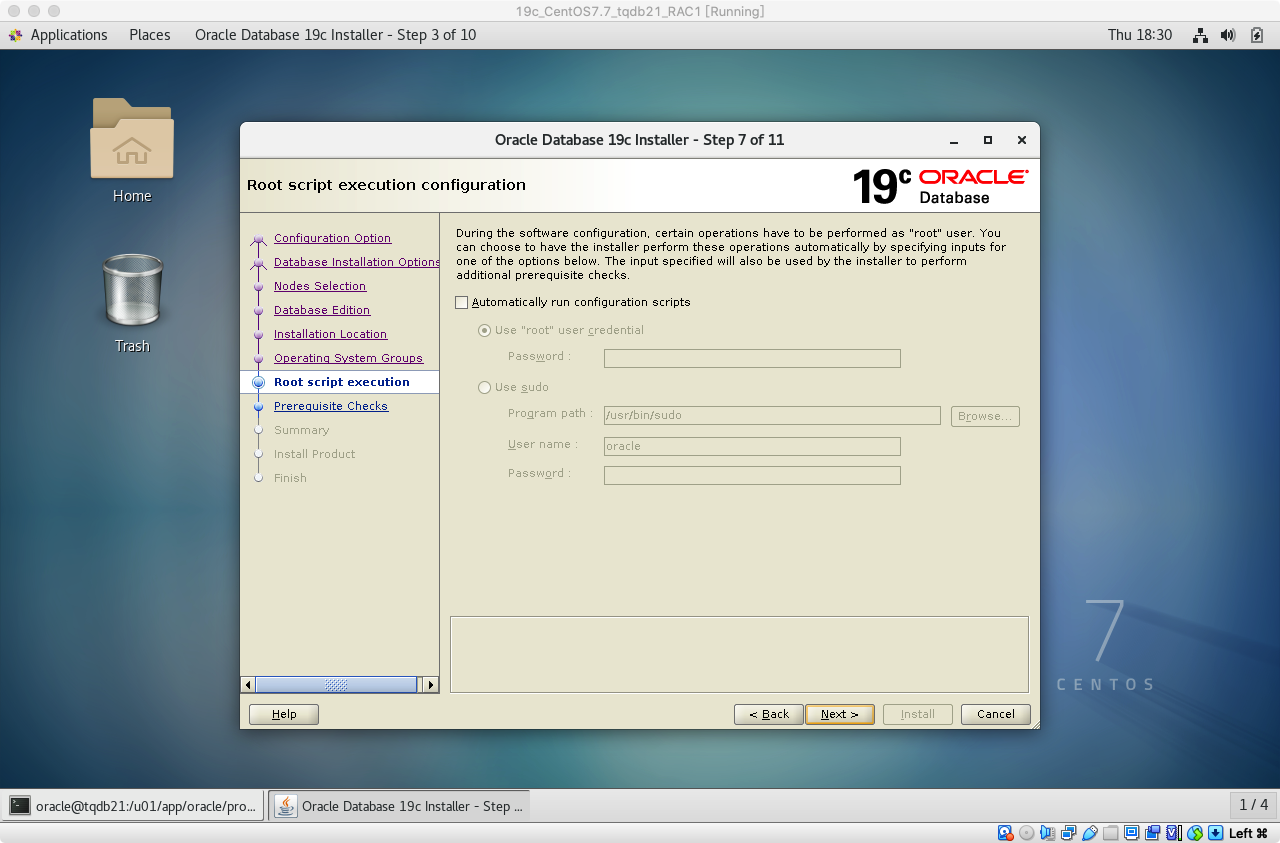

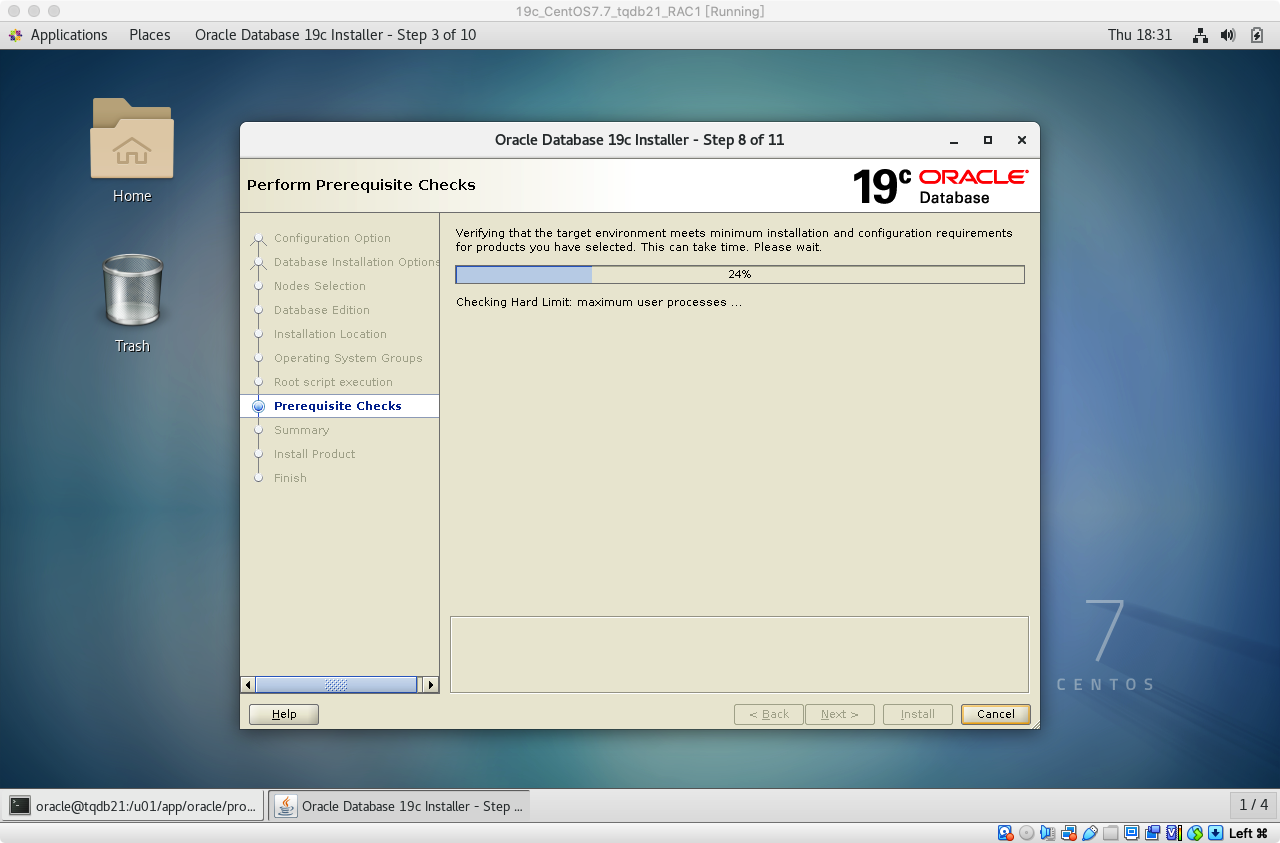

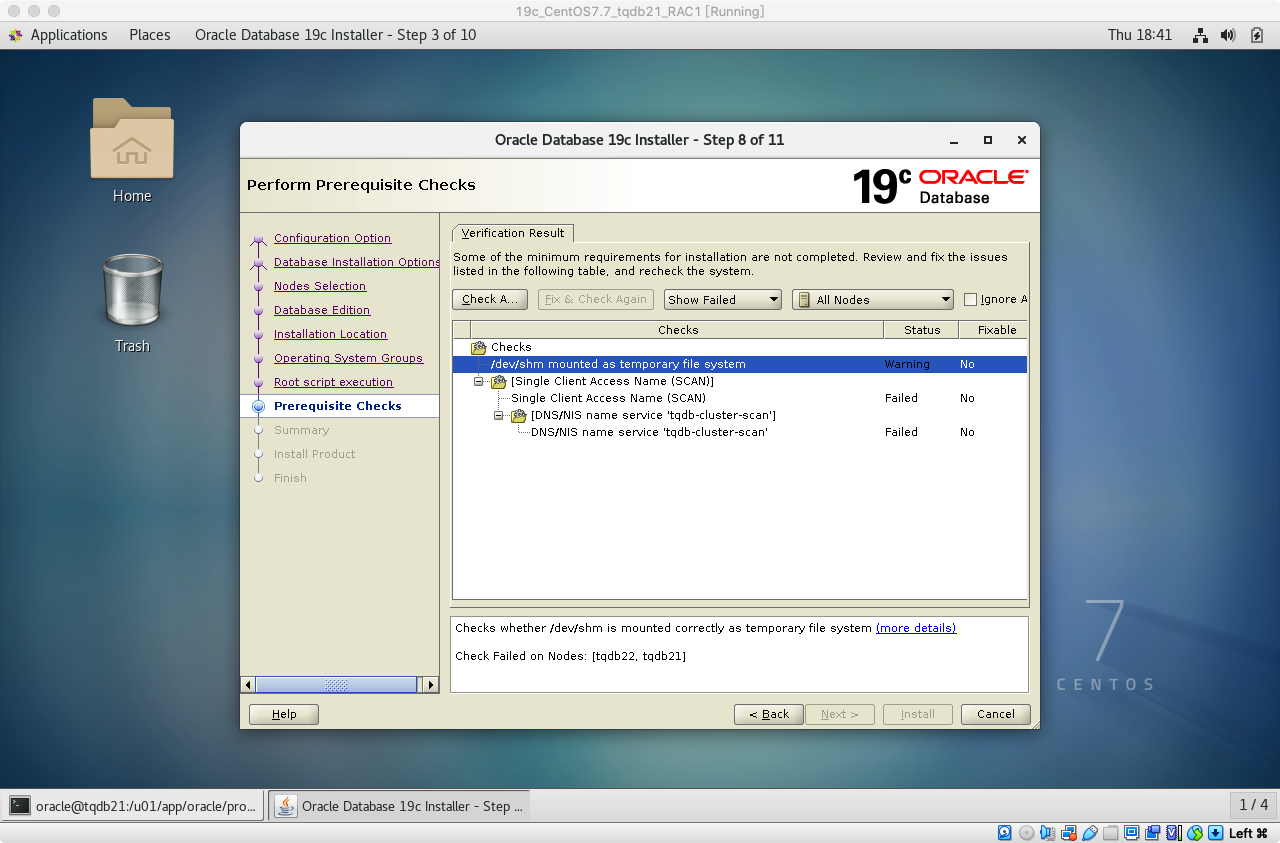

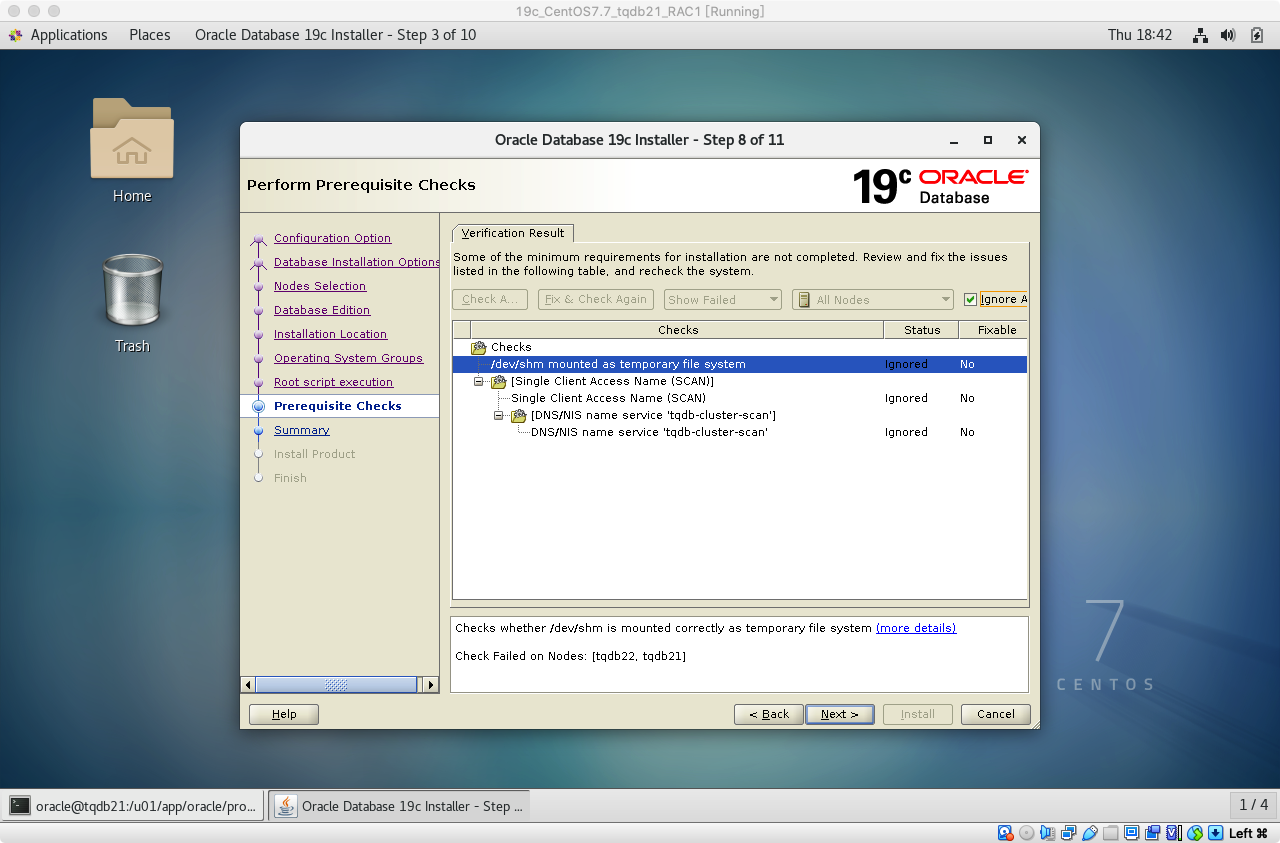

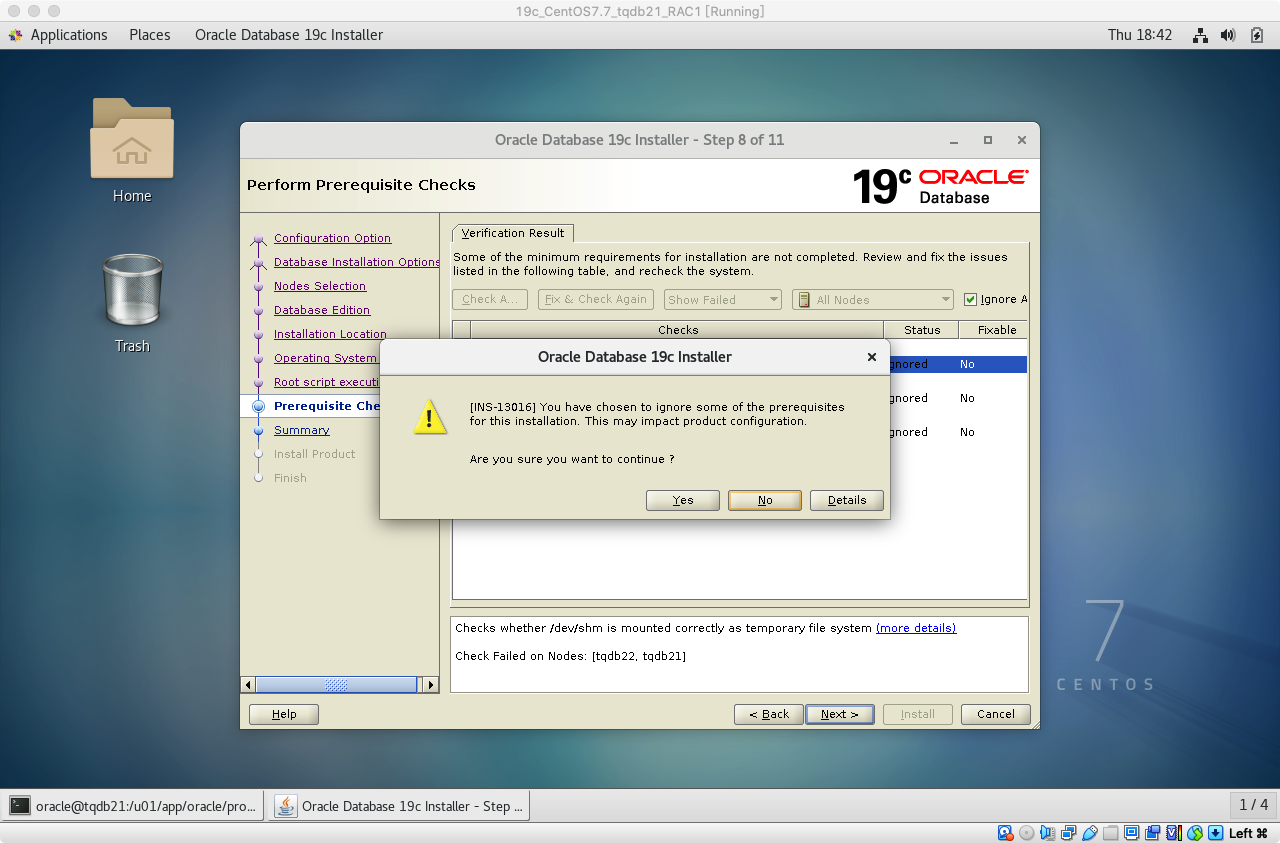

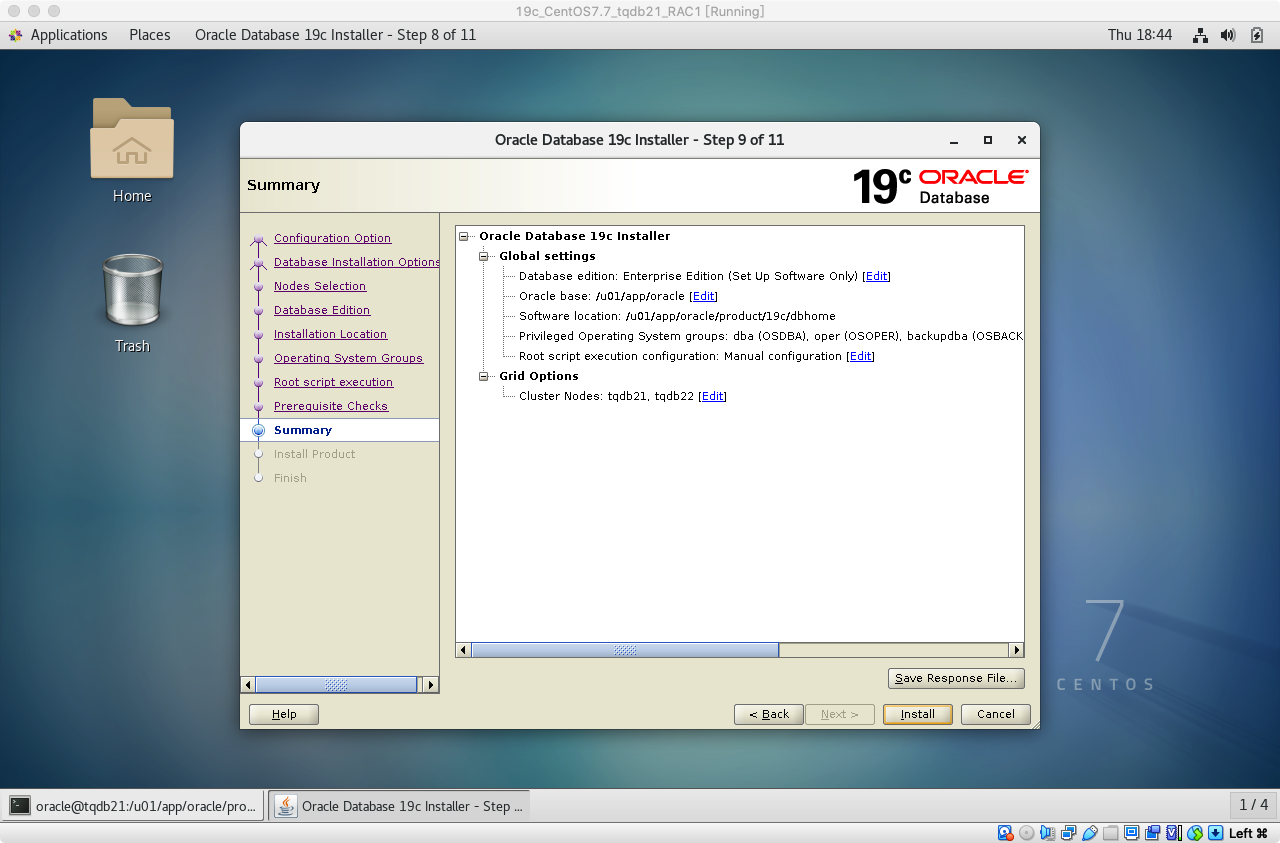

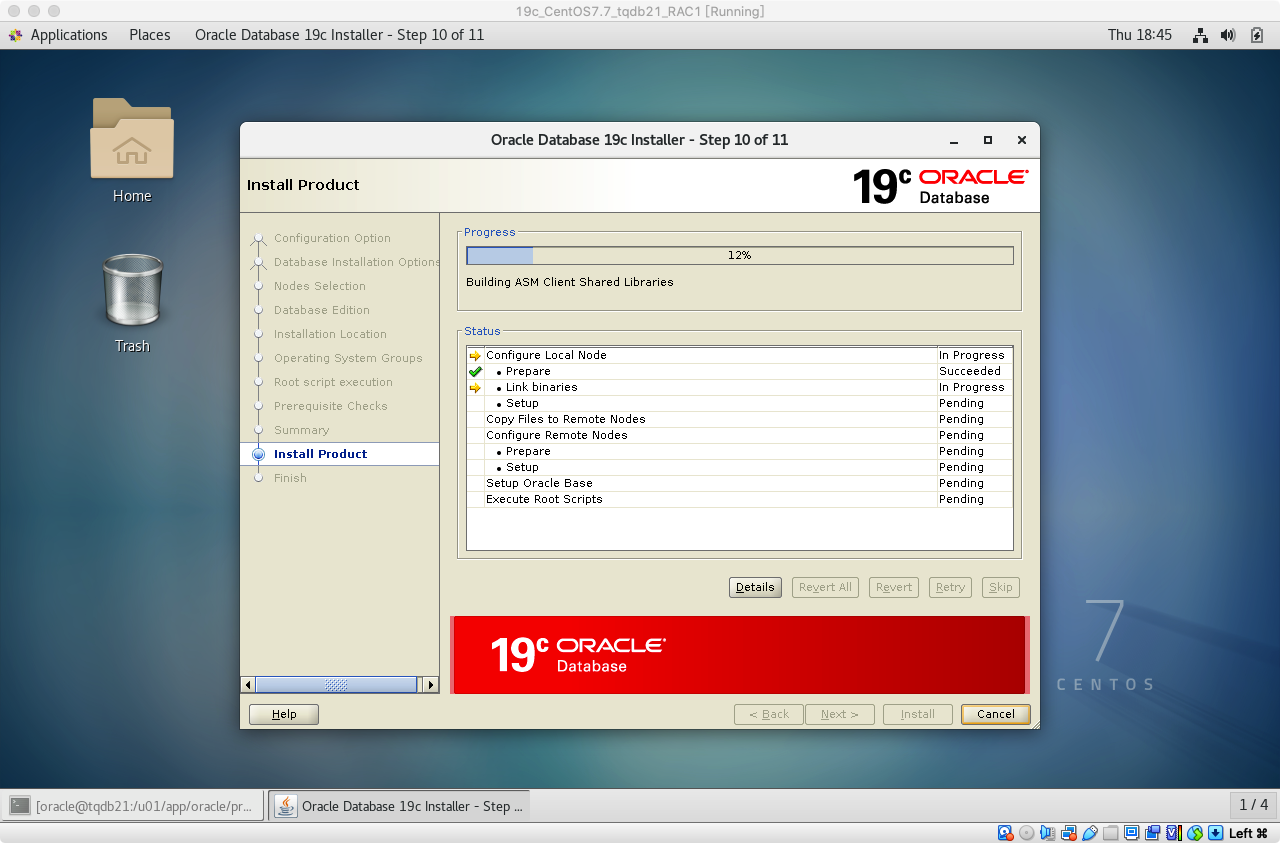

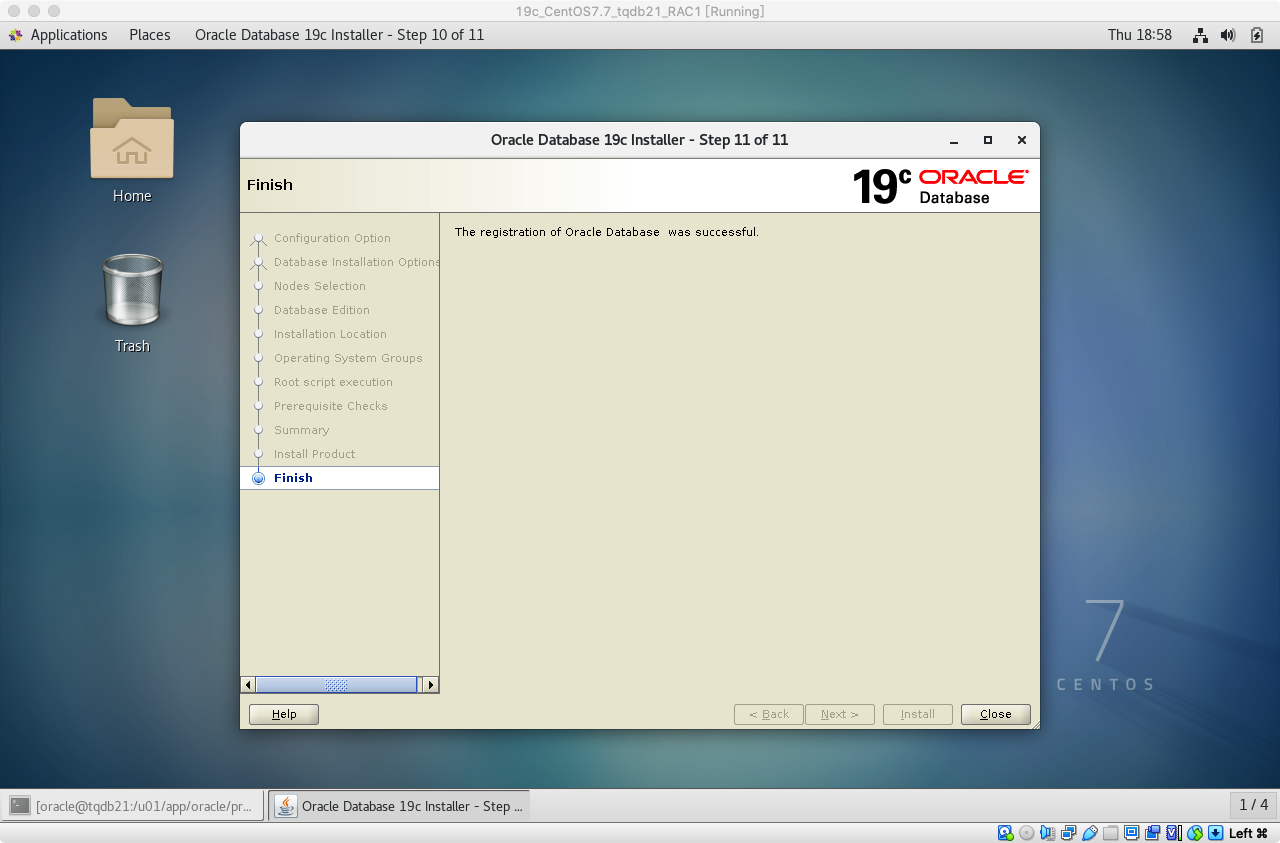

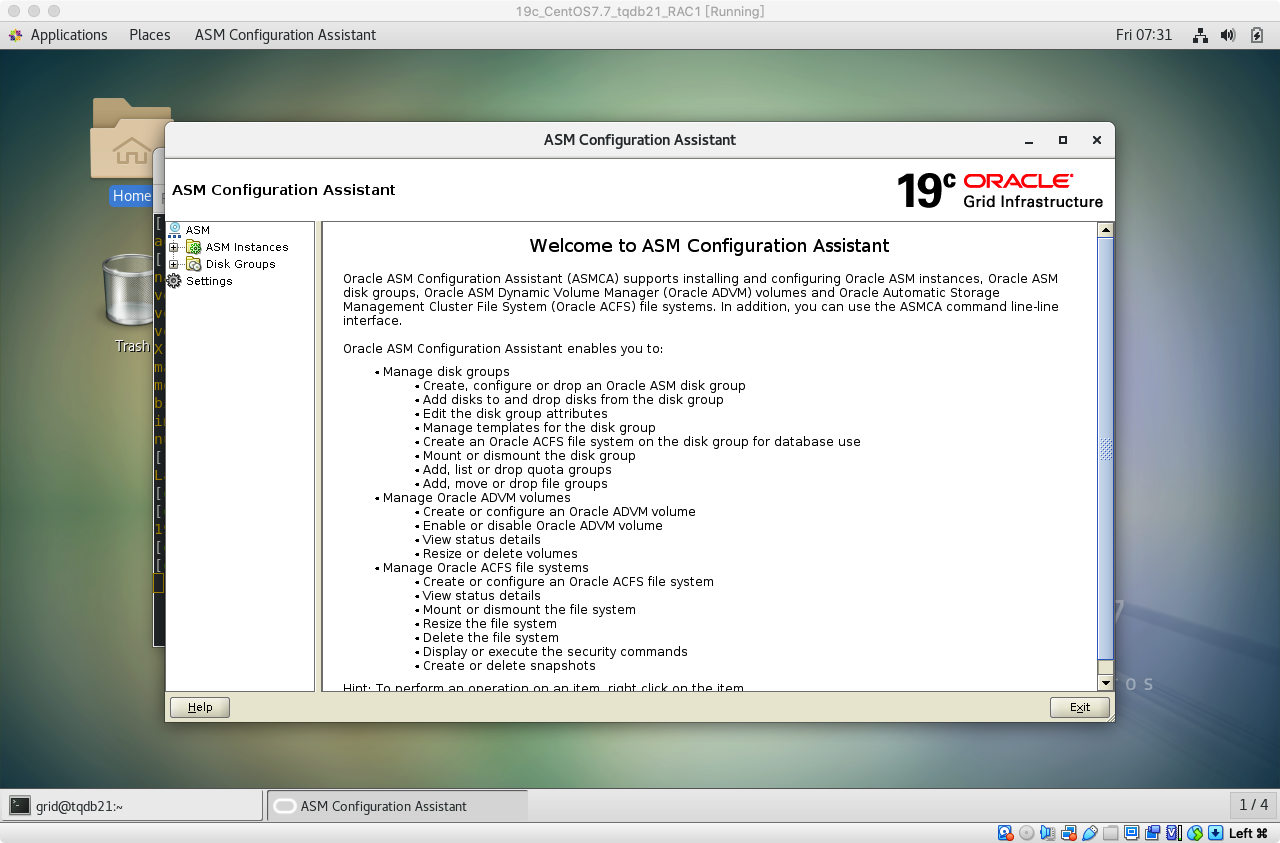

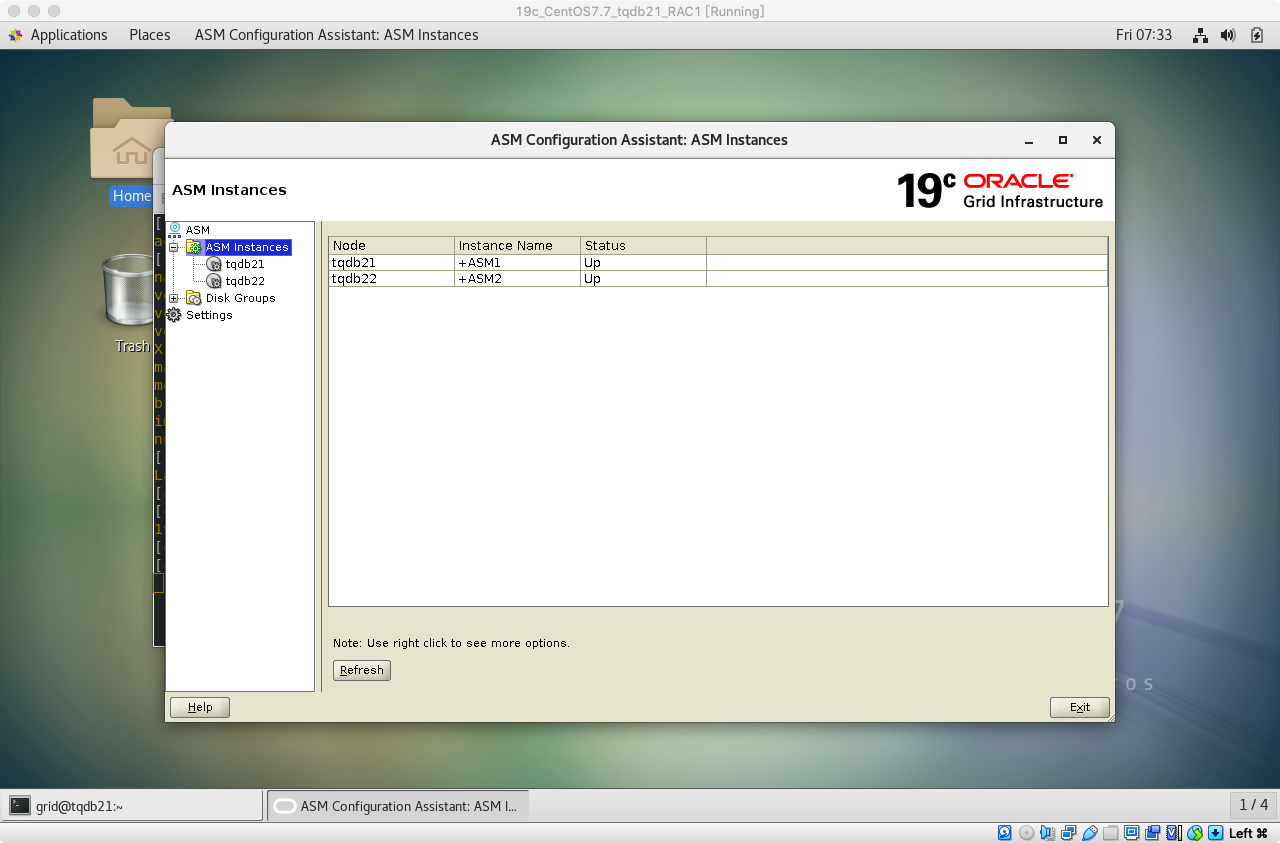

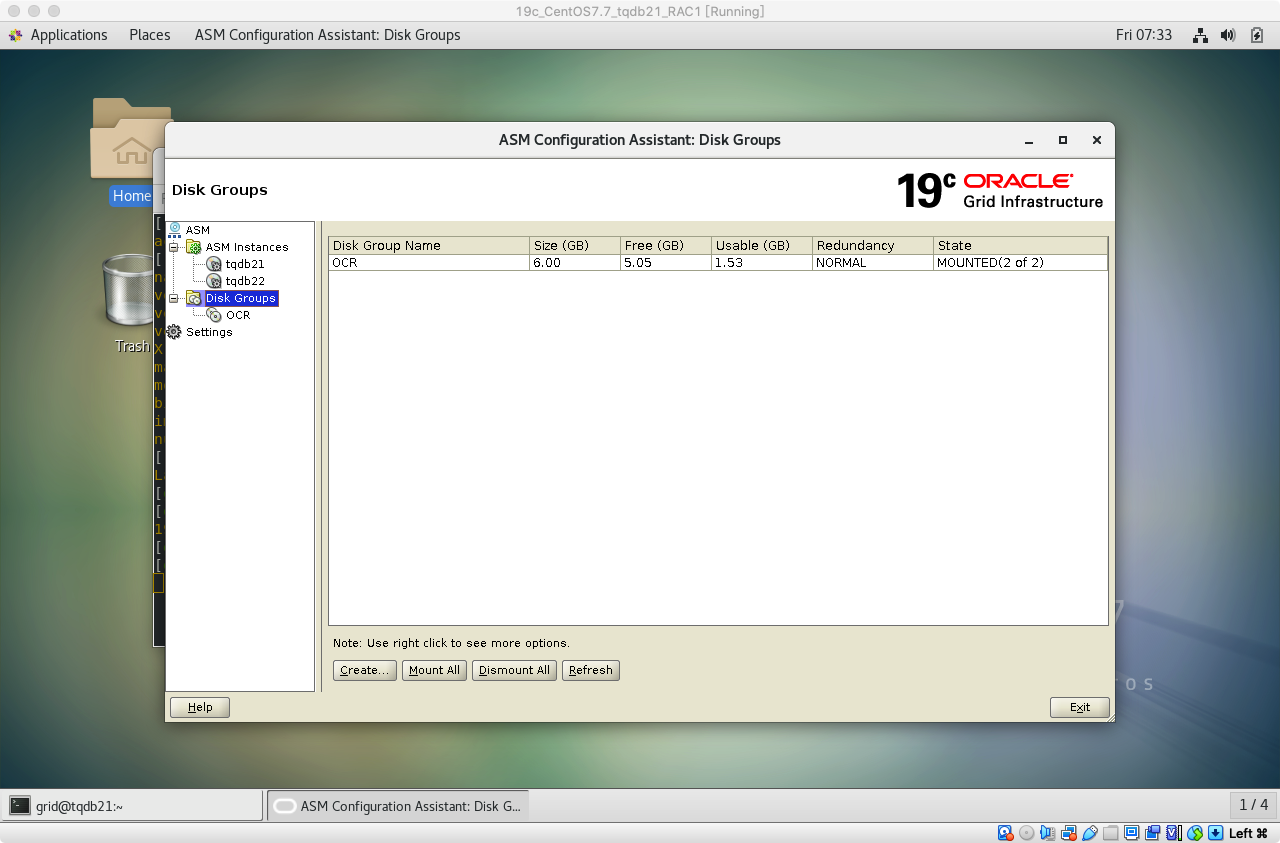

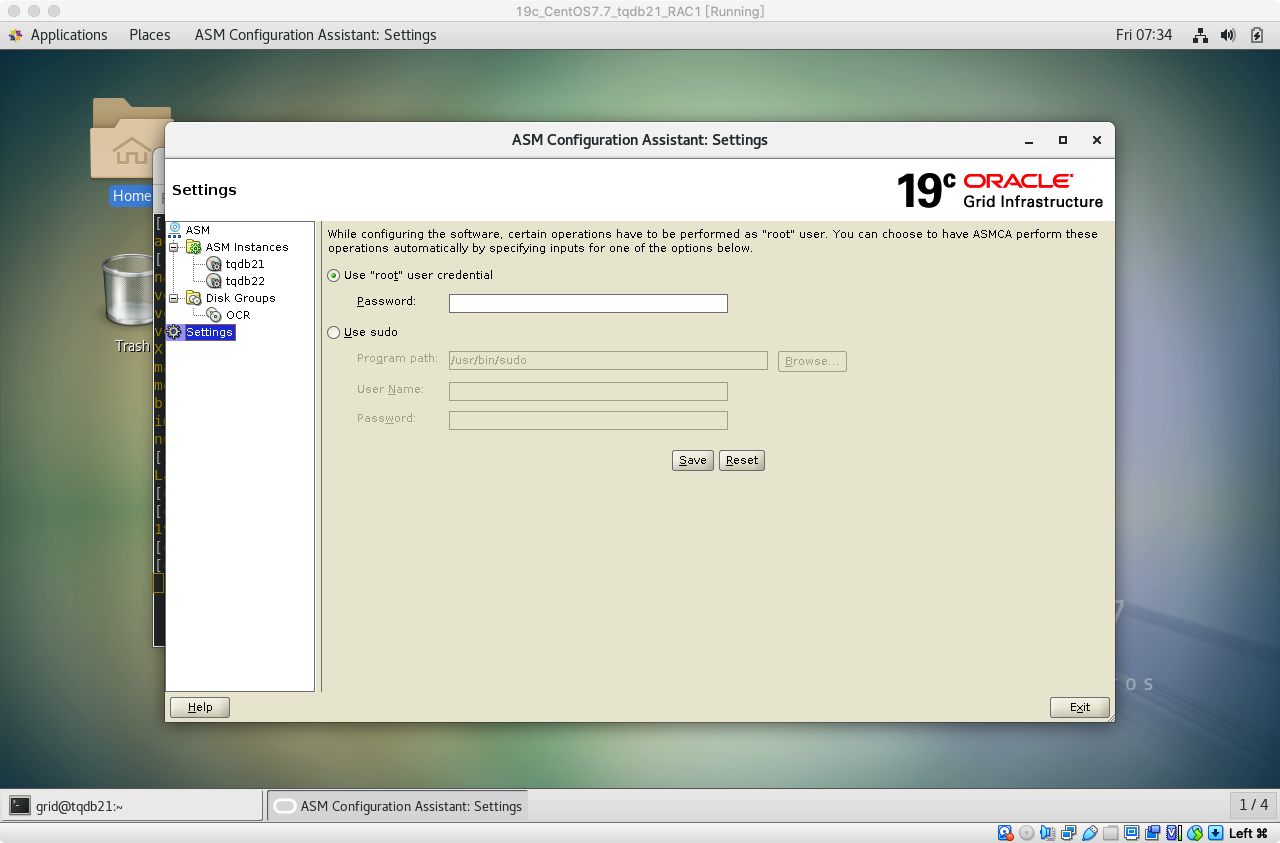

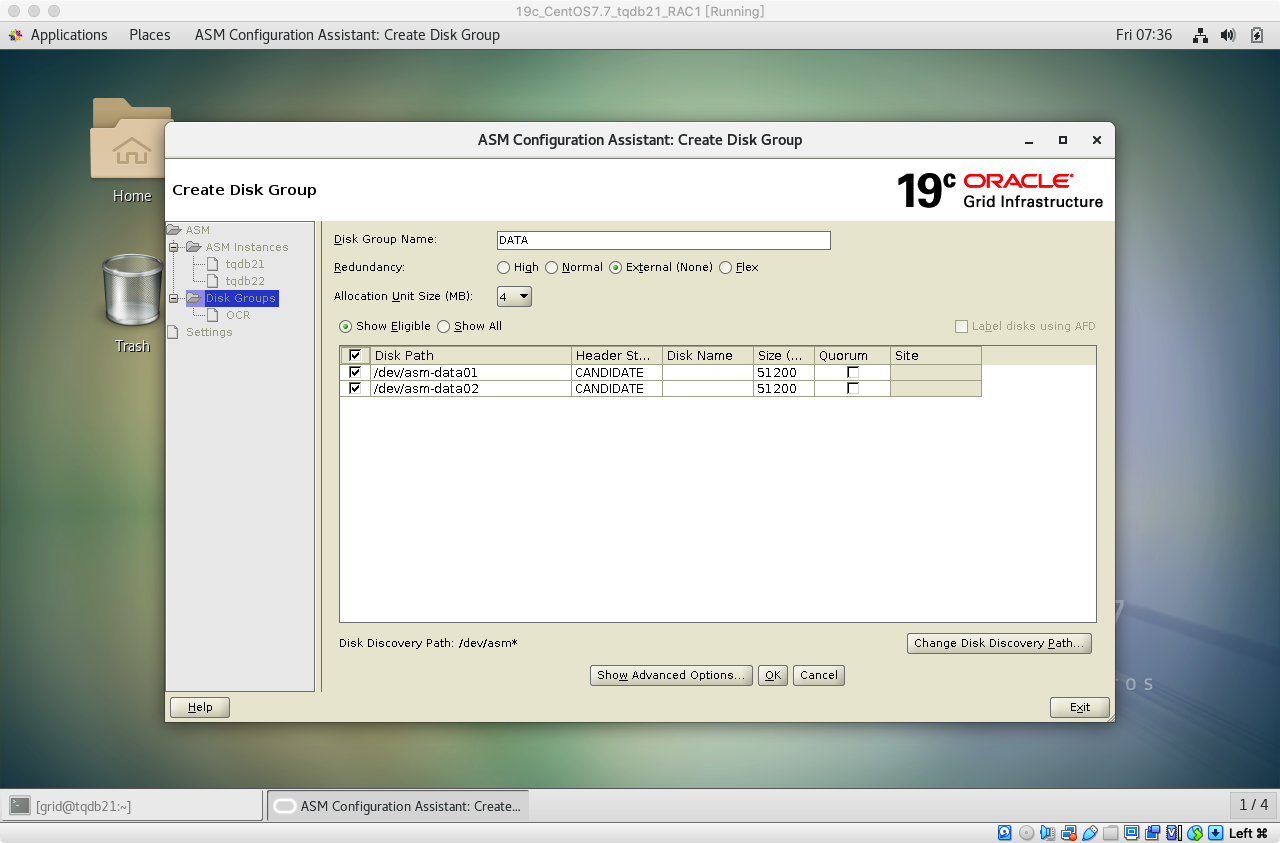

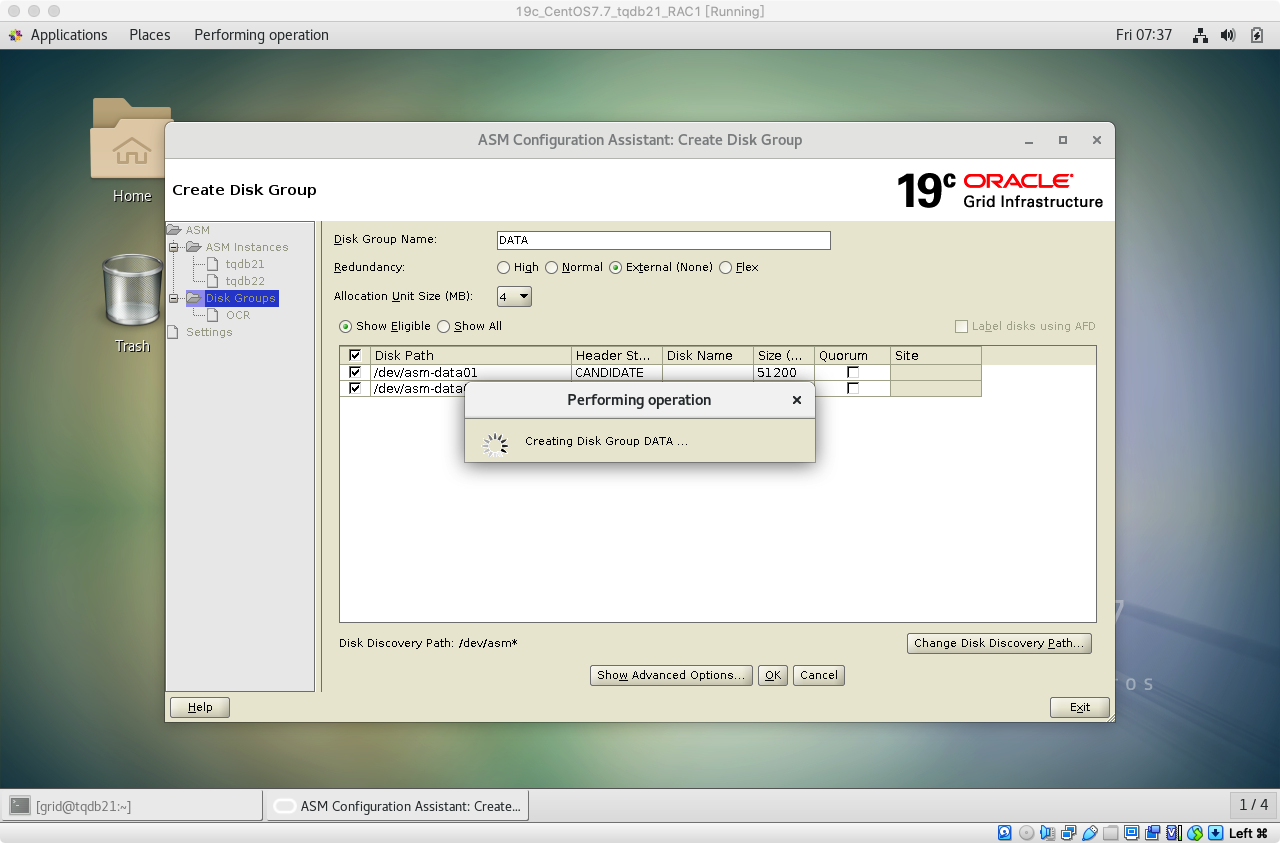

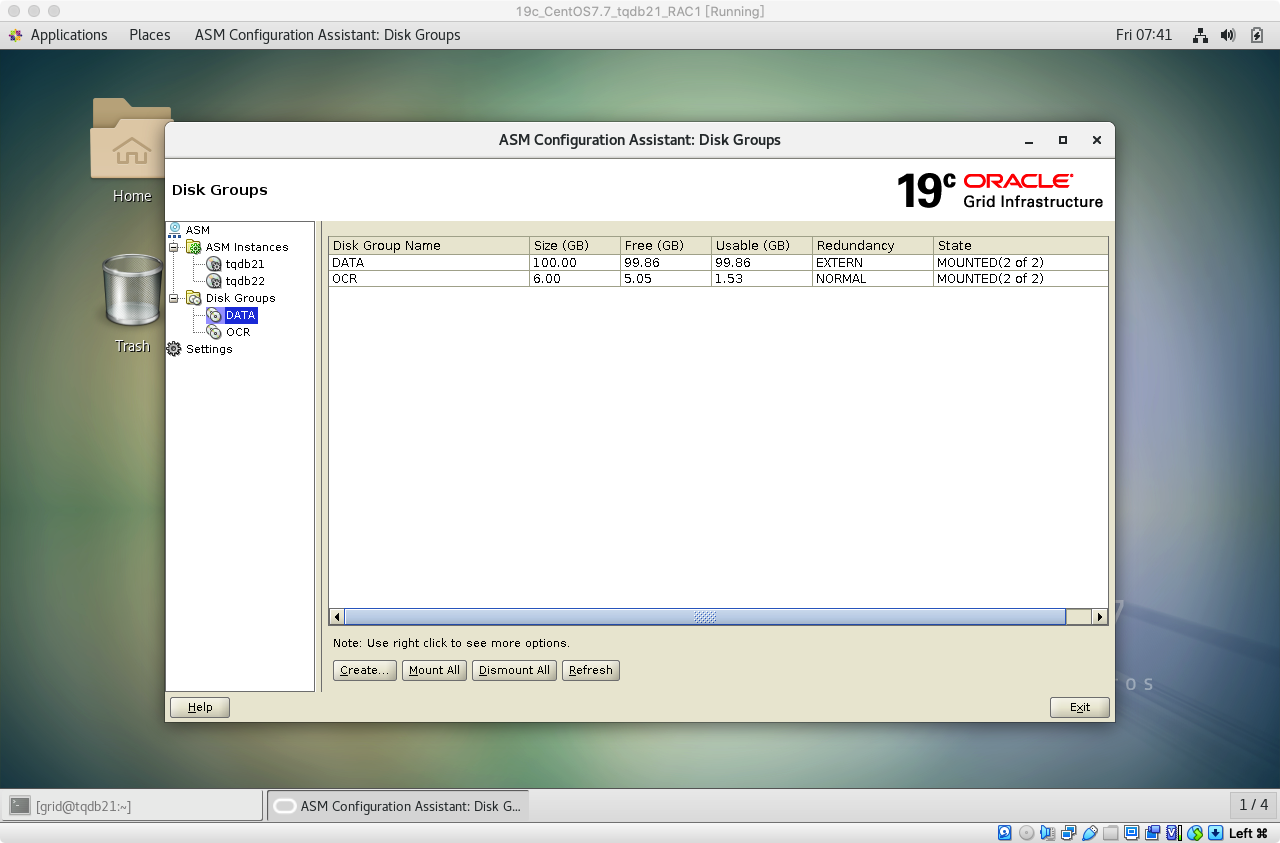

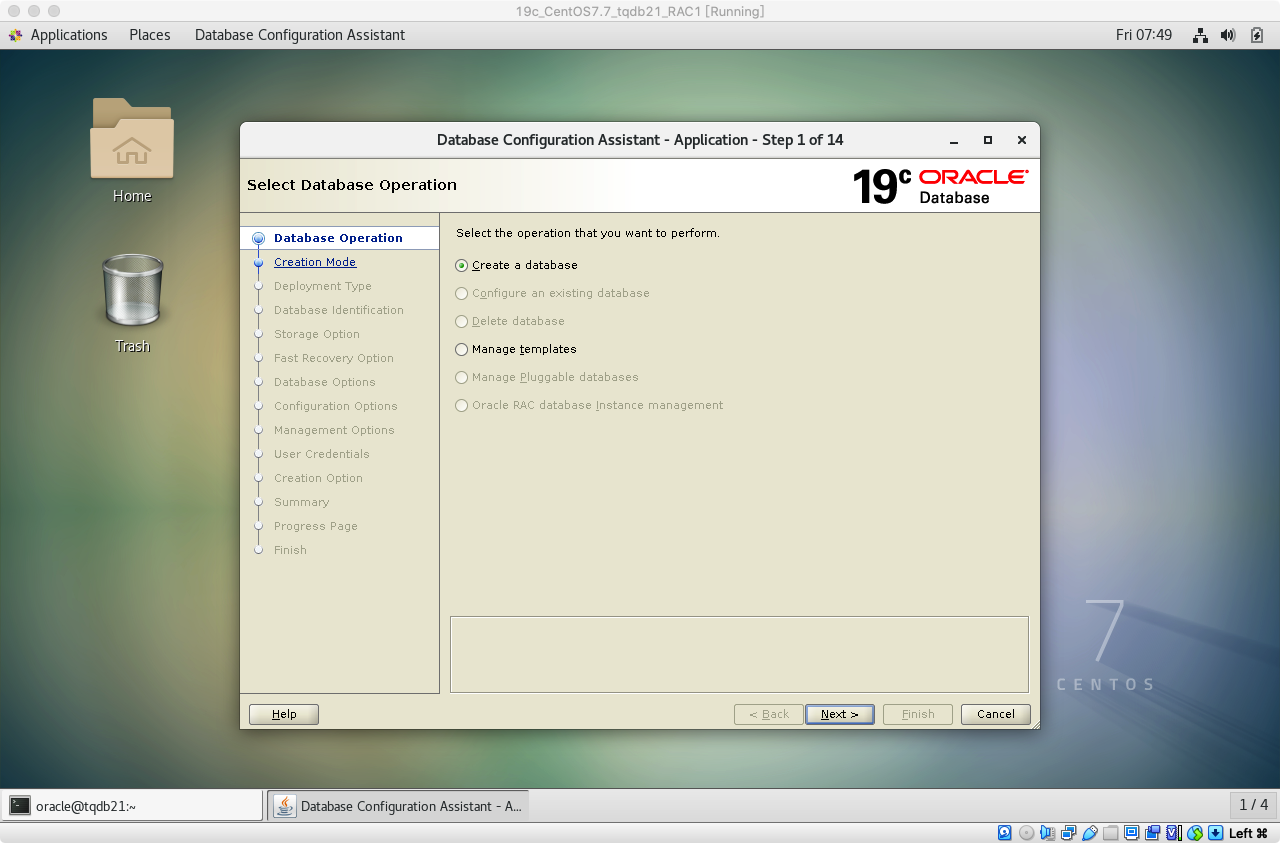

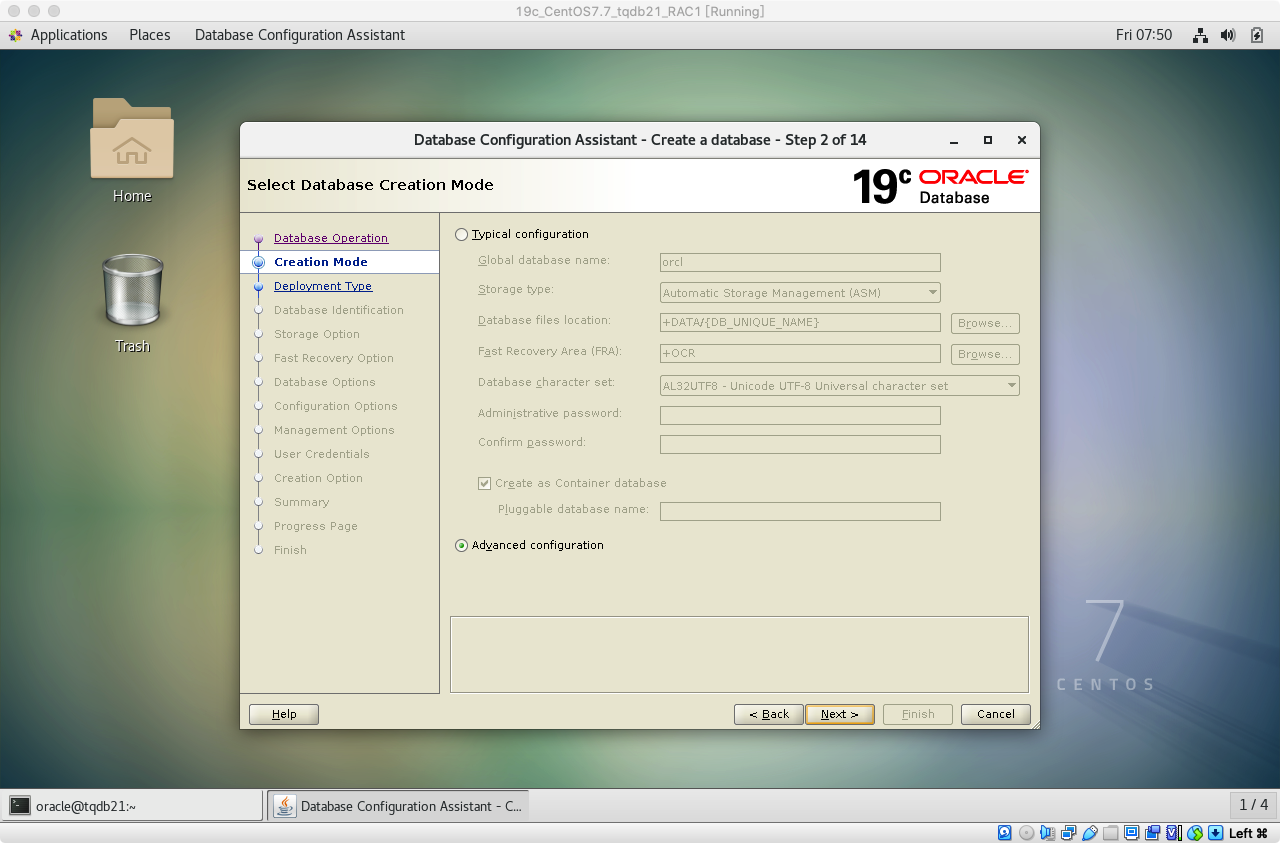

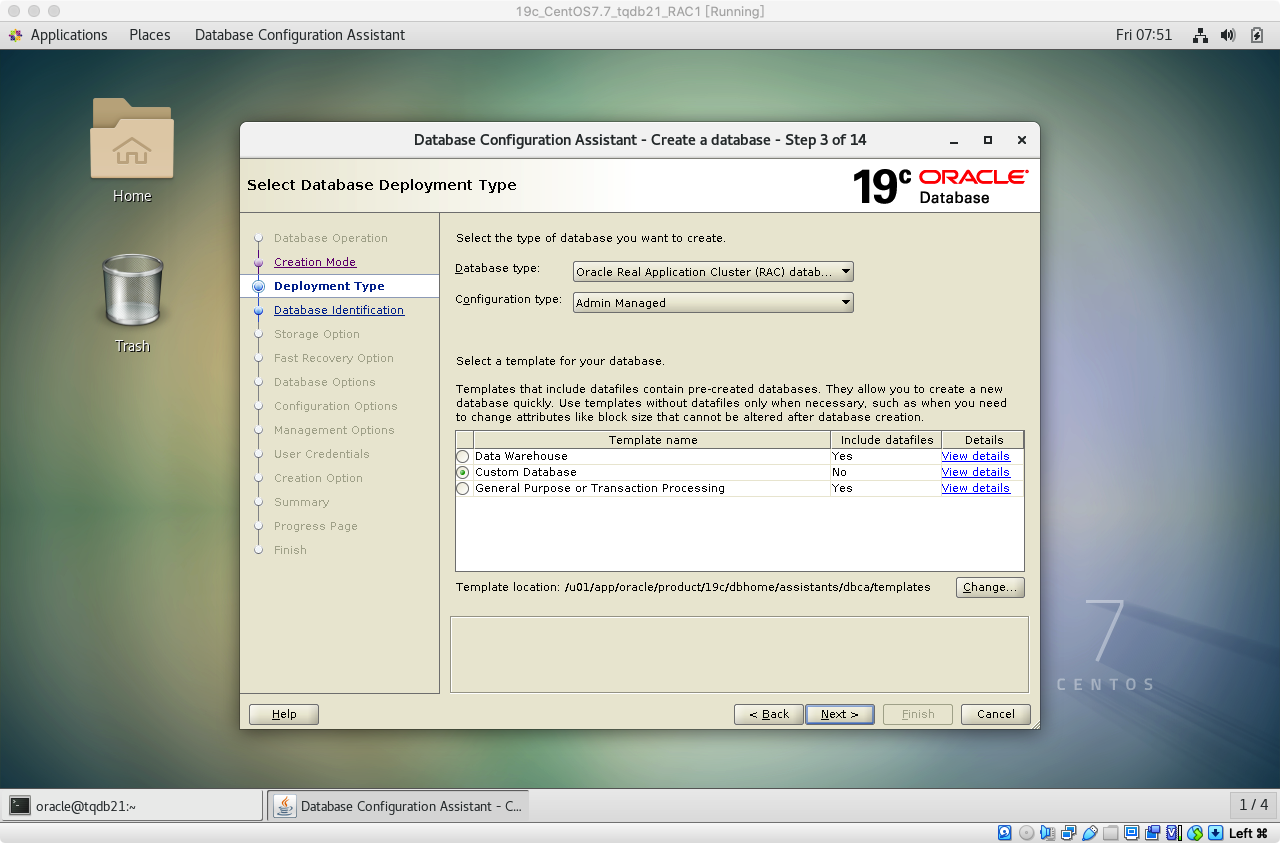

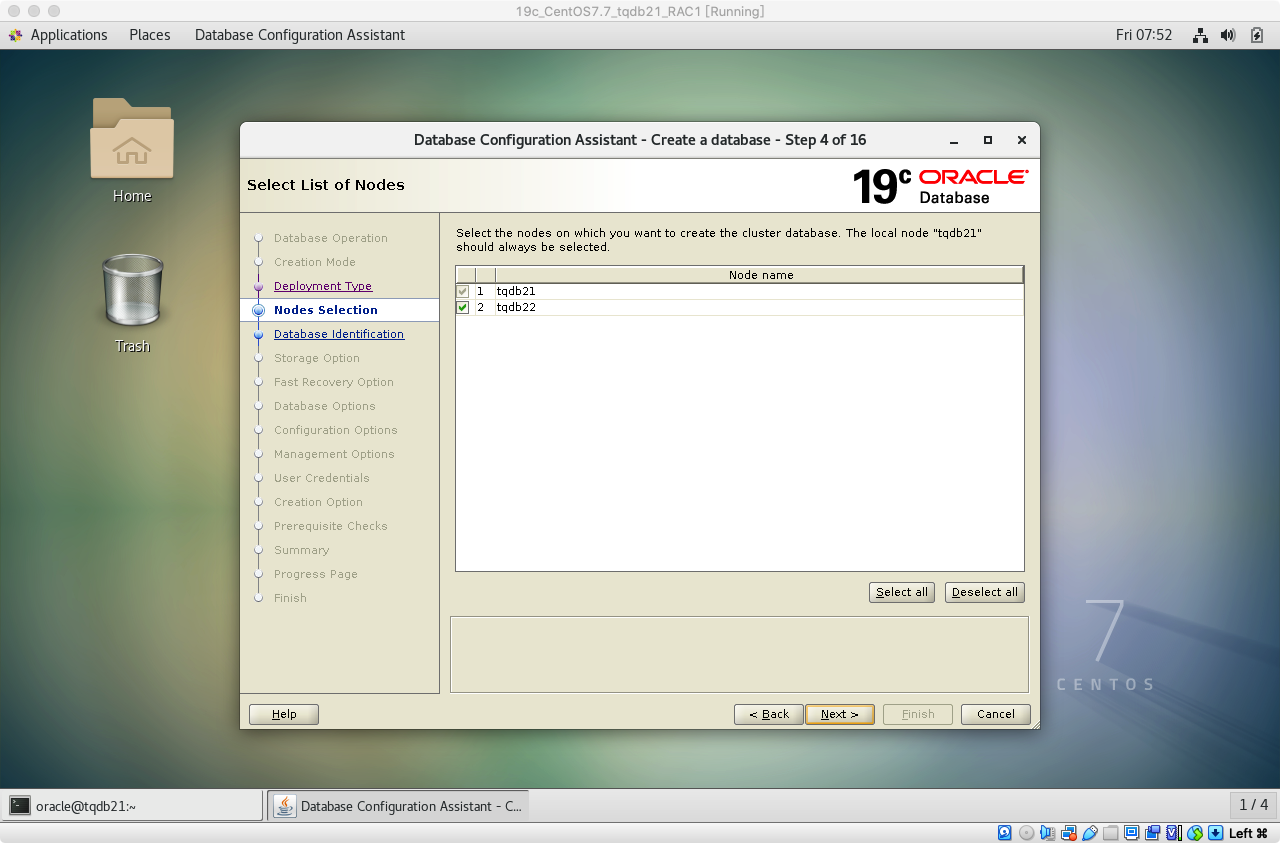

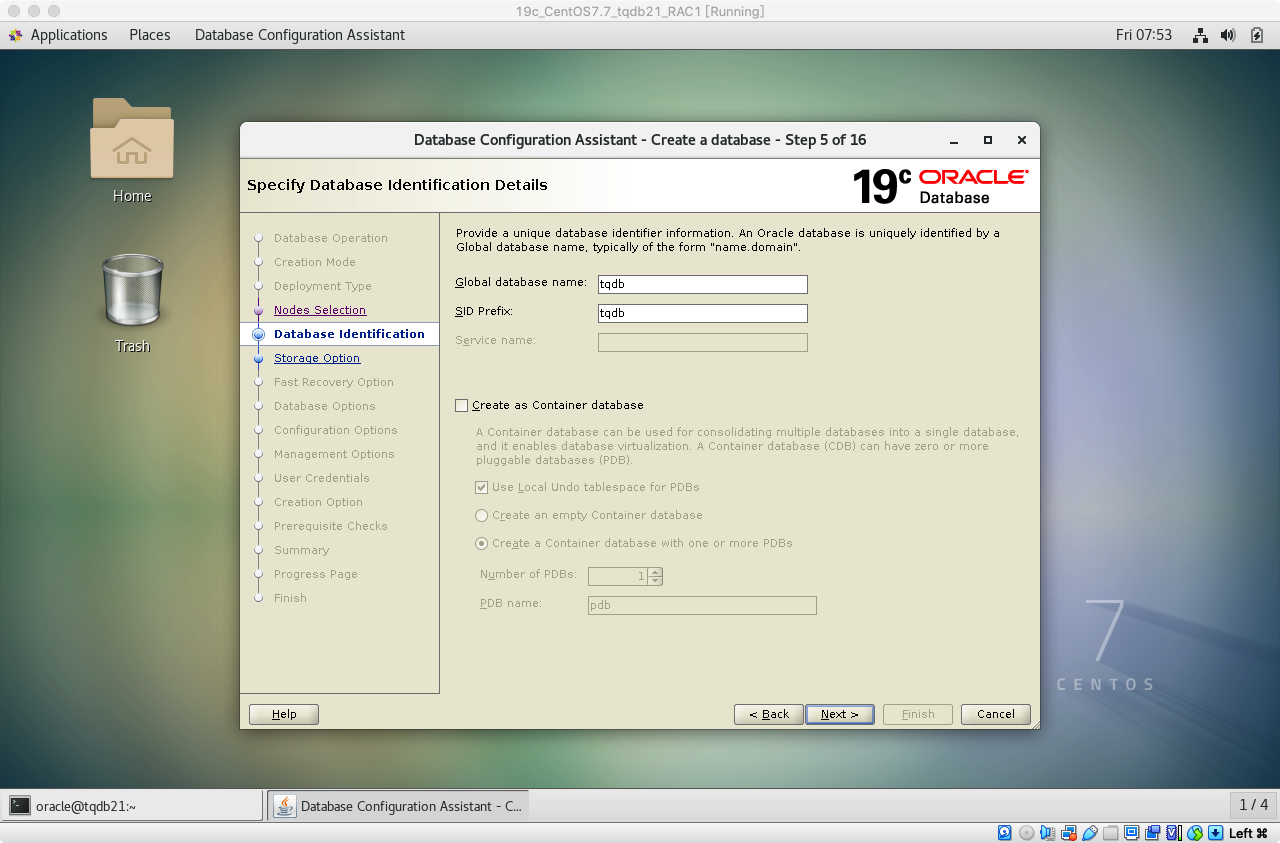

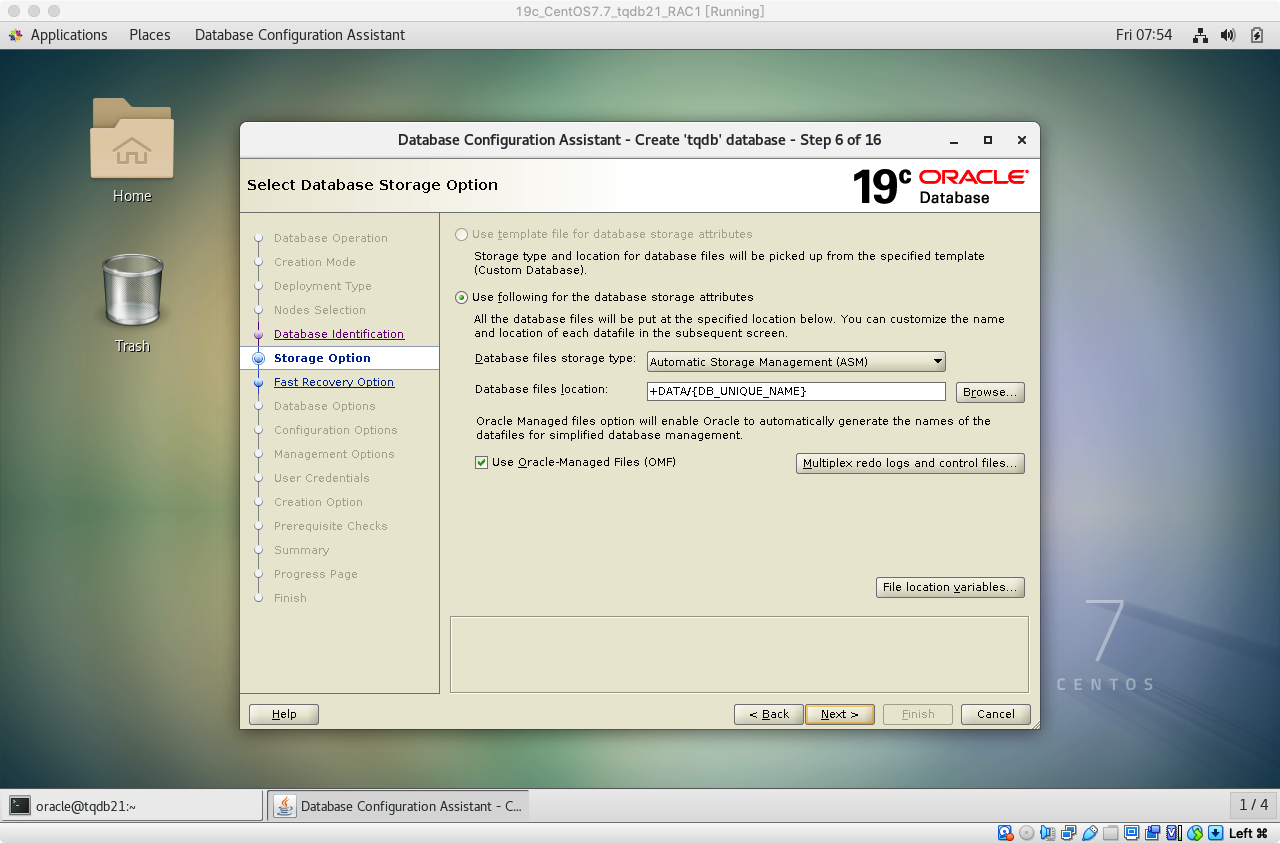

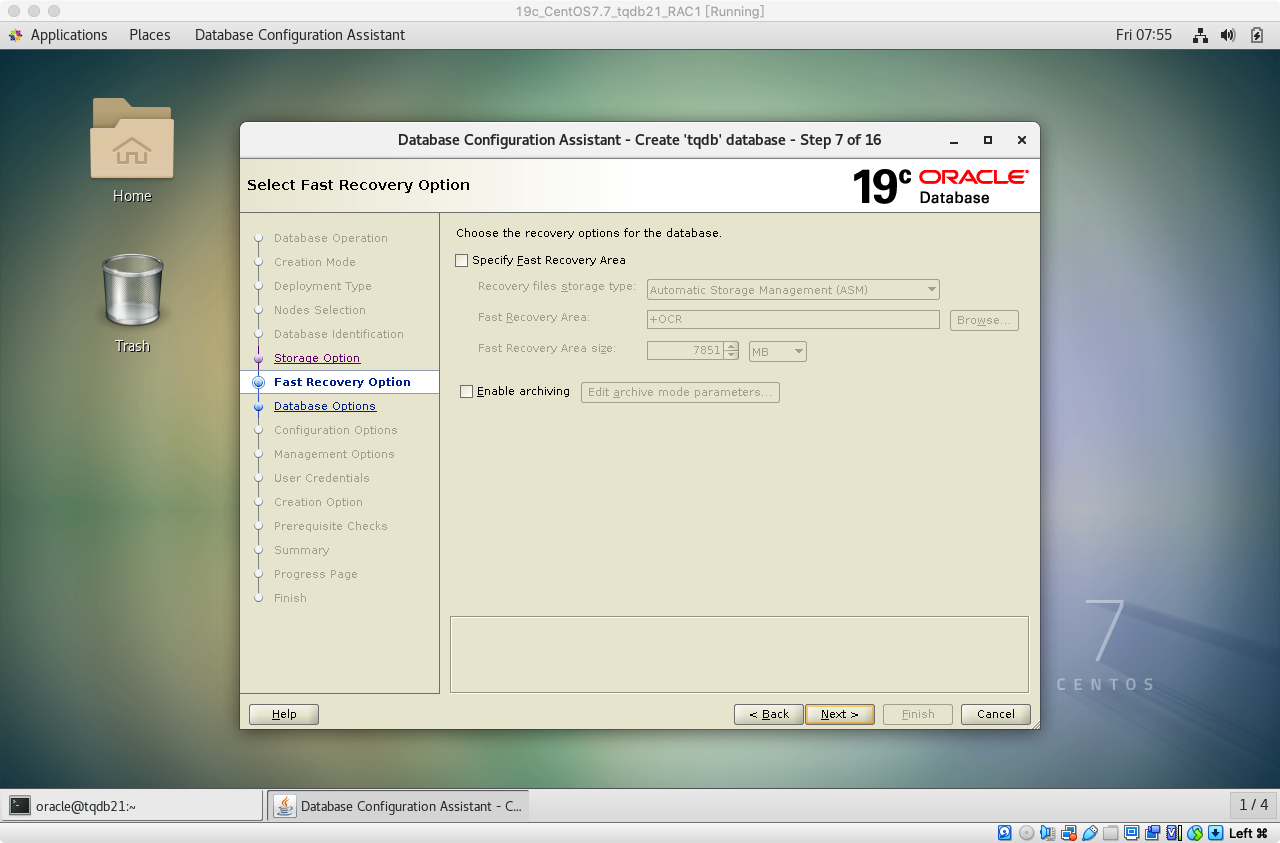

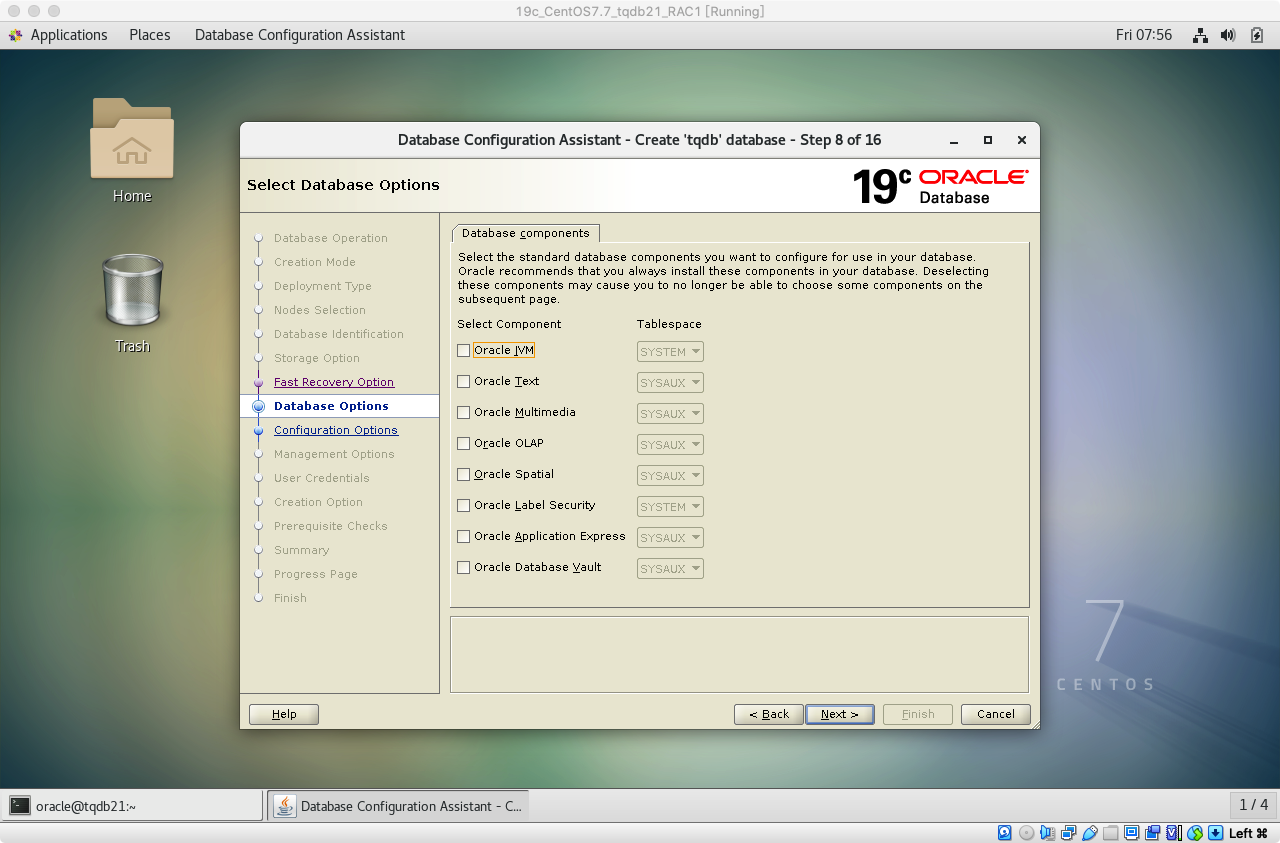

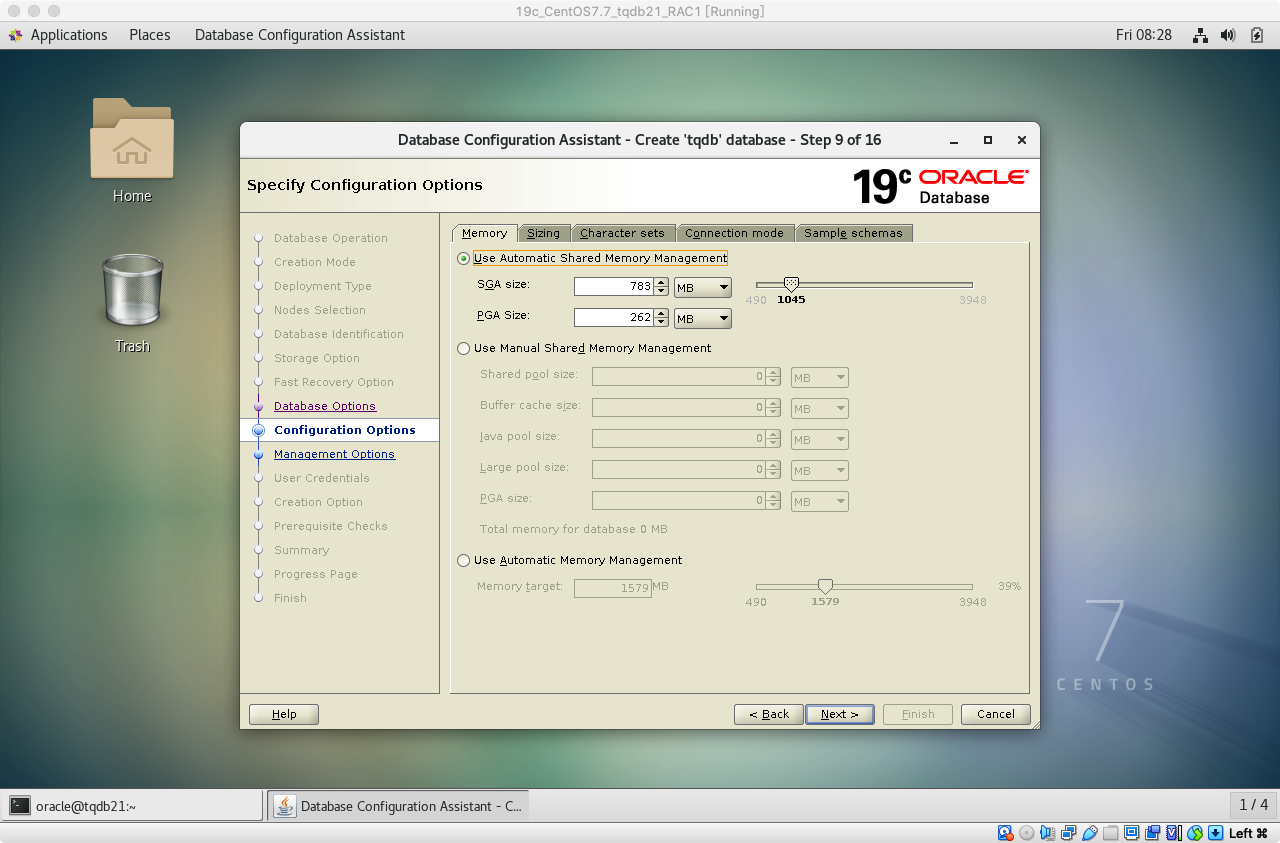

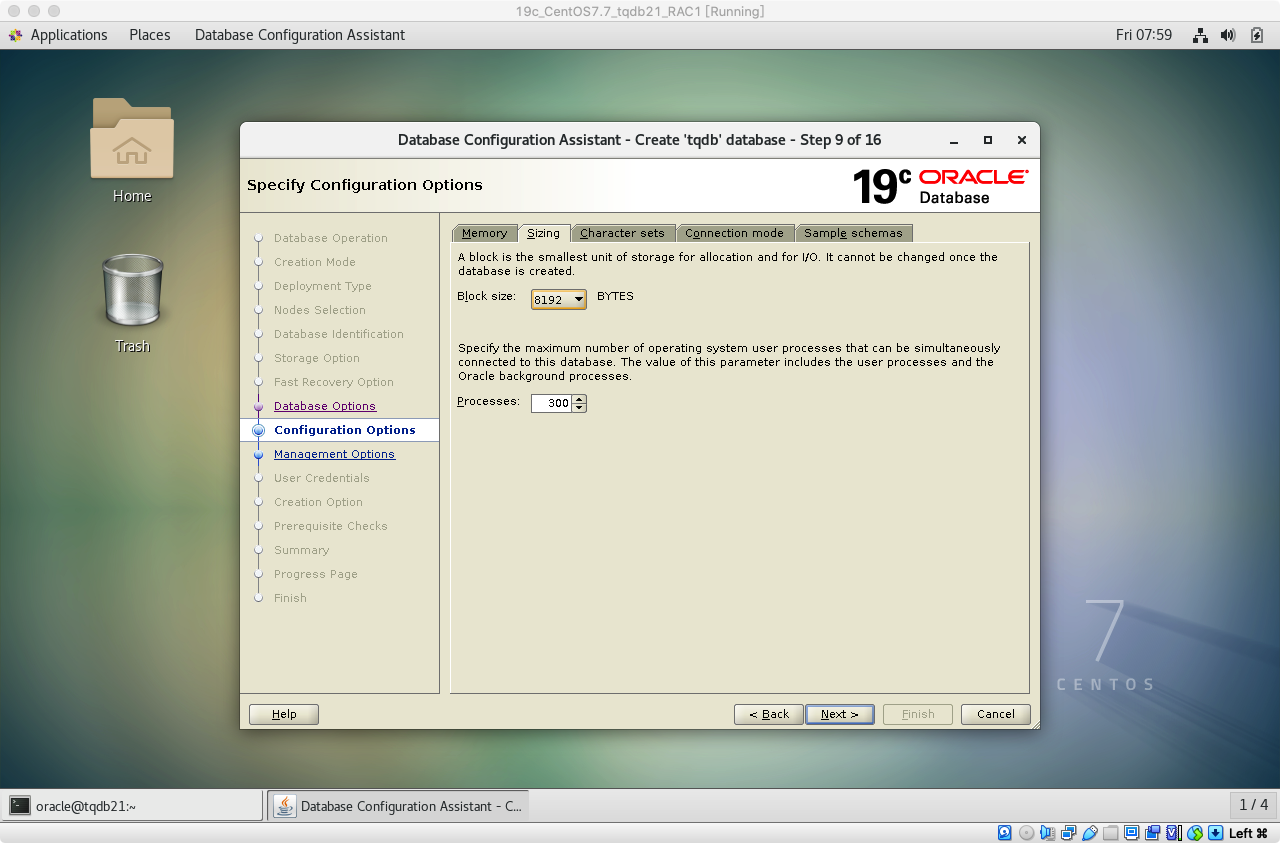

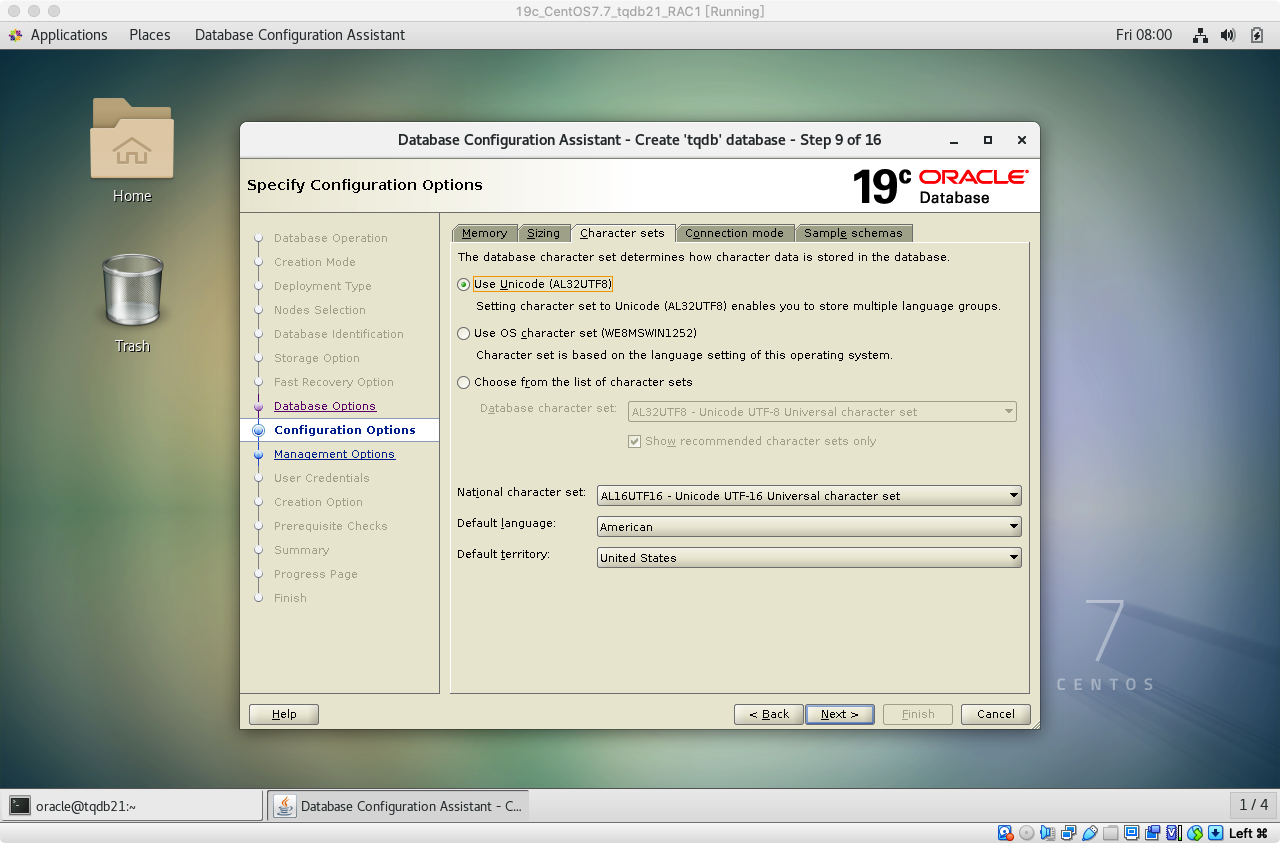

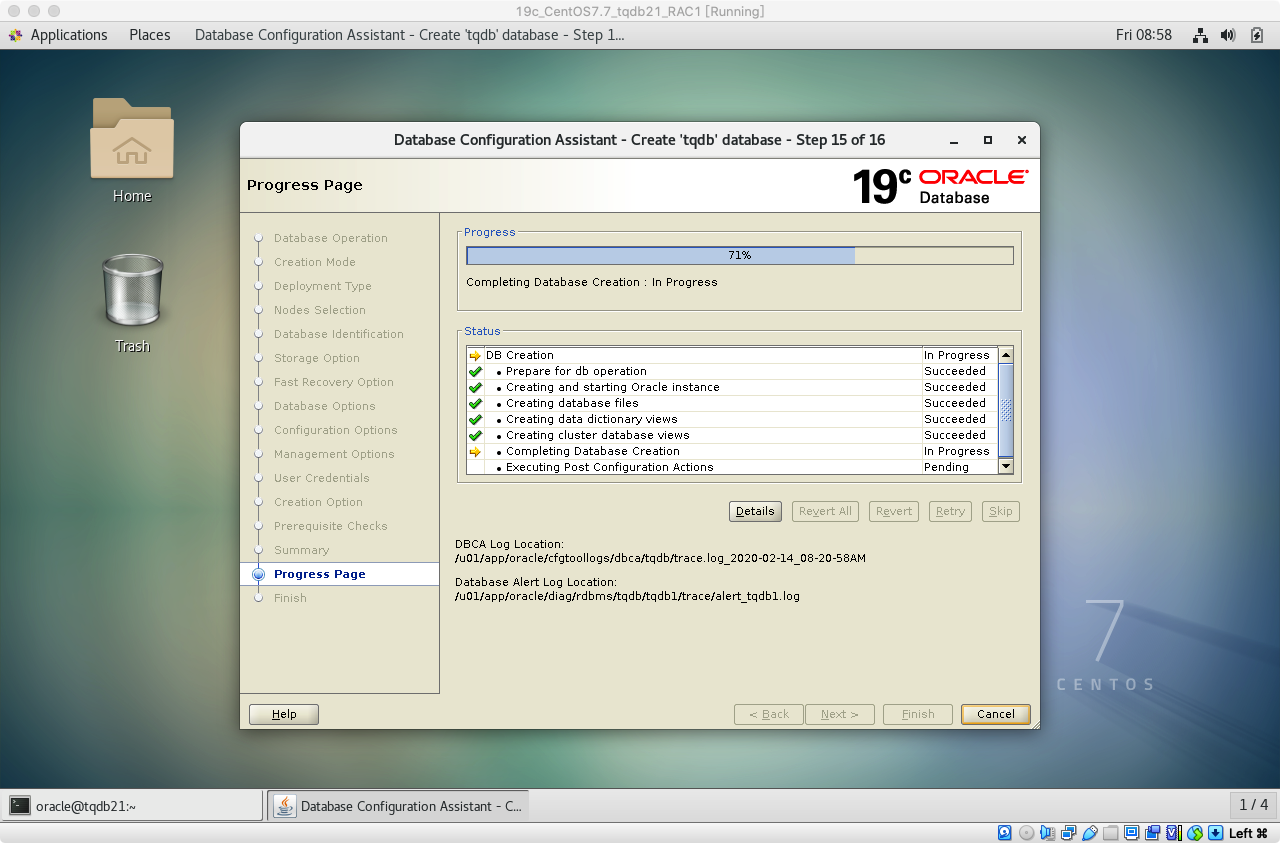

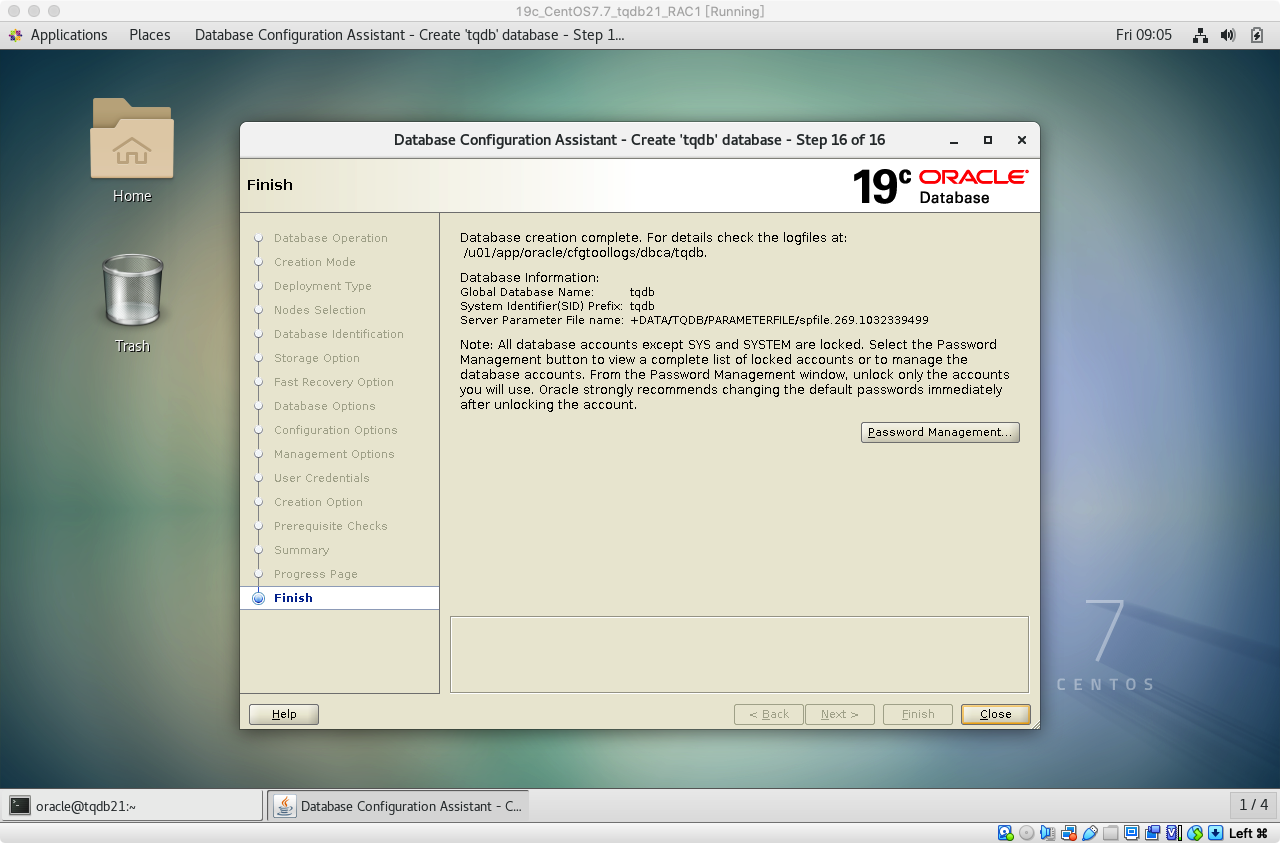

GRID installation screenshots:

- 19c RAC GRID installation 01
- 19c RAC GRID installation 02
- 19c RAC GRID installation 03
- 19c RAC GRID installation 04 ==Note the SCAN Name==
- 19c RAC GRID installation 05

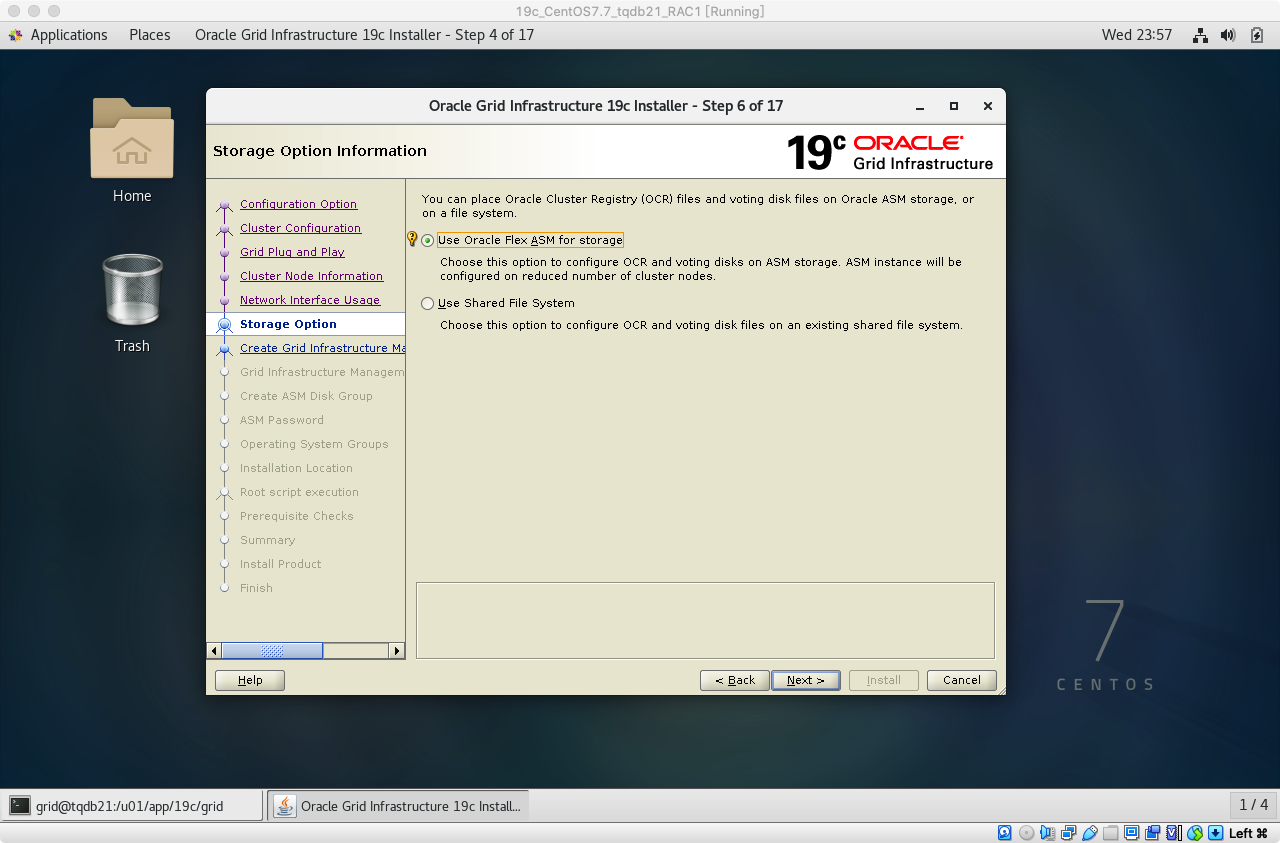

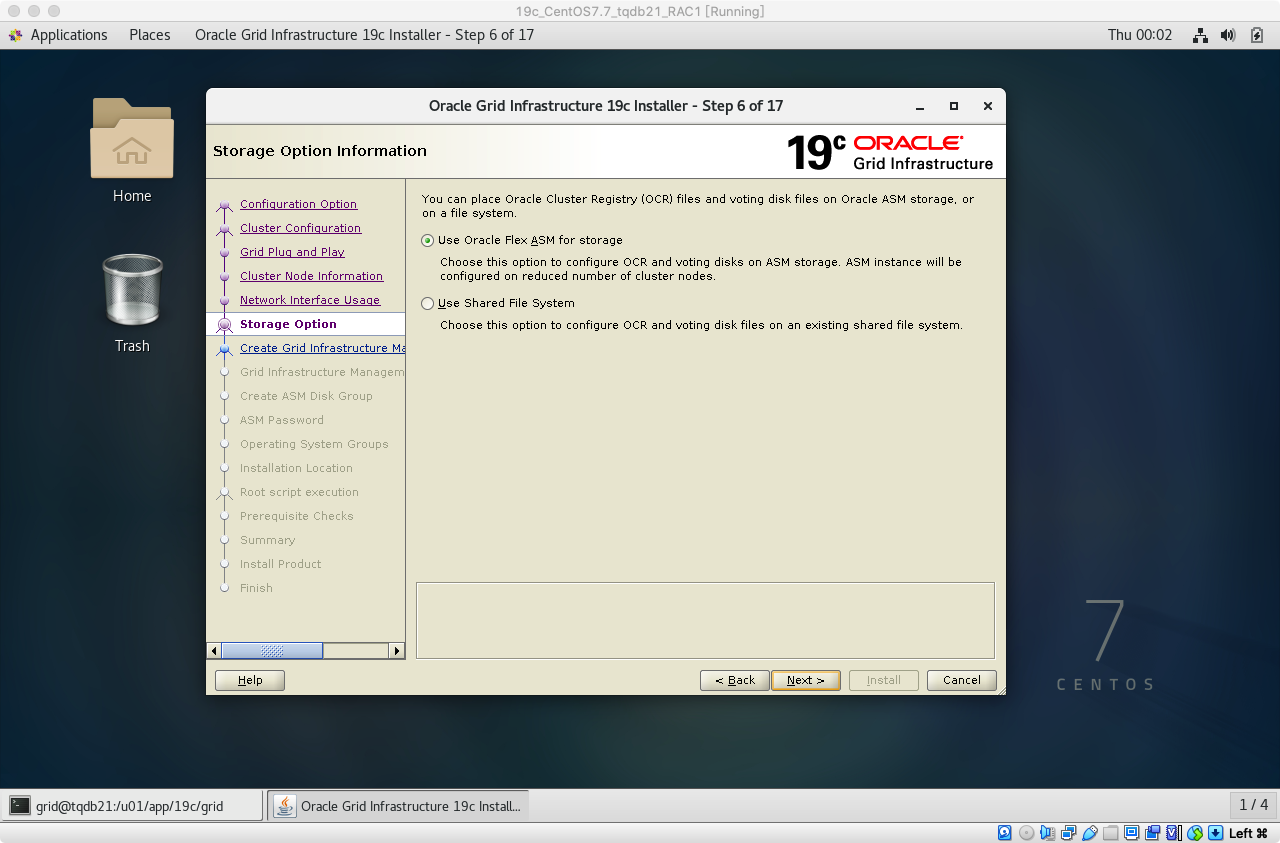

- 19c RAC GRID installation 06
- 19c RAC GRID installation 07
- 19c RAC GRID installation 08
- 19c RAC GRID installation 09

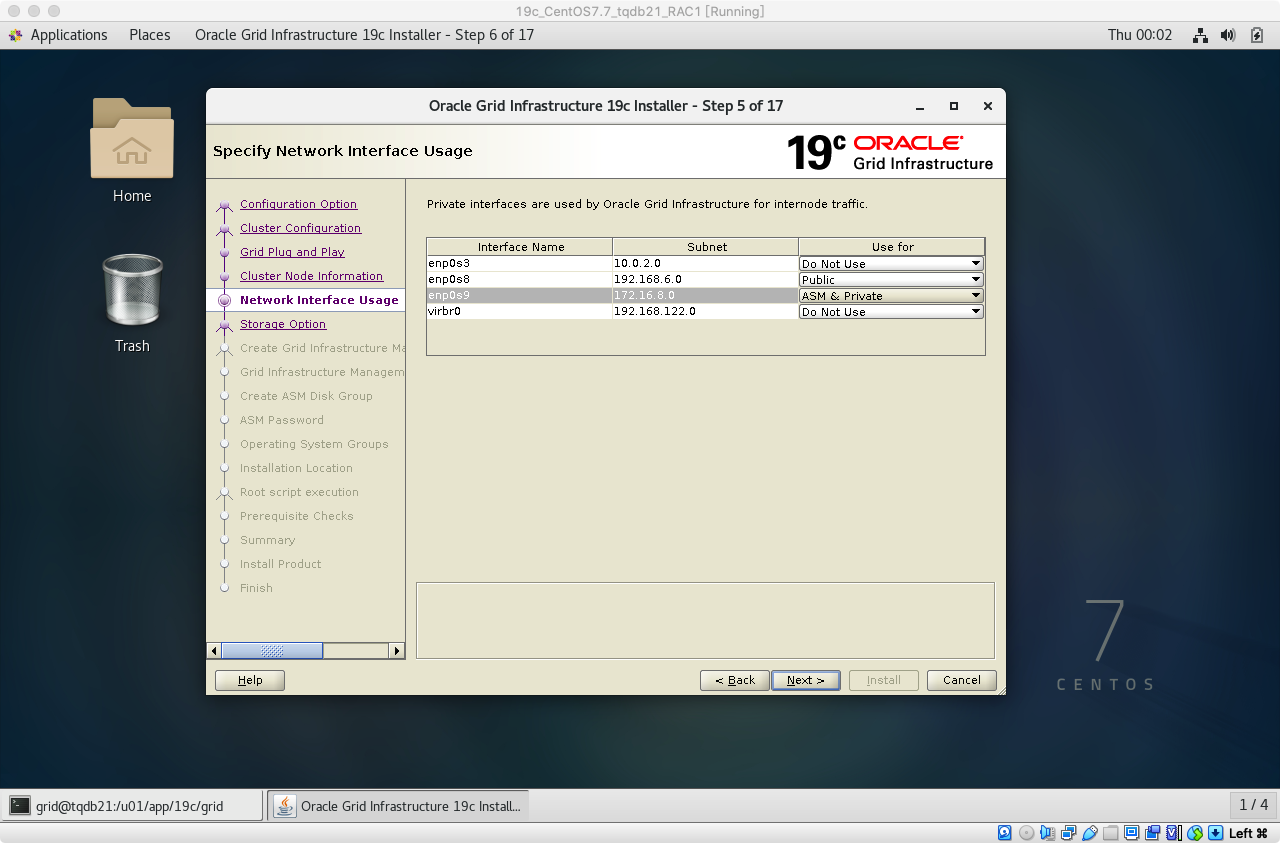

- 19c RAC GRID installation 10: select the Private Interfaces
- 19c RAC GRID installation 11

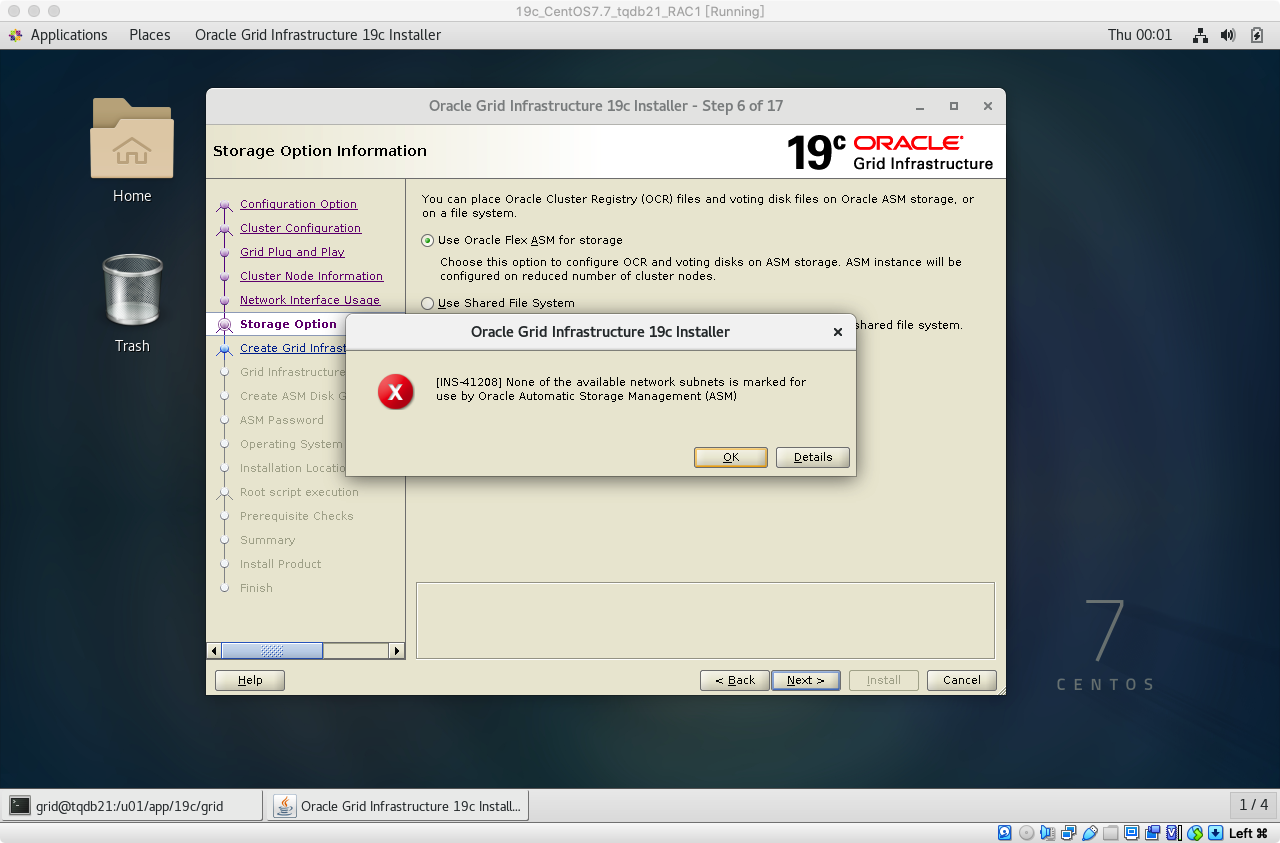

If Oracle Flex ASM is used, the private (interconnect) interface must be set to "ASM & Private".
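After installation, the interface classification chosen on this screen can be read back with `oifcfg getif` (run as grid from the Grid home). With "ASM & Private" selected, the private interface (enp0s9 in section 1.5) should be listed with both `cluster_interconnect` and `asm`. The parser below is a sketch that assumes the usual `oifcfg getif` layout of interface, subnet, scope and type columns, for example `enp0s9  172.16.8.0  global  cluster_interconnect,asm`; `check_flex_asm_iface` is a hypothetical name, and the sample line is illustrative, not captured from this cluster.

```shell
#!/bin/sh
# Hypothetical helper: read "oifcfg getif" output on stdin and check that the
# given interface carries both the interconnect and ASM roles.
# Assumed line layout: <iface> <subnet> <scope> <type[,type...]>
check_flex_asm_iface() {
    awk -v iface="$1" '
        $1 == iface {
            seen = 1
            n = split($4, types, ",")
            for (i = 1; i <= n; i++) {
                if (types[i] == "cluster_interconnect") ic = 1
                if (types[i] == "asm") asm = 1
            }
        }
        END {
            if (!seen) { print "interface " iface " not found"; exit 1 }
            if (!(ic && asm)) { print iface ": expected cluster_interconnect,asm"; exit 1 }
            print iface ": cluster_interconnect,asm OK"
        }
    '
}

# Usage (as grid, after the GI installation):
#   $ORACLE_HOME/bin/oifcfg getif | check_flex_asm_iface enp0s9
```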

- 19c RAC GRID installation 12
- 19c RAC GRID installation 13: set the private network to ASM & Private
- 19c RAC GRID installation 14
- 19c RAC GRID installation 15

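Do not install the Grid Infrastructure Management Repository (GIMR, the MGMTDB). If you do install it, allocate separate disks for it. Releases differ here: in 12c the GIMR is installed even if you choose No, and the MGMTDB cannot be placed on its own disks, so the OCR disk group must be at least 40 GB; 18c allows it to be placed separately; in 19c, choosing No skips it.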

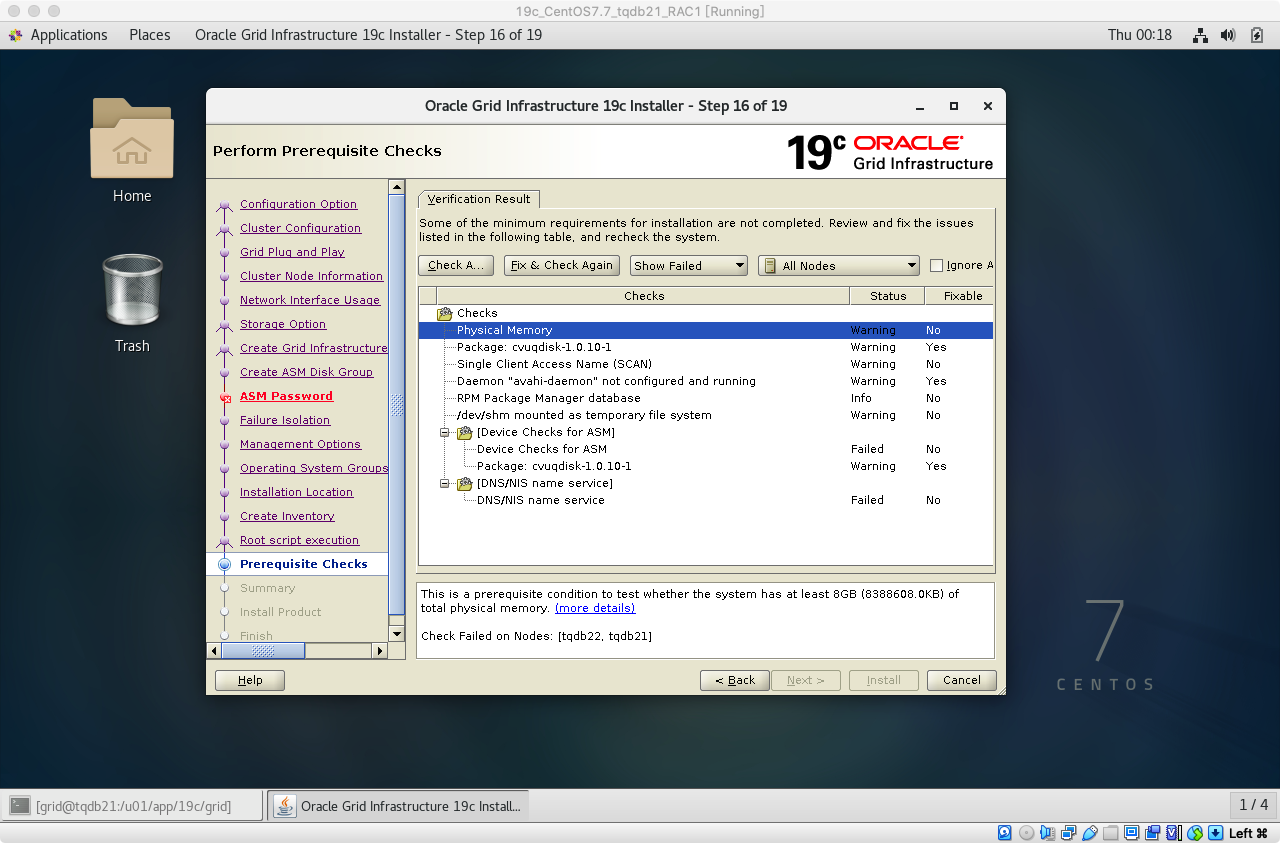

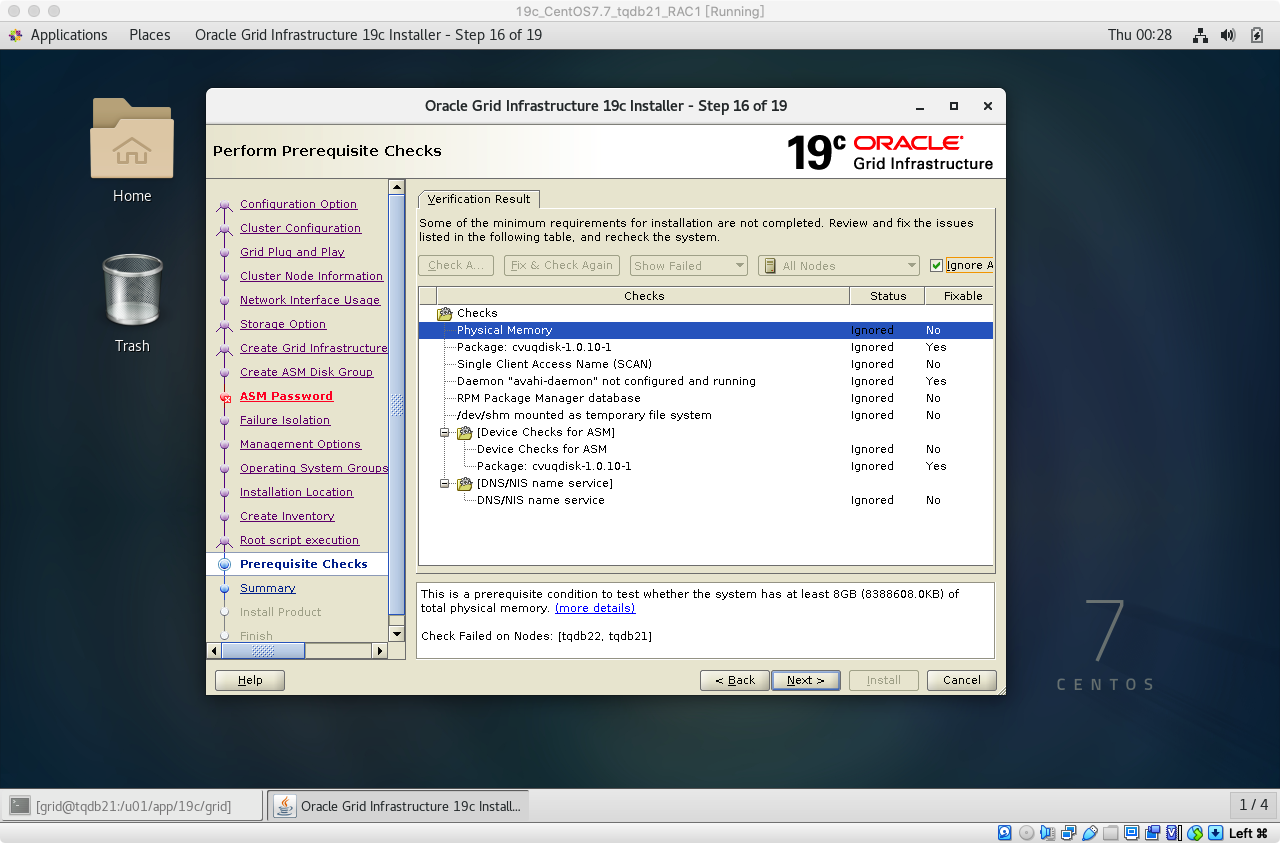

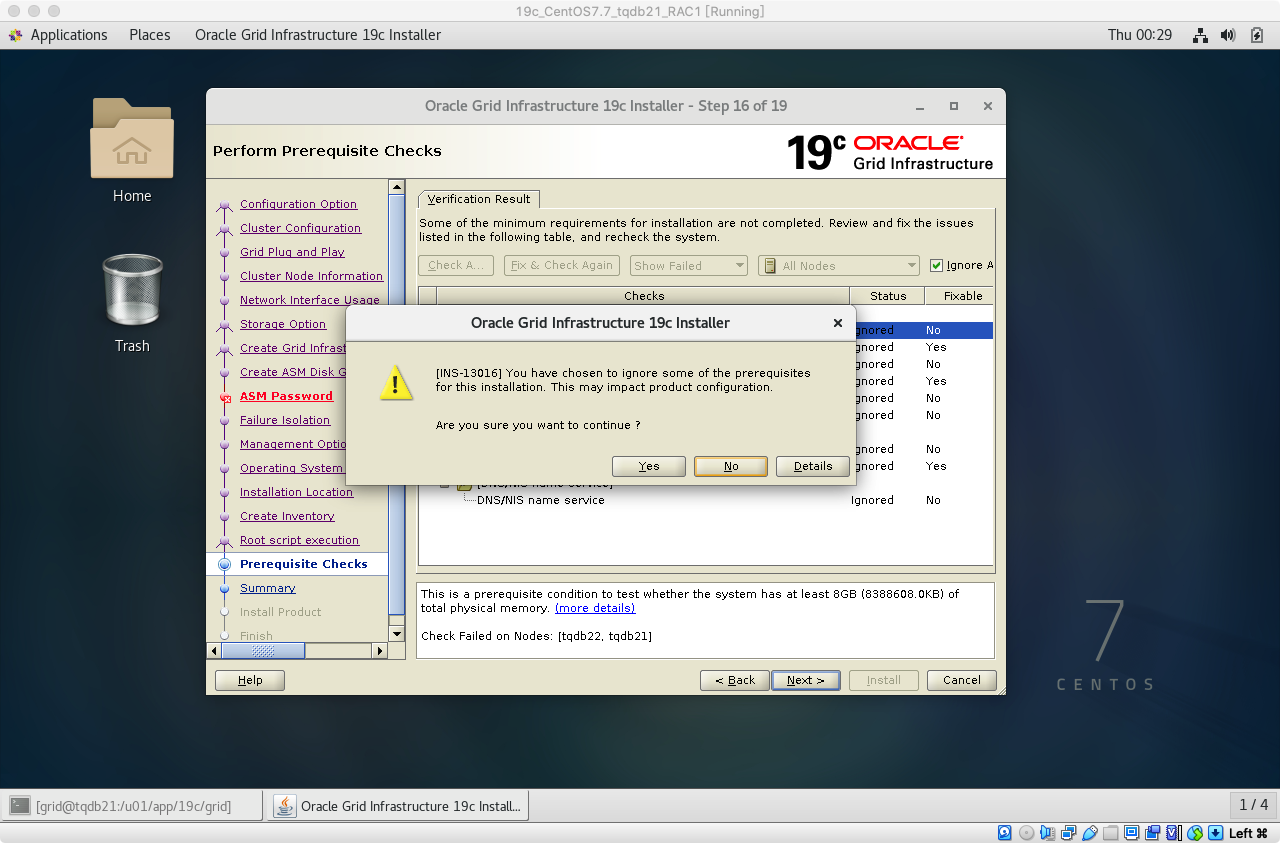

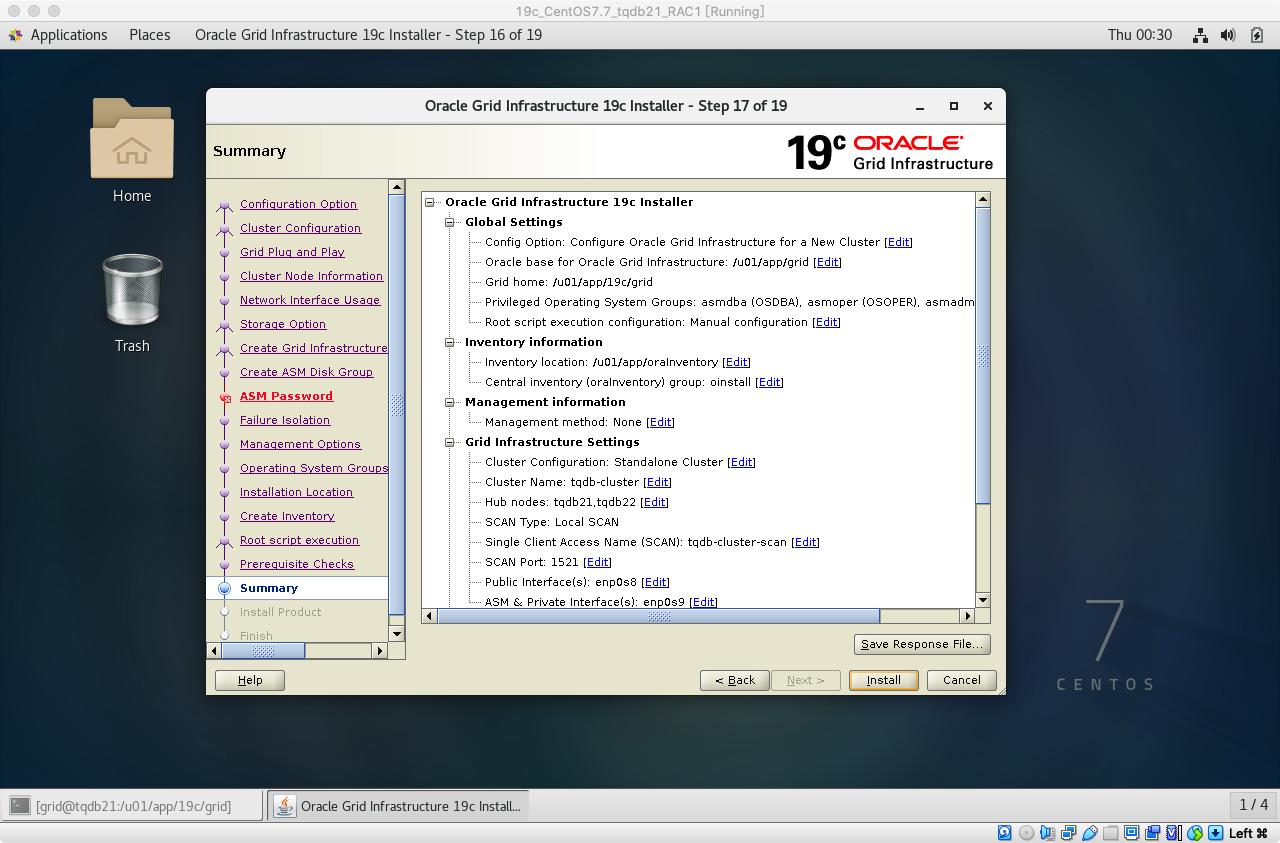

- 19c RAC GRID installation 16
- 19c RAC GRID installation 17: create the `OCR` disk group

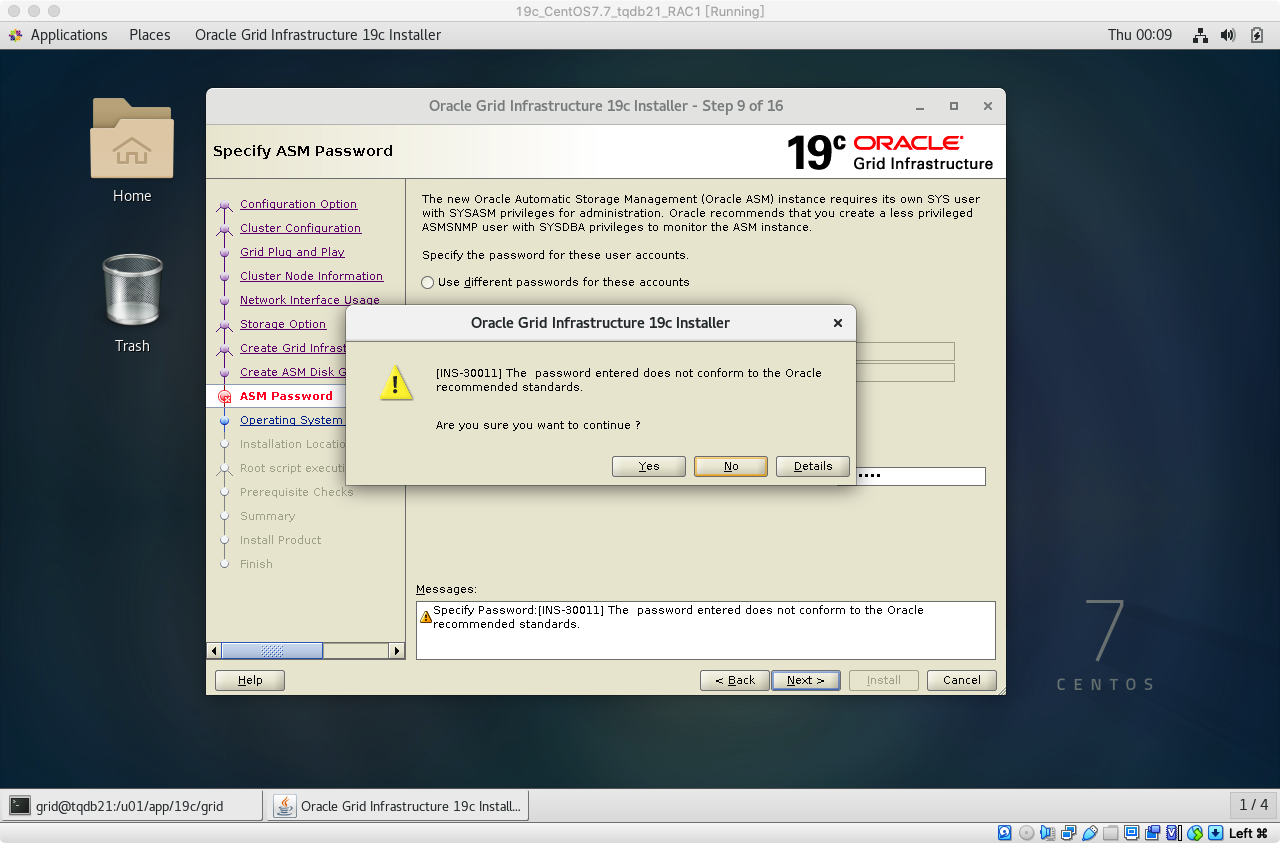

- 19c RAC GRID installation 18: ASM Password
- 19c RAC GRID installation 19: ASM Password, Yes

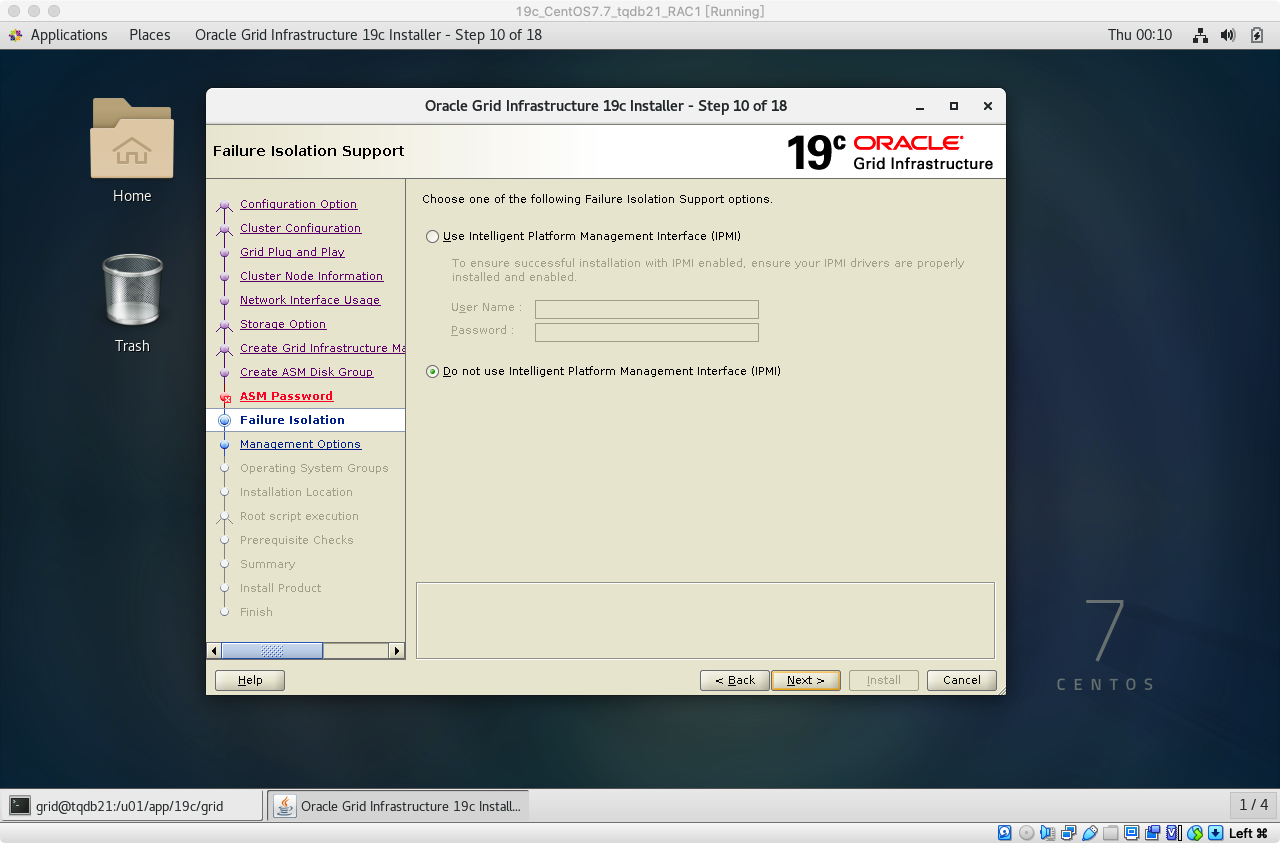

- 19c RAC GRID installation 20

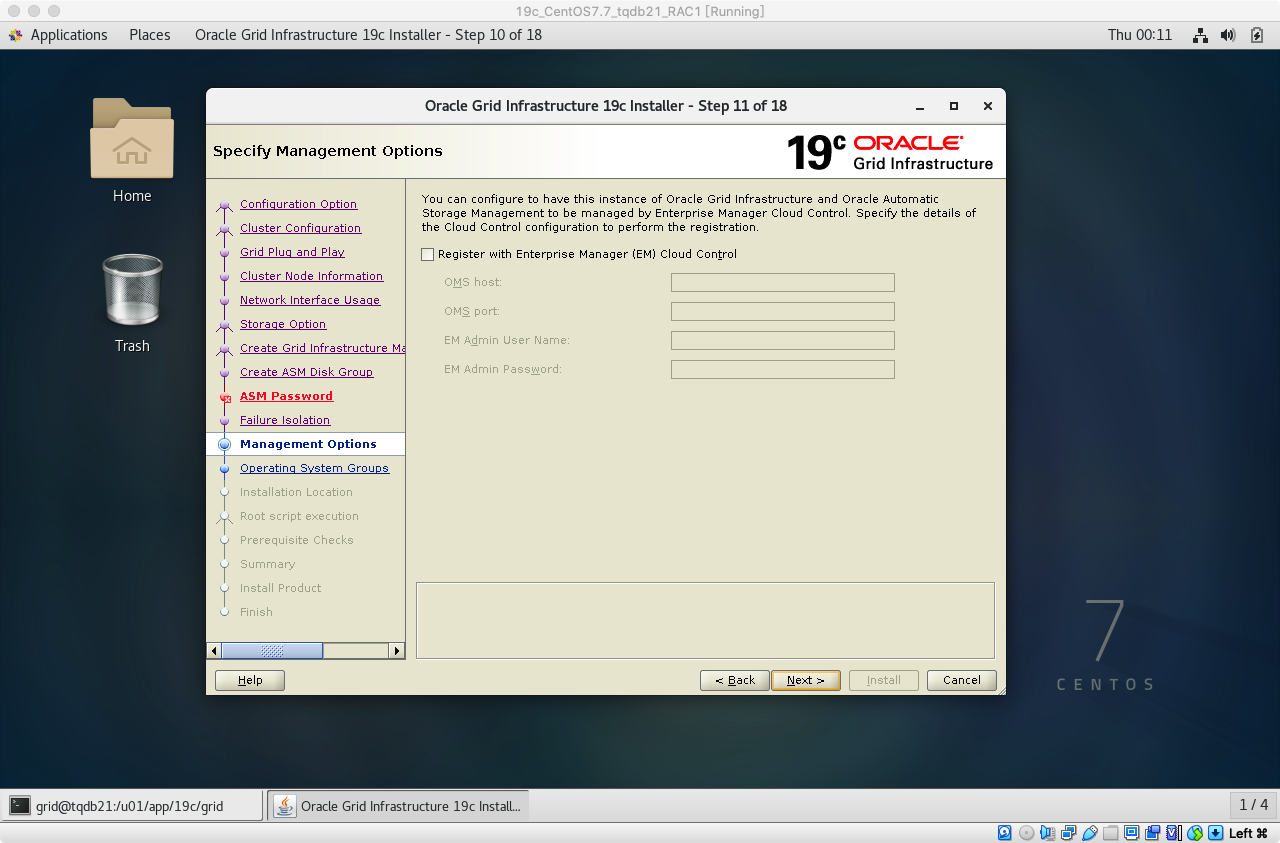

- 19c RAC GRID installation 21

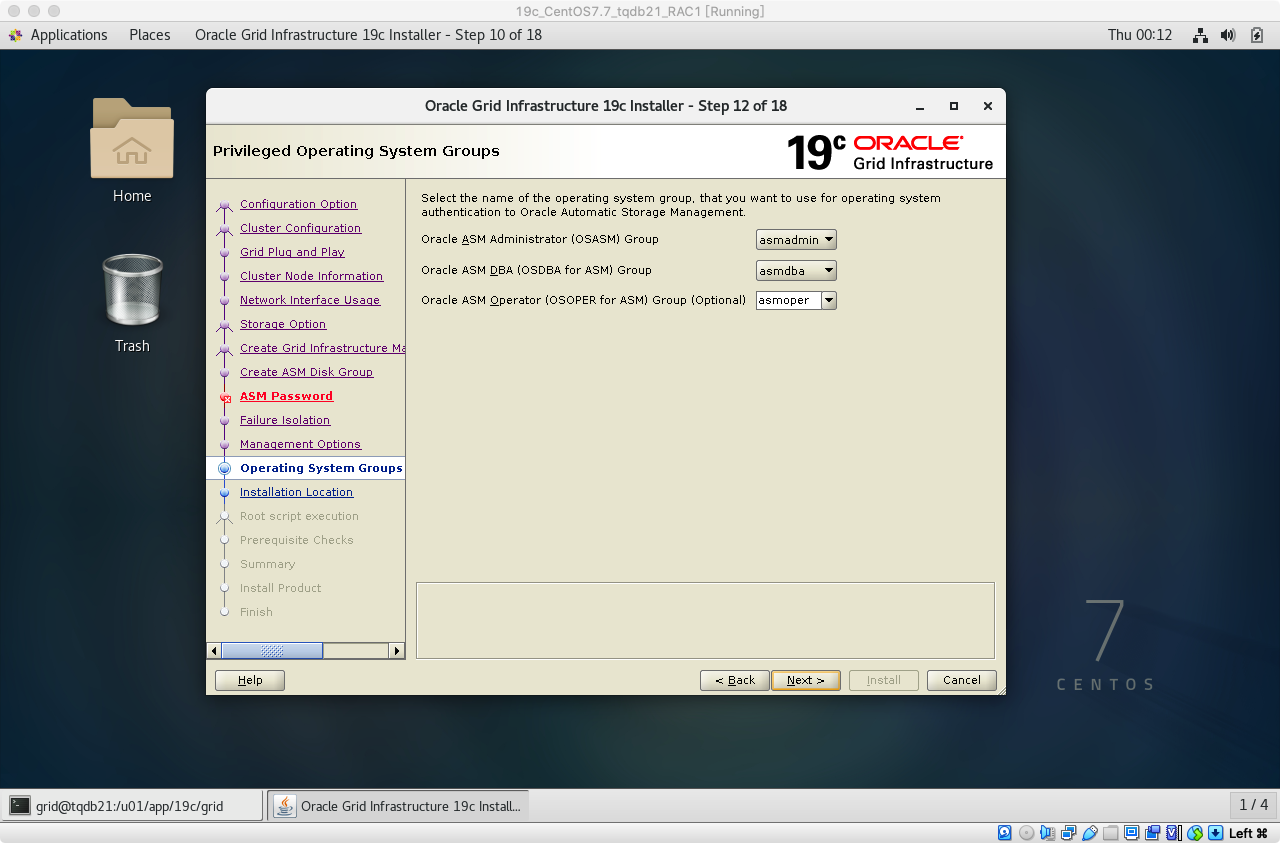

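- 19c RAC GRID installation 22
- 19c RAC GRID installation 23
- 19c RAC GRID installation 24
- 19c RAC GRID installation 25
- 19c RAC GRID installation 26
- 19c RAC GRID installation 27
- 19c RAC GRID installation 28
- 19c RAC GRID installation 29: Yes
- 19c RAC GRID installation 30
- 19c RAC GRID installation 31
- 19c RAC GRID installation 32
- 19c RAC GRID installation 33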

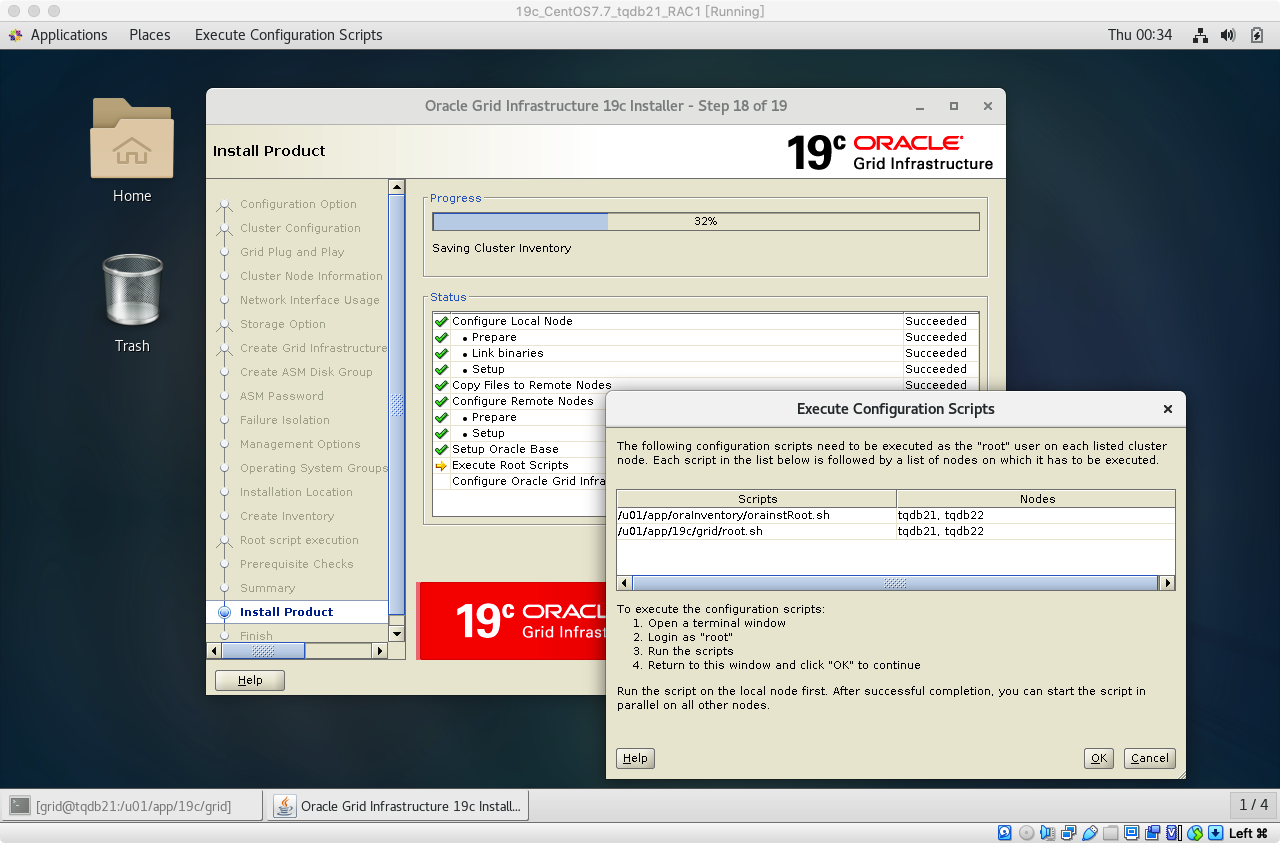

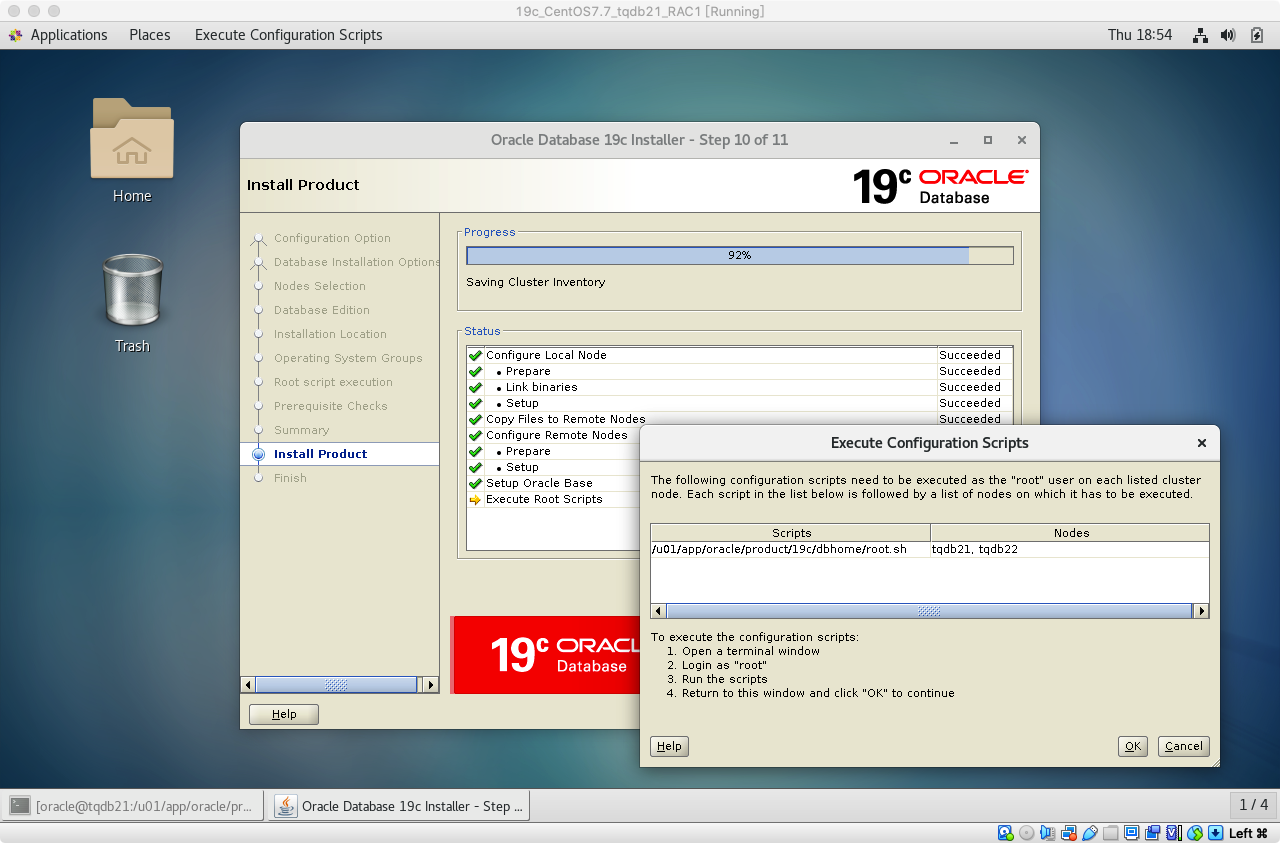

- 19c RAC GRID installation 34: run the two root scripts on each node in turn

==Click OK only after the scripts have finished==

Run the scripts as root.
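The order matters: `orainstRoot.sh` runs on every node first, and `root.sh` must finish on node 1 (tqdb21) before it is started on node 2 (tqdb22), because the first node's root.sh creates the cluster configuration that the other node joins. The sketch below only encodes that sequence. The function names, the `DRY_RUN` switch and the passwordless root SSH it relies on are assumptions for illustration; in this installation the scripts were run by hand on each node, as the logs that follow show.

```shell
#!/bin/sh
# Hypothetical sketch of the order for the two root scripts on a 2-node RAC.
# Assumes root can ssh to each node without a password. By default it only
# prints the commands (DRY_RUN=1); set DRY_RUN=0 to really execute them.
INVENTORY=/u01/app/oraInventory
GRID_HOME=/u01/app/19c/grid

run_on() {
    target=$1; shift
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "ssh root@$target $*"
    else
        ssh "root@$target" "$@" || { echo "failed on $target: $*" >&2; return 1; }
    fi
}

run_root_scripts() {
    # Step 1: orainstRoot.sh on every node.
    for node in "$@"; do
        run_on "$node" "$INVENTORY/orainstRoot.sh" || return 1
    done
    # Step 2: root.sh one node at a time, first node first; stop on any error.
    for node in "$@"; do
        run_on "$node" "$GRID_HOME/root.sh" || return 1
    done
}

# Example (dry run, prints the four commands in order):
#   run_root_scripts tqdb21 tqdb22
```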

First script:

Node 1:

```
[root@tqdb21: ~]# /u01/app/oraInventory/orainstRoot.sh
Changing permissions of /u01/app/oraInventory.
Adding read,write permissions for group.
Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.
The execution of the script is complete.
[root@tqdb21: ~]#
```

Node 2:

```
[root@tqdb22: ~]# /u01/app/oraInventory/orainstRoot.sh
Changing permissions of /u01/app/oraInventory.
Adding read,write permissions for group.
Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.
The execution of the script is complete.
[root@tqdb22: ~]#
```

Second script:

Node 1:

```
[root@tqdb21: ~]# /u01/app/19c/grid/root.sh
Performing root user operation.

The following environment variables are set as:
    ORACLE_OWNER= grid
    ORACLE_HOME= /u01/app/19c/grid